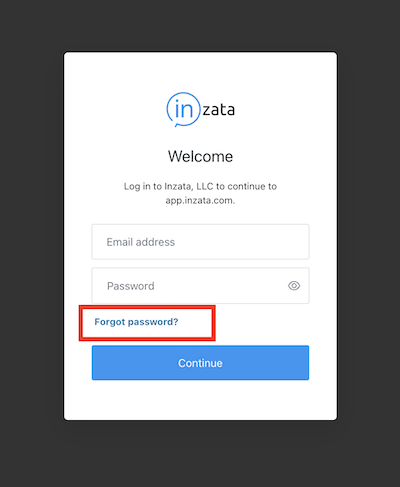

After your account has been created, complete the following steps to log in to the platform for the first time.

1. Go to app.inzata.com.

2. Click “Forgot password?”

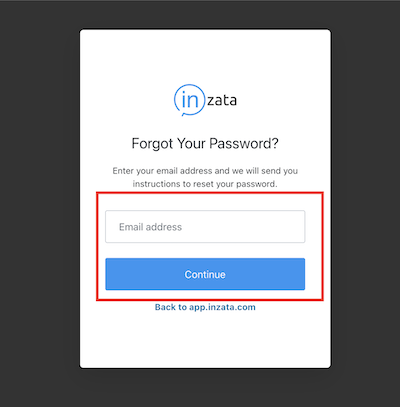

3. Enter the email address you registered with Inzata and click “Send Email”.

4. Go to your inbox and follow the steps to set up your password, then continue to log in at app.inzata.com.

On the project screen, click the + Project button. Give the project a descriptive name so you can find it easily later.

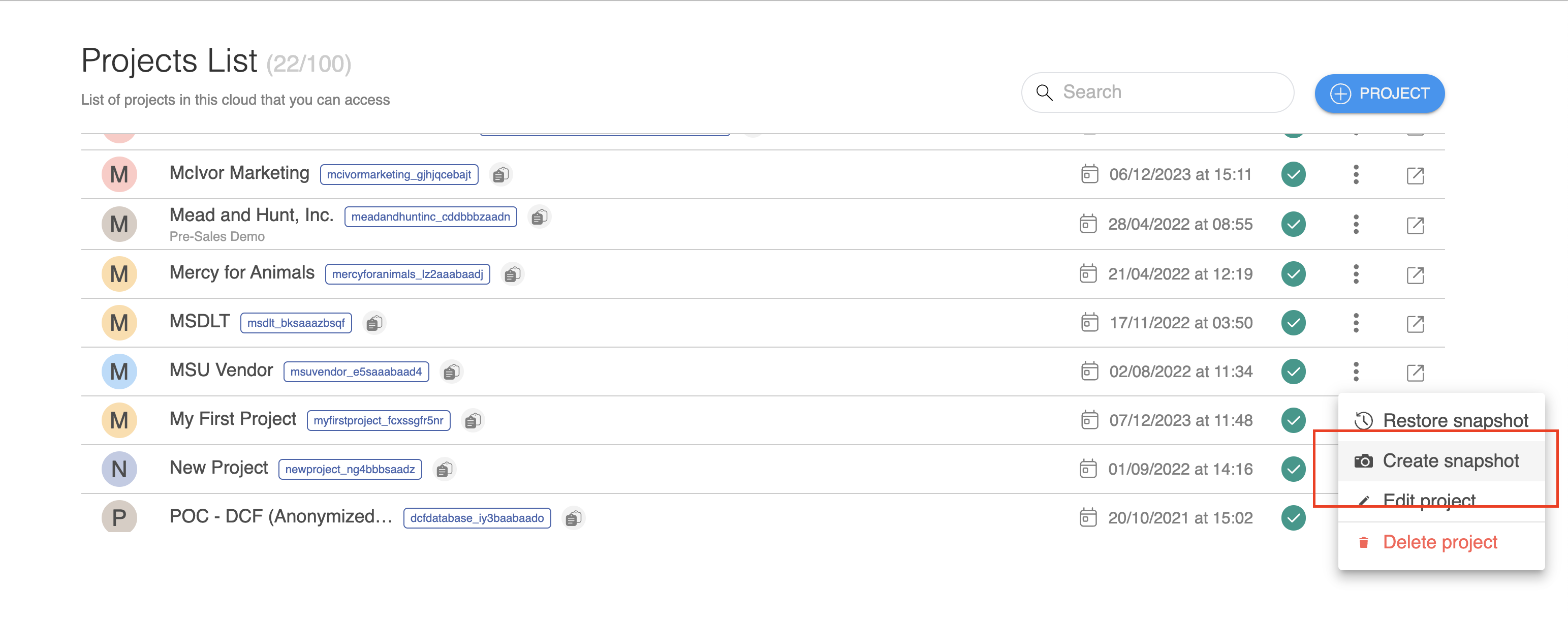

Create a snapshot of Production Project

Using the three buttons on the right side of the screen, create a snapshot. Give it a name and a description.

You can use the project snapshot you just made to create a new project, or restore the snapshot to overwrite an existing one. This is useful if you wish to have separate “Development”, “QA-Test”, and “Production” environments, because snapshots let you promote changes between them. Make your changes in the Dev project, snapshot it, and restore that snapshot to your QA environment for testing. Once testing is complete, restore the snapshot to Production. (You may want to create a pre-push snapshot of Production so you can quickly roll back the changes if necessary.)

On the project you wish to restore to, restore the snapshot as follows:

1. Using the menu on the right side of the screen, click “Restore Snapshot”.

2. Choose the snapshot you just created and press the play button.

3. This will create an exact replica of the Inzata project, including all of the flows, the data model, the metrics, the dashboards, and any authorized user accounts. Any modifications to this project will NOT go to production unless specifically pushed to it.

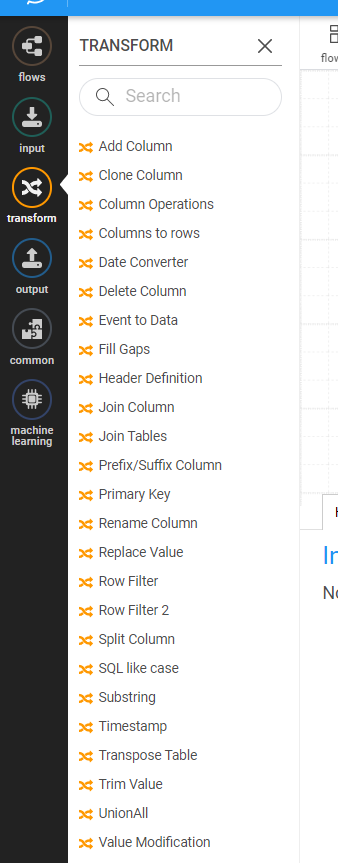

Transformation Widgets are used to make changes to your data during the loading process. There are multiple transformations available, each with its own set of actions. Multiple transformations can be chained together to accomplish more complex tasks. Branching and splitting your flow(s) into two or more sub-flows is also supported via the Row Conditional Filter transformation. Flows can then be re-consolidated using one of the Union transforms.
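
To make the idea of chaining, branching, and re-consolidating concrete, here is a minimal Python sketch. It does not use any Inzata API; the function names, the sample rows, and the `tier` column are all hypothetical and only model the behavior described above.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Transform = Callable[[List[Row]], List[Row]]


def chain(*steps: Transform) -> Transform:
    """Compose transformations so the output of each one feeds the next."""
    def run(rows: List[Row]) -> List[Row]:
        for step in steps:
            rows = step(rows)
        return rows
    return run


def strip_names(rows: List[Row]) -> List[Row]:
    # Hypothetical cleaning step: trim whitespace around the "name" values.
    return [{**r, "name": str(r["name"]).strip()} for r in rows]


def add_total(rows: List[Row]) -> List[Row]:
    # Hypothetical derived column: total = qty * price.
    return [{**r, "total": r["qty"] * r["price"]} for r in rows]


def split_rows(rows: List[Row], condition: Callable[[Row], bool]):
    """Branch a flow into (matching, non_matching) sub-flows."""
    matched = [r for r in rows if condition(r)]
    rest = [r for r in rows if not condition(r)]
    return matched, rest


rows = [
    {"name": " Ann ", "qty": 2, "price": 5.0},
    {"name": "Bob", "qty": 1, "price": 20.0},
]

cleaned = chain(strip_names, add_total)(rows)
large, small = split_rows(cleaned, lambda r: r["total"] >= 15)

# Each branch gets its own treatment before the sub-flows are merged again.
large = [{**r, "tier": "large"} for r in large]
small = [{**r, "tier": "small"} for r in small]
merged = large + small
```

In this model the conditional split plays the role of the Row Conditional Filter, and the final concatenation plays the role of a Union transform.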

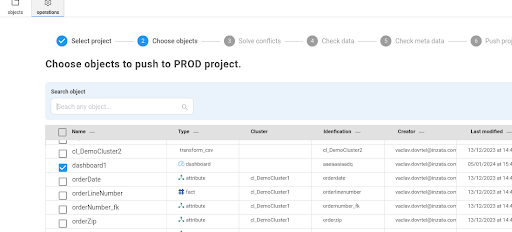

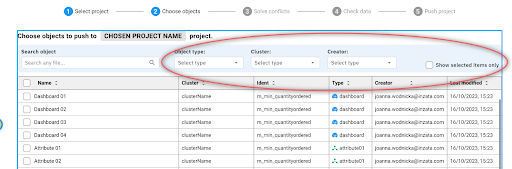

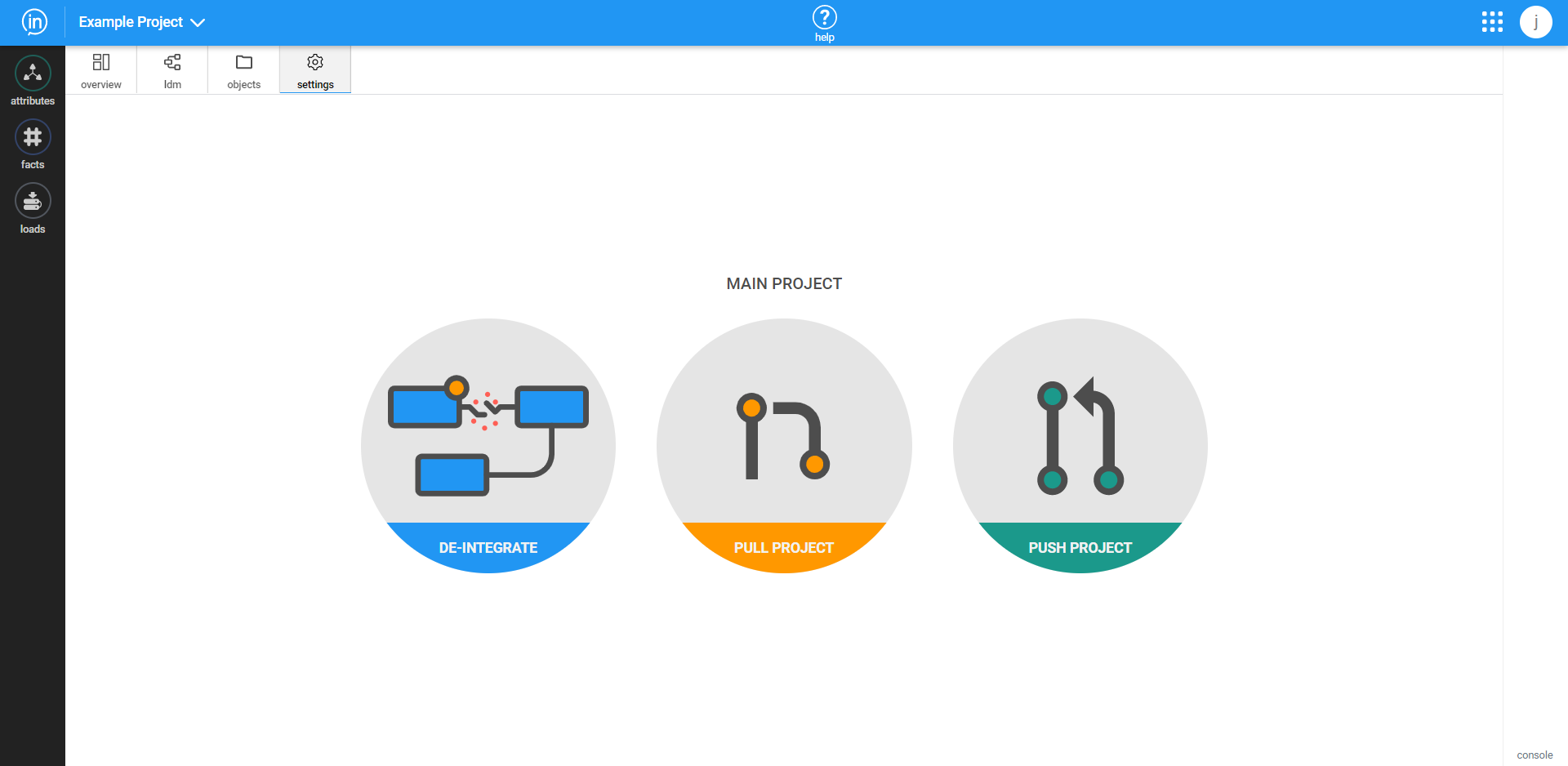

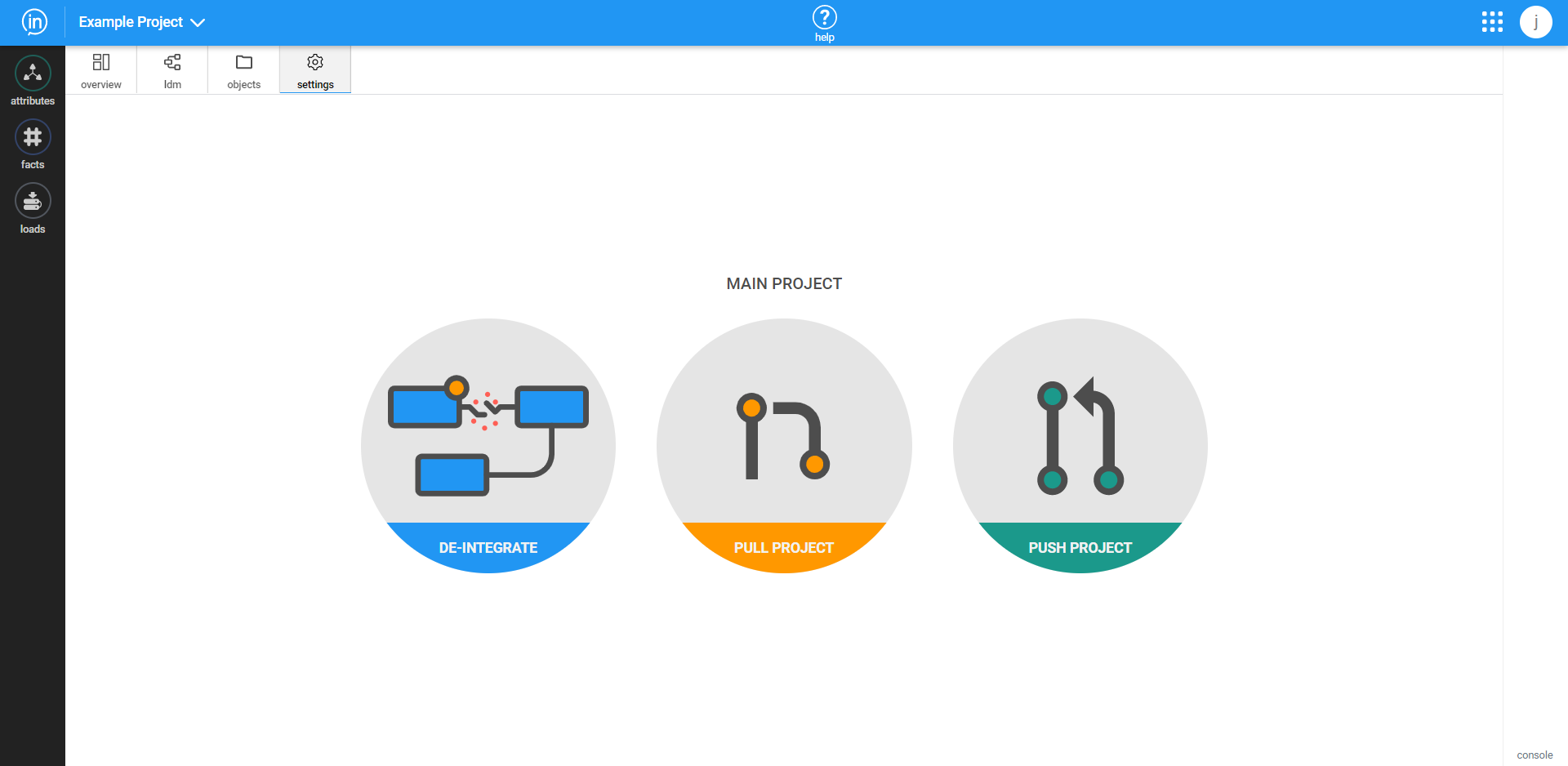

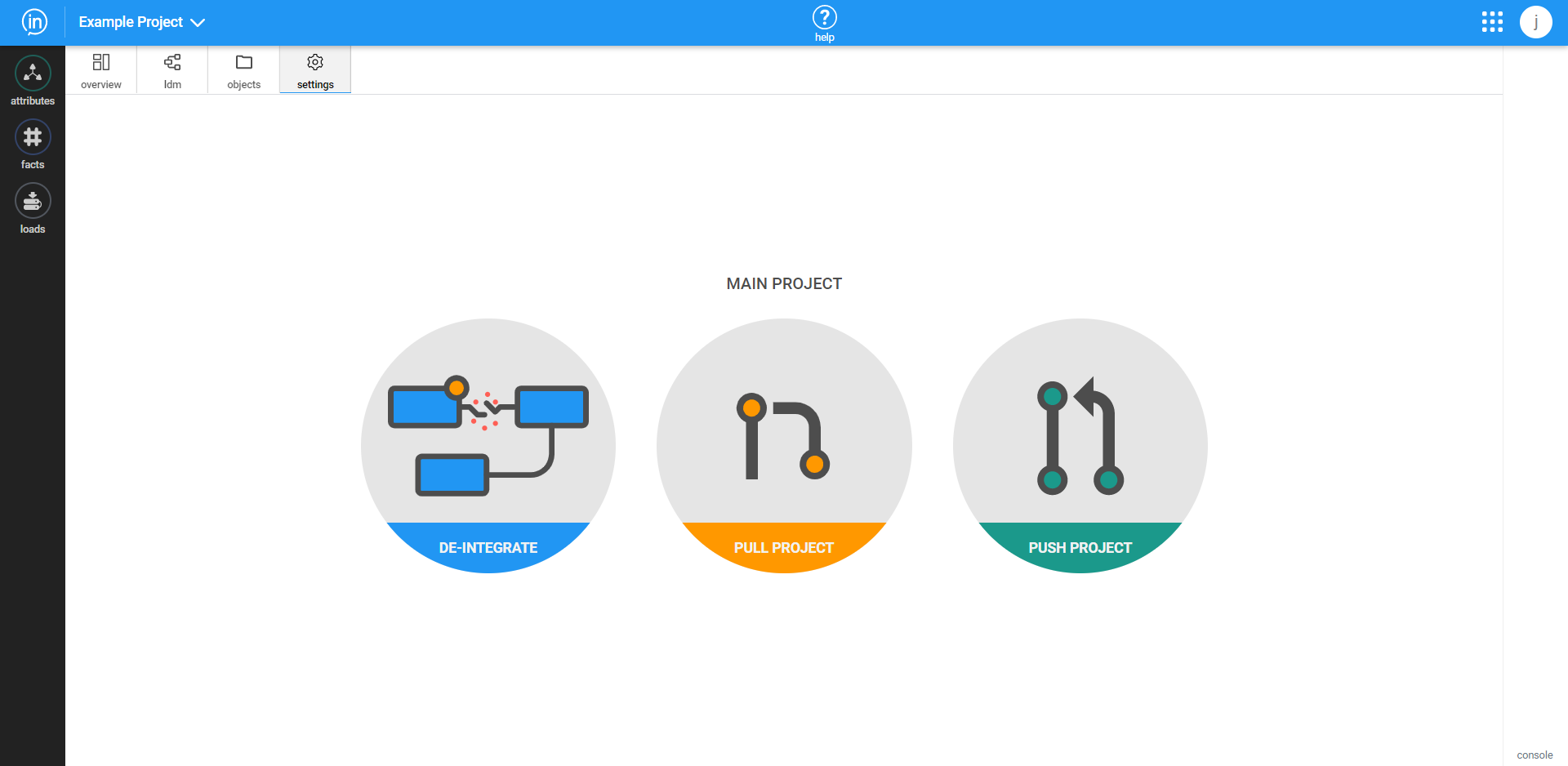

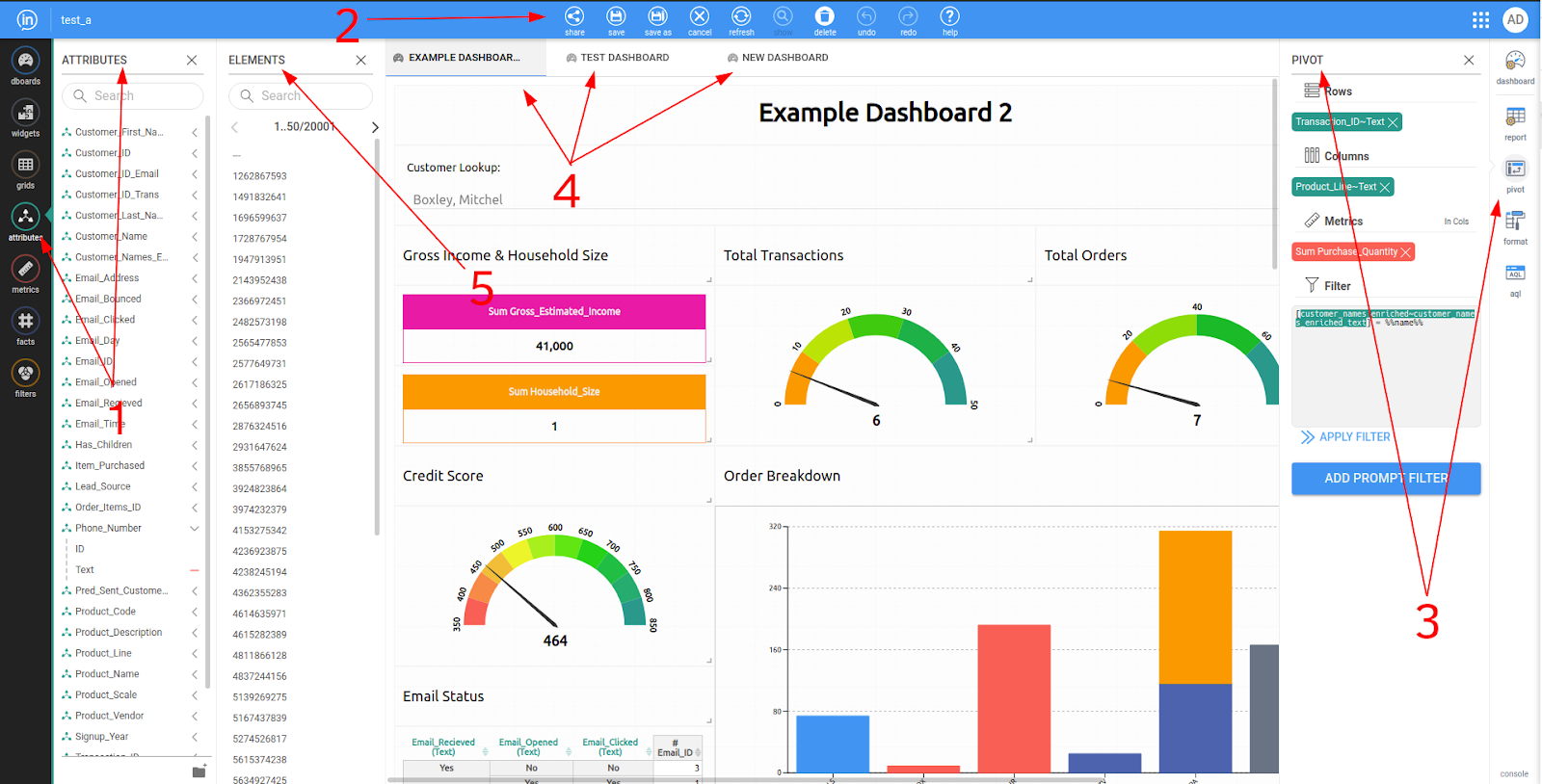

The PUSH process facilitates the smooth propagation of changes from the Quality Assurance (QA) environment to the Production (PROD) environment. This process involves selecting the project and objects to be propagated through a few-step procedure.

During the first and second steps of the PUSH process, users are required to choose the project and objects slated for propagation.

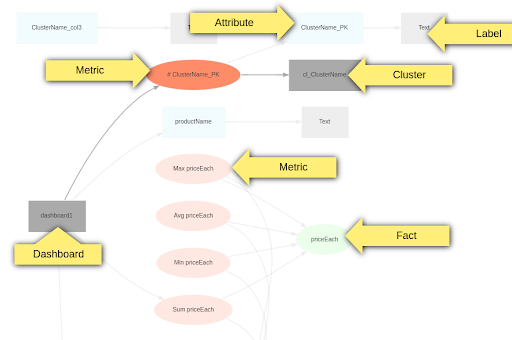

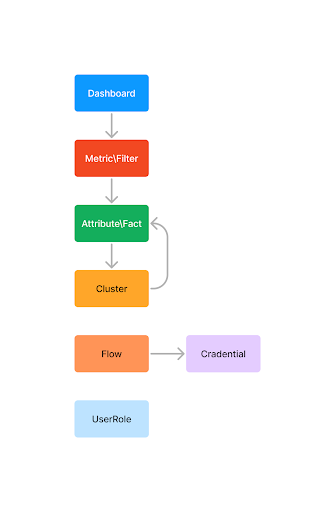

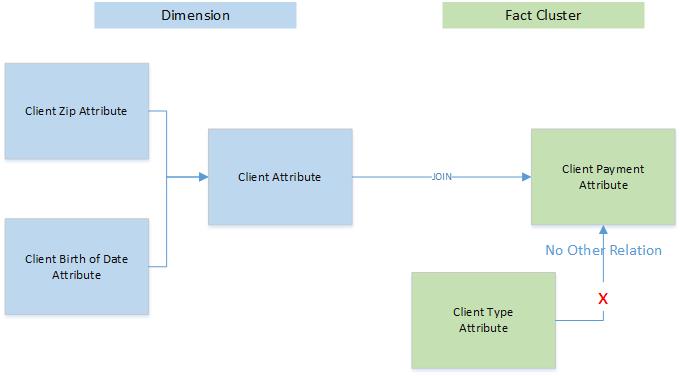

This marks a significant improvement from the previous version, as users are no longer obligated to be aware of all dependent objects. The system leverages the Object Dependency Model (ODM) graph, illustrated in Image 1, to identify and manage dependencies. This eliminates the need for users to manually specify all dependencies.

Dependency resolution relies on other dependencies, with the following exceptions:

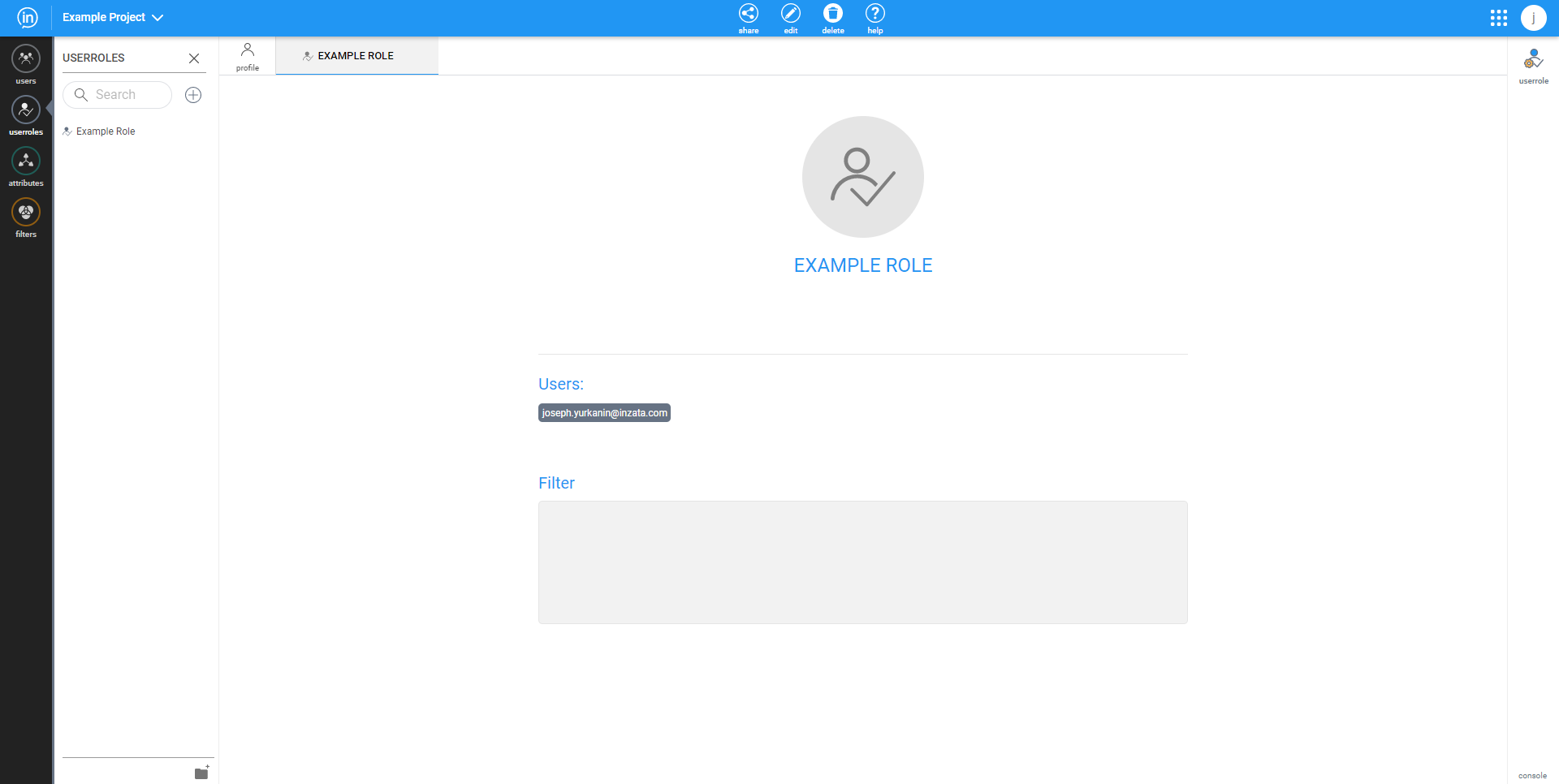

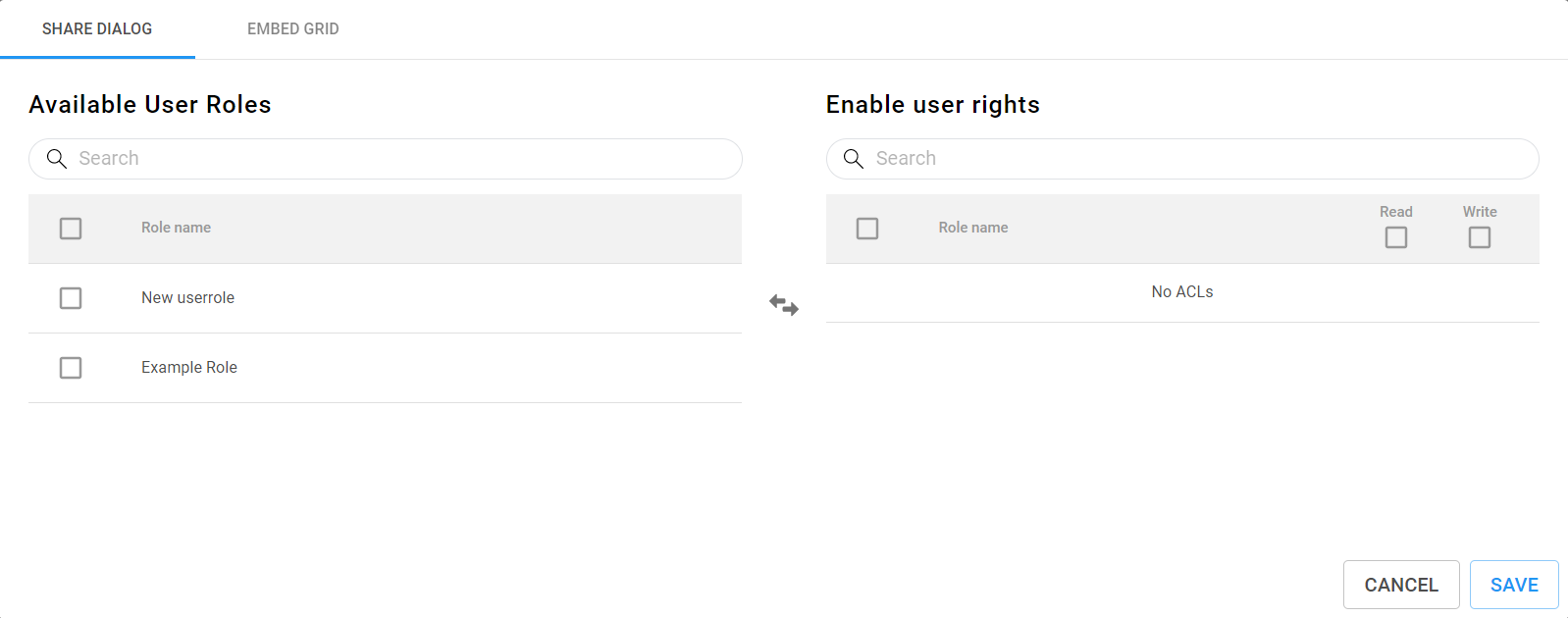

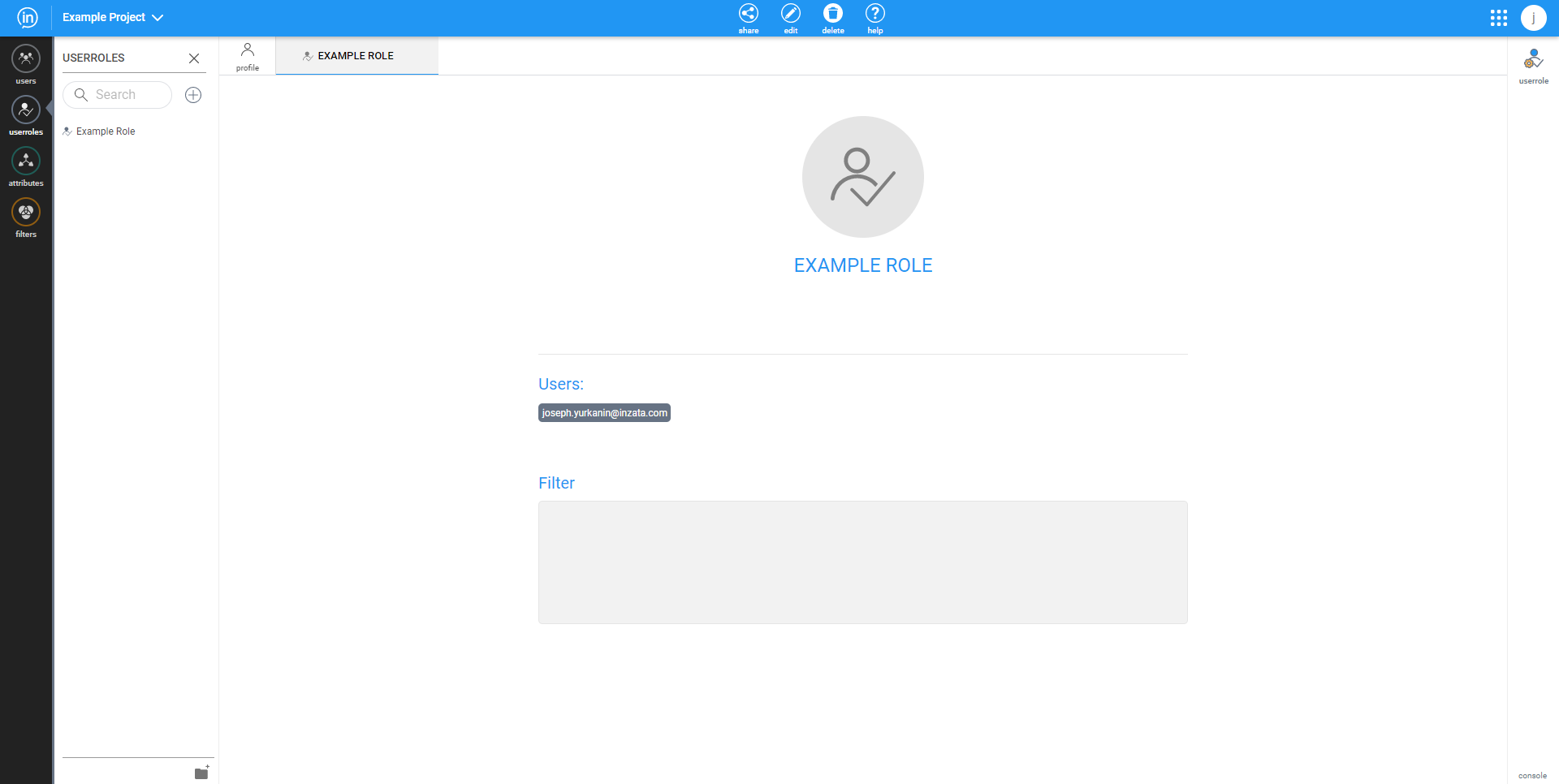

UserRole: User role objects are not automatically marked as dependencies but can be selected in Step 2 (choose object).

UserRole Users: Users within user role objects are not synchronized at all.

Cluster: Clusters are synchronized as a single object. This implies that all newly added or deleted columns will be synchronized, ensuring the cluster is identical after the synchronization process. Additionally, all dependent attributes and facts will be propagated to the target project. An attribute/fact can be deleted if the corresponding column was removed in the source project.

Cluster and Joins: Clusters are recursively synchronized with all joined clusters.

Flow: Currently, flows are not dependent on clusters or other objects and must be selected in Step 2 (choose object).

Flow Credentials: Credentials are created in the target project but without content and must be set again in the target project.

WebDAV Files: All dependent files from WebDAV, including input files for inflow and images for dashboards, are ignored and must be synchronized manually.

Folder: All objects within a folder are marked as dependent for synchronization.

Market Packages: Currently, packages cannot be synchronized. This capability will be available at the beginning of February.
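
As a rough illustration of the rules above, dependency collection can be thought of as a graph traversal that skips object types which are never pulled in automatically. The sketch below is not Inzata's implementation of the ODM; the function name, the object IDs, and the graph layout are all hypothetical.

```python
from collections import deque


def collect_dependencies(graph, selected, no_auto=frozenset()):
    """Return the selected objects plus everything reachable from them.

    graph maps an object id to the ids it depends on. Ids listed in
    no_auto (for example user roles) are never added automatically;
    they are included only when the user selects them explicitly.
    """
    result = set(selected)
    queue = deque(selected)
    while queue:
        current = queue.popleft()
        for dep in graph.get(current, []):
            if dep in no_auto or dep in result:
                continue
            result.add(dep)
            queue.append(dep)
    return result


# Hypothetical project: a dashboard uses a metric, the metric uses a
# cluster, and that cluster is joined to another cluster.
graph = {
    "dashboard_sales": ["metric_revenue", "role_analyst"],
    "metric_revenue": ["cluster_orders"],
    "cluster_orders": ["cluster_customers"],
    "cluster_customers": [],
}

to_push = collect_dependencies(
    graph, ["dashboard_sales"], no_auto={"role_analyst"}
)
```

Here the joined cluster is picked up recursively, while the user role is left out unless it is added to the selection by hand.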

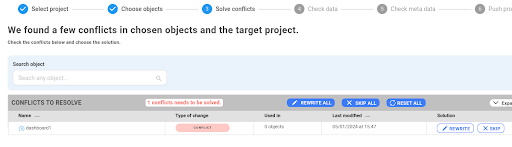

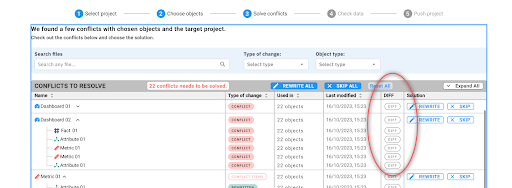

Currently, a conflict can be resolved in one of two ways. The first is to rewrite the target object, which overwrites both its title and its content. The second is to ignore the input object selected in Step 2. This step shows only objects with conflicts, not all objects that will be added. To review all changes to the target project, go to the next step.

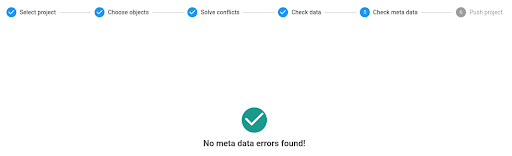

In this step, users can check for all possible objects and data issues in the target project after the push. If everything is ok it will show:

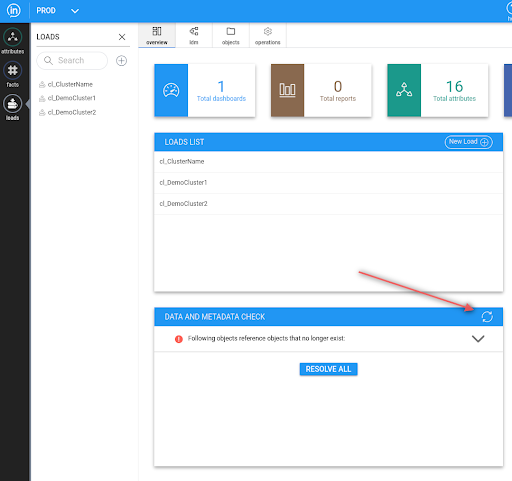

There may also be issues present in the target project before the push. Therefore, please carefully inspect the errors. If you are unsure, you can also review current issues in inModeler within the target project:

1. If an object has a “userrole” dependency, the push currently fails with “Cannot process object aaeaaaiaadq: its ACL definition points to role aafbbb1aade, which does not exist!”. This will be fixed at the end of January.

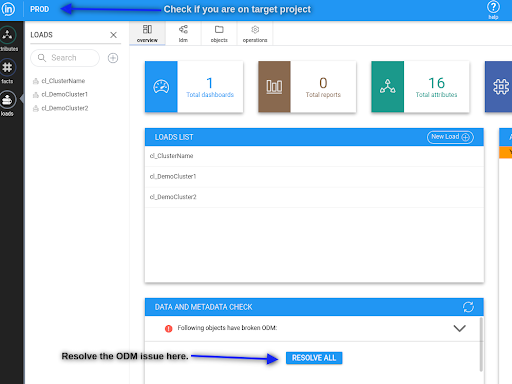

2. In step 5 (check meta data), the issue “Following objects have broken ODM: These objects can be regenerated.” may appear. To fix it, open inModeler in the target project after the push and click on resolve md issue.

WARNING: Before resolving, check that “Following objects have broken ODM” is the only issue listed.

See image:

1. Ability to push dependent packages. For example, users connect “time dimension” from the market in QA and want to propagate these changes to PROD. This capability will be available at the beginning of February.

2. Ability to filter objects in Step 2 (choose object) by type, time of modification … TBD

3. The capability to display differences between objects during conflict resolution. TBD

4. Mark inflows as dependent objects for clusters. Currently, inflow is not dependent on the cluster, and if the cluster is synchronized, the flow is not propagated to the target. TBD

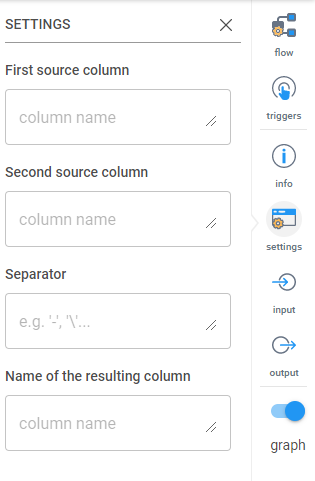

InFlow is Inzata’s data ingestion application, used to create real-time data pipelines for transporting your data. Whether you want to load in a single CSV file or connect to a database, this is where you’ll start. See the inFlow Navigation to reference particular pieces of the application. InFlow can be used to create both inbound and outbound data flows, giving you maximum flexibility.

Creating a new Flow

Design tip: It is good practice to give each data source its own flow so you can schedule and execute each one individually using Triggers. However, you can have multiple data sources within one flow.

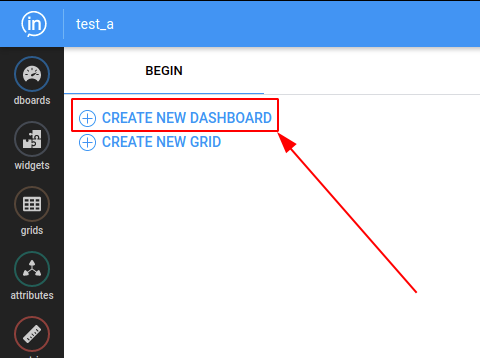

To create a new flow click either of the buttons indicated with red arrows in the picture below. If there are existing data loads, you may see them listed on this page.

Upon creating a new flow, you will see the default new flow. While you can create flows in this view, we recommend changing to a list view by toggling the button on the bottom of the right side menu.

The list view functions similarly to the graph view, but it makes the order of inputs and transformations easier to see when you are first starting to work with Inzata. After toggling to the list view, you should see the following screen:

Once your flow is created, name it using the options on the far right. Click the top icon, “Flow”, and enter a name that describes the data table or information you’re loading, so you can easily locate the flow again to make changes if needed.

You should also save your flow periodically as you work to avoid losing any work. The save button is located at the top of the page. After saving, you’ll have to click “Edit” to make any other changes to your flow.

Loading Data from a Source

Now that you have a flow created, you will use the correct input type to start the process of bringing your data into the Inzata platform. The inputs are found on the left of the screen.

When you select that, a menu will appear on the right of the screen. (You may need to click on Settings to toggle the menu open.)

Click the blue UPLOAD button, which opens a file browser so you can select a file to upload. If “Multiload” is selected, you can upload multiple files at once, but you will still only be able to connect to a single CSV file in this Flow.

Once your file is uploaded, click on it in the list so that its name appears in the “File selected” box.

While not required, we suggest checking the box next to “Use Safe Mode”, which strips out any residual formatting from the source file as it’s uploaded into Inzata.

Using the “Output data” to preview your data

After you have your file selected, you can click the arrow button at the far right of the input data (in this case CSV Reader) to run the flow up to that point.

Once you click that, it will show a preview of your data in the bottom right under the “Output Data” header. This will allow you to check that the data coming in is in the expected format and give a visual representation as you start to add transformations to your data.

Common Transformations and When to Use Them

Now that you have a file selected to upload, you can use Inzata’s built-in transformations to set up cleaning procedures to improve data quality as it’s brought into the Inzata system. The transformations are found on the left of the screen by clicking on the yellow icon with “transform” under it.

Several transformations are commonly used as best practices:

1. Date Converter: While in rare cases dates are standardized throughout a file, Inzata recommends using the Date Converter transformation to ensure all dates read in the same format.

2. Primary Key: If there is no primary key on the table, this transformation will create one. Simply enter the name of the primary key column (ex. “pkgen”) and Inzata will create a primary key column. If you would like the Primary Key to be derived from existing columns (ex. First Name, Last Name, Transaction Date), select “Generate from columns” and enter the names of the columns in the “Which Columns to Use” box. Make sure to separate the column names with a comma.

3. Prefix/Suffix Column: When loading in data from multiple tables, the chance of repeating column headers increases. To avoid confusion as you’re building your report, it is recommended to add an identifying prefix to each column header. To add the prefix to every column, leave the “Column Name” box blank. (Ex. If you’re loading in a table of Customers, add “cust_” in the Prefix box and select “use on header”.) This is typically the last transformation applied so no new columns are created without the Prefix added.
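
The Primary Key and Prefix/Suffix Column transformations can be pictured with a short Python sketch. This is only a model of the behavior described above: how Inzata actually generates key values is not documented here, so the sequential counter and the SHA-256 hash are assumptions, and both function names are hypothetical.

```python
import hashlib


def add_primary_key(rows, key_name="pkgen", columns=None):
    """Add a key column, optionally derived from existing columns."""
    keyed = []
    for index, row in enumerate(rows, start=1):
        if columns:
            # Assumption: derive the key by hashing the chosen columns.
            raw = "|".join(str(row[name]) for name in columns)
            key = hashlib.sha256(raw.encode("utf-8")).hexdigest()[:16]
        else:
            # Assumption: otherwise use a simple running number.
            key = index
        keyed.append({key_name: key, **row})
    return keyed


def prefix_headers(rows, prefix):
    """Add the prefix to every column header (Column Name left blank)."""
    return [{prefix + name: value for name, value in row.items()} for row in rows]


customers = [
    {"First Name": "Ann", "Last Name": "Lee"},
    {"First Name": "Bob", "Last Name": "Ray"},
]

keyed = add_primary_key(customers, columns=["First Name", "Last Name"])
prefixed = prefix_headers(keyed, "cust_")
```

Applying the prefix last, as recommended above, means the generated key column also receives the `cust_` prefix.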

Loading the Data

Once your data source is connected and the transformations are applied, you are ready to load your data into Inzata. Click on the Inzata Load icon to open the settings on the right. It will give you the option to name your cluster by typing a name in the box under “Cluster”. Naming the cluster here makes it easier to find in the inModeler application, but it is not required.

Use the Manual Table Input to copy and paste a table to load into Inzata. The settings are as follows:

Table Input: Type or paste a table in the box. Each value should be separated by a comma and each new row should start on a new line. The first row will be read in as headers and each subsequent row will be a new “entry” row in the table.

Table Editor: Clicking this button will give you a visual of the table being created and allow you to see how the table will load in. After clicking on the Table Editor button, the following popup will appear.

The options within the table editor are as follows:

1. To manually enter values, click on any cell. The col1, col2… will be the header columns. val1, etc will be the values in the table.

2. Click the plus (+) to add a column to the end or the minus (-) to remove the last column.

3. ‘Save’ will save the table and ‘Close’ will close the table editor.

4. Click the plus (+) to add a row to the end or the minus (-) to remove the last row.
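
The Table Input format described above (comma-separated values, one row per line, first row as headers) is plain CSV. As a quick way to check a pasted table before loading it, the following Python sketch parses the same layout with the standard `csv` module. The sample values mirror the `col1` / `val1` placeholders from the table editor and are not real data.

```python
import csv
import io

# Text as it would be typed or pasted into the Table Input box.
table_input = """col1,col2,col3
val1,val2,val3
val4,val5,val6"""

reader = csv.DictReader(io.StringIO(table_input))
table = list(reader)          # one dict per entry row
headers = reader.fieldnames   # taken from the first row
```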

The Inzata Report box operates on data that is already loaded into Inzata. This box is primarily used to generate exports of subsets of your Inzata data for other platforms, or to create flags or derived attributes from data already loaded. This can be done in the following ways:

AQL: Paste the AQL definition of the report you wish to use.

Select Grid: Select a pre-saved grid you wish to use.

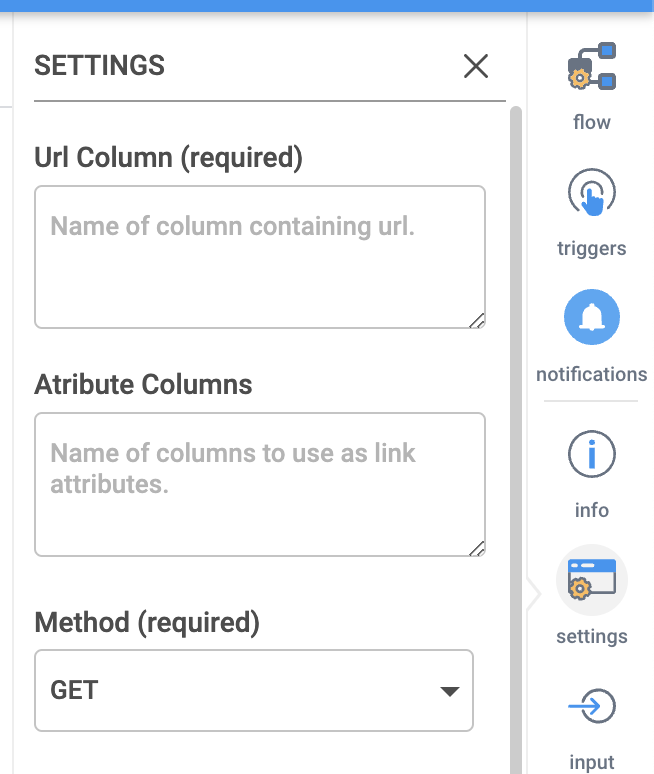

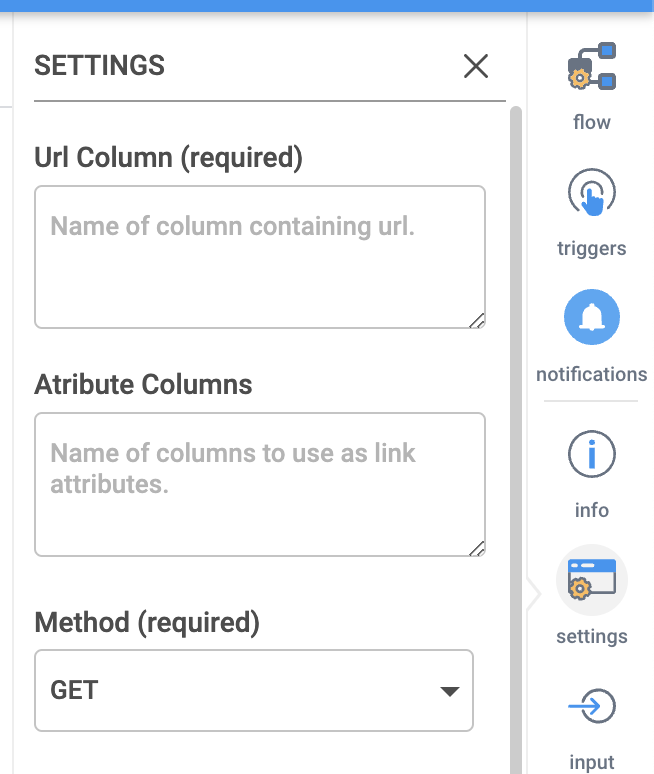

After you drag in the “Batch JSON Downloader” and select it, the settings appear on the right side of the screen. The options are as follows:

URL Column: The column from the data being fed to this input that contains the URLs of the data to be ingested

Attribute Columns: What columns in the data being fed to this input contain the data to formulate the batch of requests

Method: Whether the request is a GET or a POST. Use POST when you need to send something to the server before the data is returned. If you are not sure which to use, use GET.

Post data: If you selected the POST method, supply the data for that POST statement here.

Selector: Where the data is located or where the post statement should be given. To find it, right-click the desired data on the webpage and select “Inspect Element”. In the console that opens, hover over items until your data is highlighted, then right-click again and choose “Copy Selector”. Enter that information here.

The HTML Table input task is a great tool for scraping individual tables from a webpage, but it can only pull data from a single table as opposed to the HTML Downloader. If only one table is needed from a webpage, then it is recommended to use this input task. While it is simpler to use than the HTML Downloader, this task still involves a level of technical understanding regarding webscraping in order to configure it.

Settings

URL: The URL where the data is located

Method: Whether the request is a GET or a POST. Use POST when you need to send something to the server before the data is returned. If you are not sure which to use, use GET.

Post data (optional): If you selected the POST method, supply the data for that POST statement here.

HTML Entity Selector: Where the data is located or where the post statement should be given. To find it, right-click the desired data on the webpage and select “Inspect Element”. In the console that opens, hover over items until your data is highlighted, then right-click again and choose “Copy Selector”. Enter that information here.

Request custom headers (in JSON format) (optional): If additional authorization information is required as a header, include it here.

What to use as header: What should be recognized as the headers of the data: the first column of data, the column number, or your own header definition.

Own definition of header (optional): If you define your own headers, enter that definition here.

The CSV Local File input will read in a file in csv, tsv, and some forms of txt formats. There may be other file formats that work as well.

To use this input method, select a file from your local file system by clicking the “upload” button and using the pop-up file browser. The selected file will then be present and auto-selected in the “select or upload file” list shown below. At this point, most use cases will not require any further configuration. If the task is run and stops with an error, click the check box next to “Use Safe Mode”. This should resolve most errors, but if the error persists or another error appears, further troubleshooting will be required.

Settings

Multiload (optional): If this is selected, you will be able to use regular expressions to match multiple files.

Select or upload file: First, click the blue UPLOAD button to find a file on your device to upload into Inzata. Click on the file you would like to load with this input selector. If there are files in the box you would like to delete, click on it to highlight it and click the red DELETE button.

File selected: When you select a file in the previous box, it will be displayed here so you can confirm it is the correct file.

Without header (optional): If this box is selected, the top row of the CSV will not be brought in to Inzata.

Separator (optional): If you would like to specify the separator used, you can enter it here. Generally Inzata will be able to recognize the separator and you can leave this blank.

Encoding: If you know the encoding of your csv file, you can select it here. The default and most common is UTF-8.

Use safe mode (optional): Check this box if you would like to upload with safe mode.
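
For the Multiload setting, it can help to test a regular expression against your file names before relying on it. The Python sketch below shows the general idea. Whether Inzata matches the pattern against the whole file name or only part of it is not stated here, so the whole-name match is an assumption, and the function and file names are hypothetical.

```python
import re


def match_files(pattern, filenames):
    """Return the file names that match the pattern in full."""
    regex = re.compile(pattern)
    # Assumption: the pattern must match the entire file name.
    return [name for name in filenames if regex.fullmatch(name)]


uploaded = [
    "sales_2023_01.csv",
    "sales_2023_02.csv",
    "customers.csv",
    "sales_notes.txt",
]

# Monthly sales files only: "sales_", four digits, "_", two digits, ".csv".
selected = match_files(r"sales_\d{4}_\d{2}\.csv", uploaded)
```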

This input will read in a file in xlsx or xls format.

To use this input method, select a file from your local file system by clicking the “upload” button and using the pop-up file browser. The selected file will then be present and auto-selected in the “select or upload file” list shown below. At this point, most use cases will not require any further configuration. If the task is run and stops with an error, click the check box next to “Use Safe Mode”. This should resolve most errors, but if the error persists or another error appears, further troubleshooting will be required.

Settings

Select or upload file: First, click the blue UPLOAD button to find a file on your device to upload into Inzata. Click on the file you would like to load with this input selector. If there are files in the box you would like to delete, click on it to highlight it and click the red DELETE button.

File selected: When you select a file in the previous box, it will be displayed here so you can confirm it is the correct file.

Choose file encoding: If you know the encoding of your file, you can select it here. The default and most common is UTF-8.

Sheet name (optional): Leave blank to load in the first sheet of the excel file. To load in a sheet that is not the first, type the name in this box.

Range of file data: Select one of the four options. By default, it will select ‘Leave as is’.

• Leave as is: This will read in the entire sheet

• Start at row: Selecting this will show a box at the bottom of the list. Type in the row number you would like to start at. If you have blank columns or extra metadata at the top of your excel sheet, this will allow you to skip those rows and load in only the rows containing the data you need for analysis.

• Read n rows: Selecting this will show a box at the bottom of the list. Type in the number of rows you would like read in. This will start at the first row and only read in the number of rows you specify.

• Range of data: Selecting this will show two new boxes at the bottom of the list. The first asks you to specify the Top left cell and the second asks you to specify the Bottom right cell. This will read in every cell between the two specified cells.

Use safe mode (optional): Check this box if you would like to upload with safe mode.
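
The four “Range of file data” options can be summarized with a small Python model that works on a sheet represented as a list of rows. It assumes that row numbers are 1-based and that the Top left and Bottom right cells are both included in the range; those details, the mode names, and the function names are assumptions made for illustration.

```python
import re


def column_index(letters):
    """Convert a column label such as 'A' or 'AA' to a 0-based index."""
    index = 0
    for ch in letters.upper():
        index = index * 26 + (ord(ch) - ord("A") + 1)
    return index - 1


def parse_cell(ref):
    """Convert a cell reference such as 'B2' to (row, column), 0-based."""
    match = re.fullmatch(r"([A-Za-z]+)(\d+)", ref)
    if not match:
        raise ValueError(f"Not a cell reference: {ref!r}")
    return int(match.group(2)) - 1, column_index(match.group(1))


def select_range(sheet, mode="leave_as_is", start_row=None, n_rows=None,
                 top_left=None, bottom_right=None):
    """Return the part of the sheet that the chosen option would read."""
    if mode == "leave_as_is":
        return [list(row) for row in sheet]
    if mode == "start_at_row":
        return [list(row) for row in sheet[start_row - 1:]]
    if mode == "read_n_rows":
        return [list(row) for row in sheet[:n_rows]]
    if mode == "range_of_data":
        r0, c0 = parse_cell(top_left)
        r1, c1 = parse_cell(bottom_right)
        return [list(row[c0:c1 + 1]) for row in sheet[r0:r1 + 1]]
    raise ValueError(f"Unknown mode: {mode!r}")


sheet = [
    ["Quarterly report", None, None],   # extra metadata row
    ["id", "name", "amount"],
    [1, "Ann", 10],
    [2, "Bob", 20],
]

without_metadata = select_range(sheet, "start_at_row", start_row=2)
block = select_range(sheet, "range_of_data", top_left="B2", bottom_right="C3")
```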

Clicking the ‘input’ icon on the left side navigation will open a menu with the following options. To use one, simply click and drag it into the main canvas.

The JSON downloader will pull data in a JSON format. The settings are as follows:

URL: The URL where the data is located

Method: Whether the request is a GET or a POST. Use POST when you need to send something to the server before the data is returned. If you are not sure which to use, use GET.

Post data (optional): If you selected the POST method, supply the data for that POST statement here.

Selector (optional): Where the data is located or where the post statement should be given. To find it, right-click the desired data on the webpage and select “Inspect Element”. In the console that opens, hover over items until your data is highlighted, then right-click again and choose “Copy Selector”. Enter that information here.

Custom headers(in JSON format) (optional): If additional authorization information is required as a header it can be included in here
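
To see how the URL, Method, Post data, and Custom headers settings fit together, the following Python sketch builds the equivalent HTTP request with the standard library. It only constructs the request and does not send it. The URL, the token placeholder, and the `build_request` helper are hypothetical, and this is not how Inzata itself issues the request.

```python
import json
import urllib.request


def build_request(url, method="GET", post_data=None, custom_headers="{}"):
    """Build an HTTP request from settings like those listed above."""
    # Custom headers are supplied as a JSON object, e.g. for authorization.
    headers = json.loads(custom_headers)
    body = None
    if method.upper() == "POST" and post_data is not None:
        body = post_data.encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers=headers, method=method.upper()
    )


request = build_request(
    "https://example.com/api/orders",
    method="POST",
    post_data=json.dumps({"status": "open"}),
    custom_headers='{"Authorization": "Bearer <token>"}',
)

# Sending it would be: urllib.request.urlopen(request)
```

If the custom headers are not valid JSON, `json.loads` raises an error, which is a quick way to catch a malformed header definition before configuring the input.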

The Excel or CSV SFTP loader loads data that has been sent to an SFTP server. The settings are as follows:

Hostname of SFTP server: the host name or IP address of the SFTP server

Port: The port of the SFTP server

Username for authentication on SFTP server: Username credentials for the SFTP server

Password for authentication on SFTP server: Password for the SFTP server

Path to file on SFTP server (optional): If you want to pull a specific file, put the path name here.

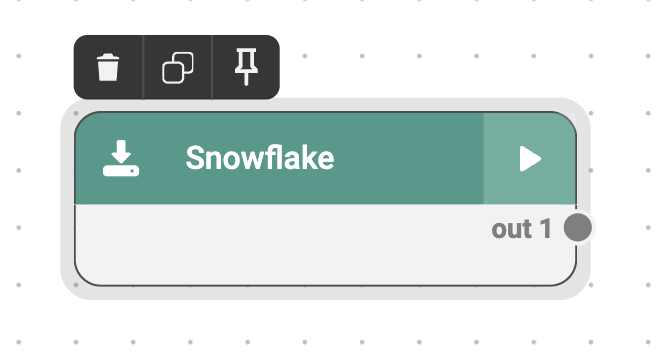

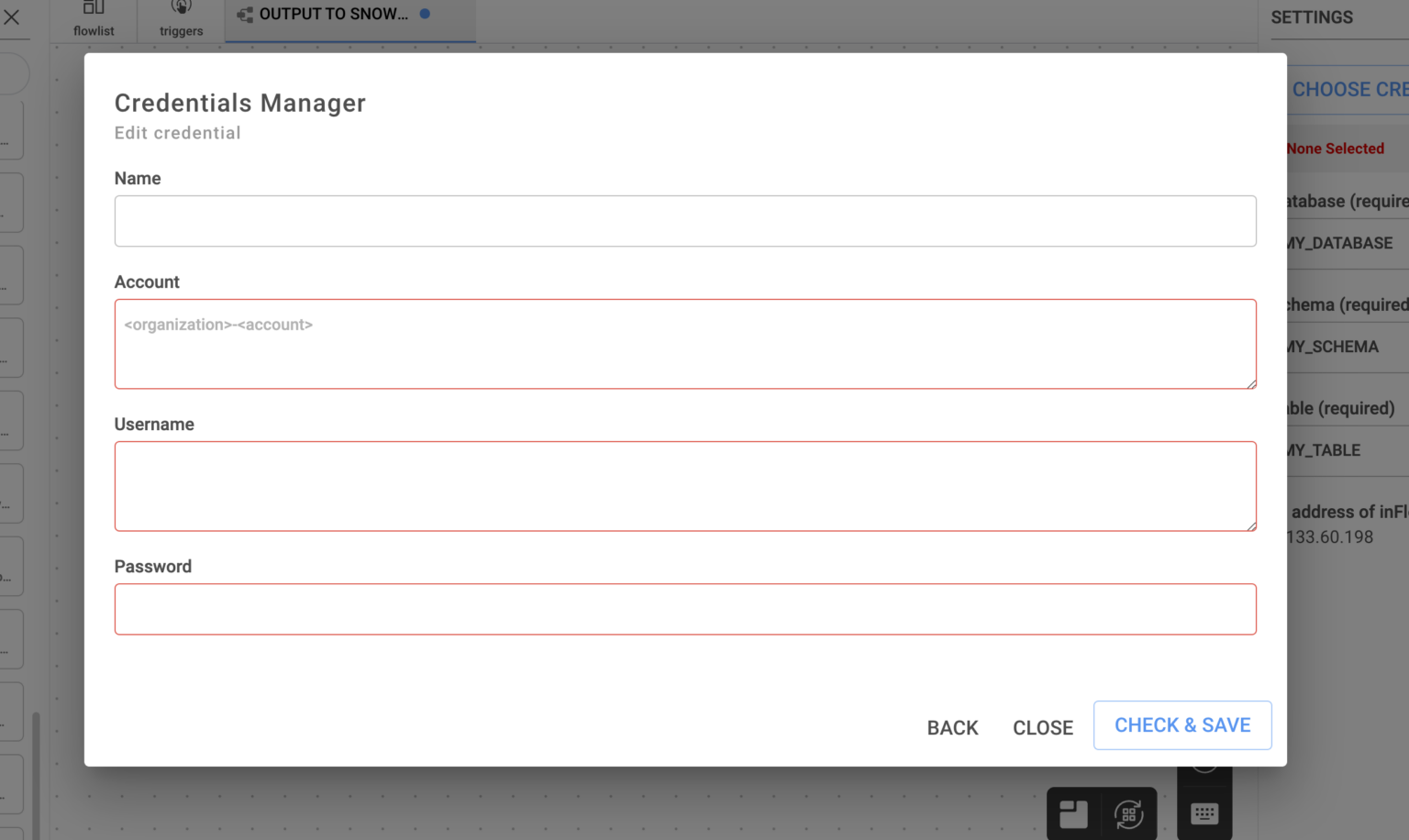

1. From the InFlow > Inputs menu, select the Snowflake connector and drag it into your workspace.

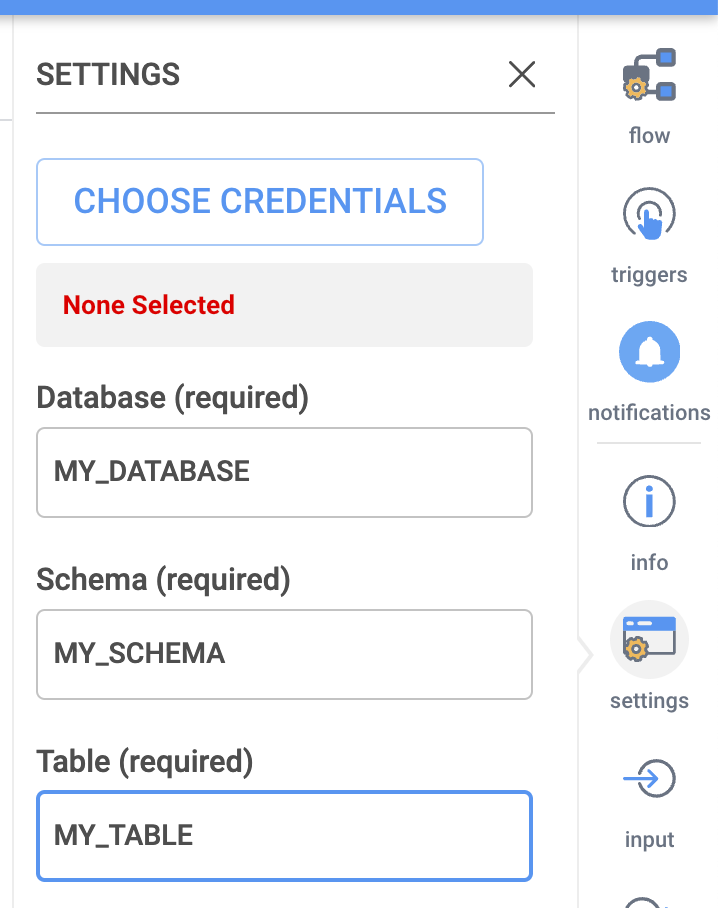

2. Then click on the connector in the workspace to open the Settings menu on the right.

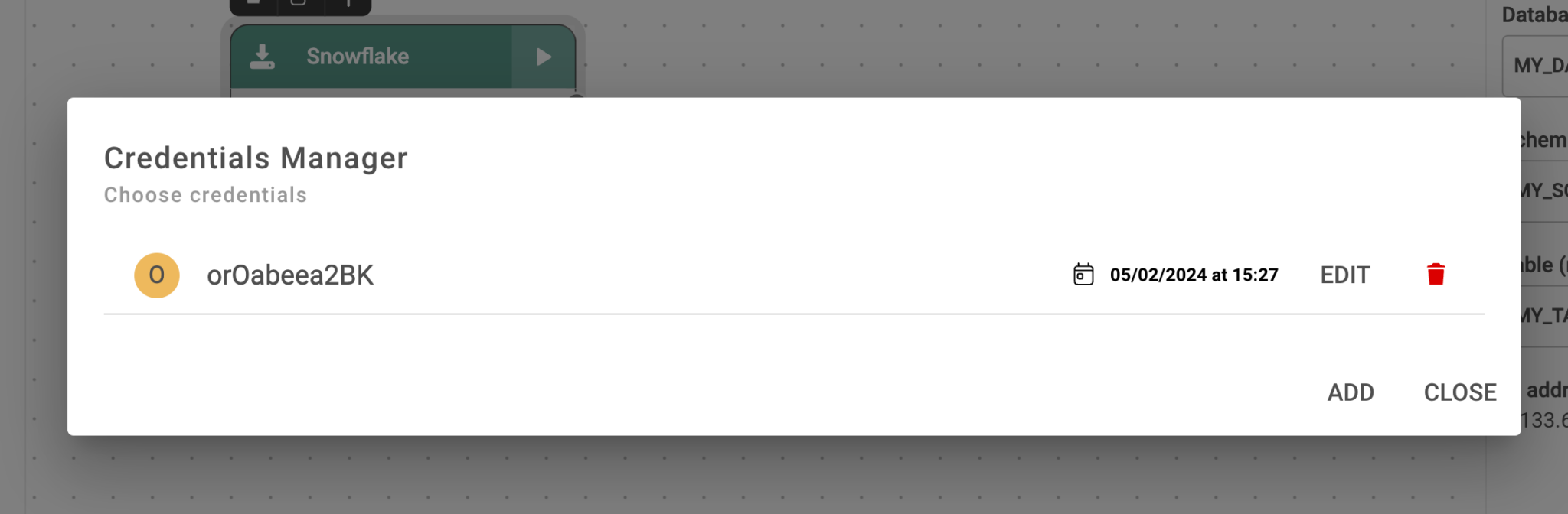

3. Click on CHOOSE CREDENTIALS to bring up the Credentials dialogue.

4. In the Dialogue that opens, click on Add if you wish to add a new Snowflake access credential. (If you wish to use an existing Snowflake credential, simply click on it and the dialogue will close.)

5. In the Credentials Manager – Edit window, you will need to collect a few pieces of information from your Snowflake account:

1. Organization ID

2. Account ID

You can ask your Snowflake administrator for these, or if you have access to Snowsight, they can both be found under Admin > Accounts.

You will also need the username and password of a Snowflake account with access to the target data.

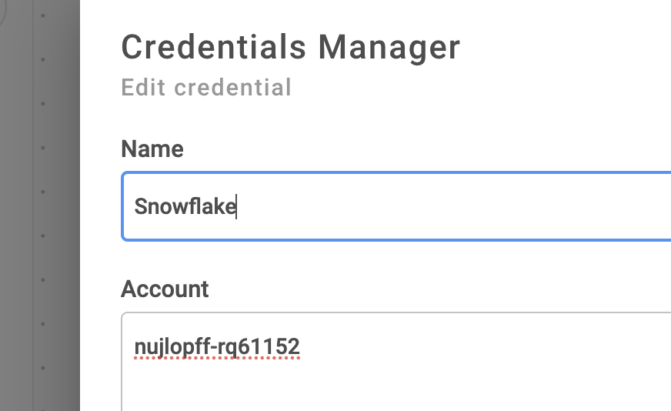

6. Give your Credential a descriptive name.

7. In the account field, enter your Organization and Account IDs in the following format. Note the dash between the two values:

Organization_ID-Account_ID

Example:

nujlopff-rq61152

(Do not include the .snowflakecomputing.com domain extension.)

8. Enter your Snowflake Username and Password and click “Check and Save” to save and test the credential.

If you receive an error, verify the following:

– Are the Org and Account IDs valid? Are they formatted properly (no spaces, a single hyphen “-” between them)?

– Does the Snowflake user have appropriate access to this account?

– Is the password correct?

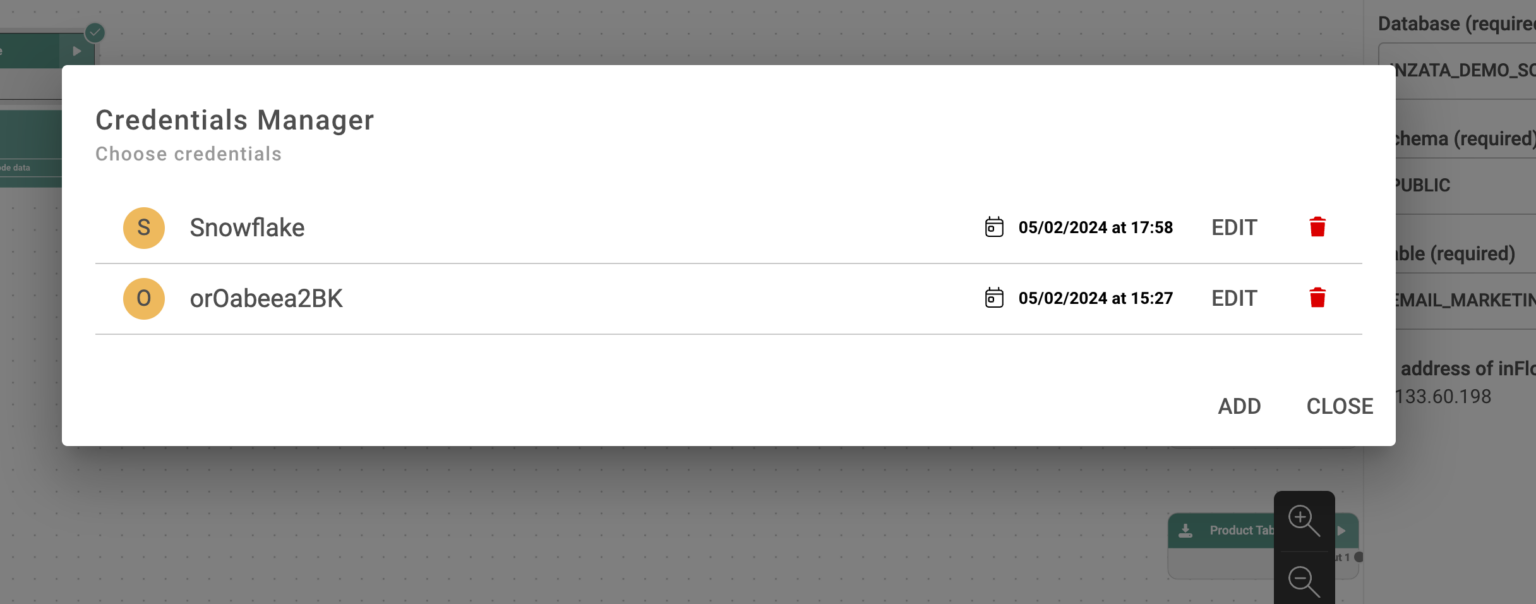

9. From the Manage Credentials dialogue, click to select the Snowflake Credential you just entered.

The window will close.

10. Now navigate back to the Settings on the Right menu. Enter the target Database, Schema and Table you want the connector to read data from, exactly as they appear in your Snowflake console.

If you wish to read data from more than one table, please configure a second connector in the workspace. You can clone the 1st connector and simply change the database parameter in the new one to speed this up.

11. You can now test your connector by loading sample data from Snowflake, then connect it to the rest of your Flow.
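
If the credential check in step 8 fails, the formatting rules for the account field from step 7 are easy to verify outside the platform. The Python sketch below encodes only the checks listed above (no spaces, a single hyphen, no domain extension). The function name is hypothetical and this is not part of Inzata or Snowflake.

```python
def check_account_field(value):
    """Return a list of formatting problems found in the account field."""
    problems = []
    if any(ch.isspace() for ch in value):
        problems.append("contains spaces")
    if value.count("-") != 1:
        problems.append("must contain exactly one hyphen")
    if ".snowflakecomputing.com" in value.lower():
        problems.append("must not include the domain extension")
    return problems


ok = check_account_field("nujlopff-rq61152")
with_domain = check_account_field("nujlopff-rq61152.snowflakecomputing.com")
with_spaces = check_account_field("nujlopff - rq61152")
```

An empty list means the value passes these formatting checks; it does not confirm that the IDs themselves exist.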

The PostgreSQL Database Connector will create a connection to a database to load data into Inzata. Shown on the right side of the screen, the settings are as follows:

Choose Credentials: Click this button to open the Credentials dialogue. Then click “Add” in the dialogue window that opens to add your first PostgreSQL login credential.

Choose Database: Choose the name of the database.

Choose Tables: Choose the tables to read from.

Use SSL (optional): Select this if you would like to secure the server to browser transaction. If the data being loaded contains secure or identifying information, this should be selected.

Use Custom Query: Type or paste your SQL Select statement into the box.

Number of rows to download (optional): Enter in the number of rows to load in. This will only load in the number of rows specified, even if the Query would normally return more rows of data.

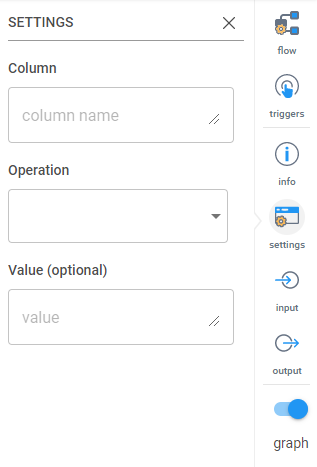

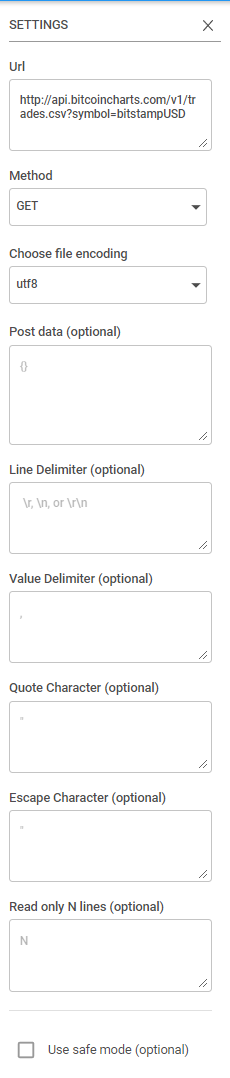

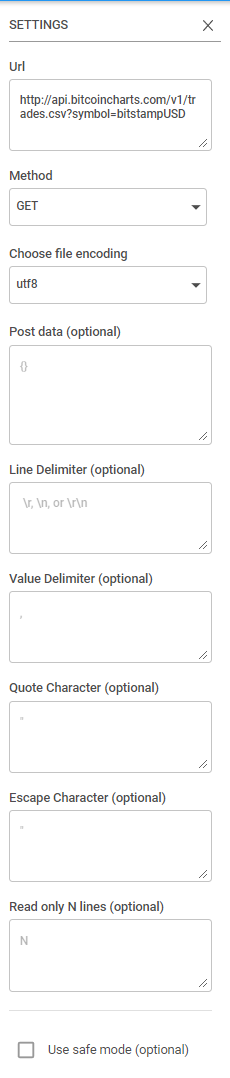

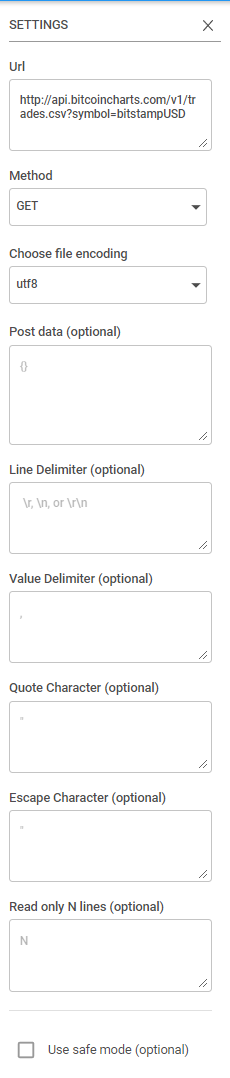

This input task requires a greater technical understanding for how files are made available via hosting or API. It is used to download csv, tsv, or txt files that are publicly hosted or are made available through an API. It may work for other file formats as well, however those are not officially supported.

Settings

URL: The web URL of the file you wish to be downloading

Method: Whether the request is a GET or a POST. Use POST when you need to send something to the server before the data is returned. If you are not sure which to use, use GET.

Choose file encoding: Whether the file is UTF-8 or ASCII.

Post data (optional): If you selected the POST method, here is where you would supply the data for that POST statement

Line Delimiter (optional): This is where you can specify the type of end of line character for the data

Value Delimiter (optional): The delimiter of the data. This should be a single character.

Quote Character (optional): The quote character, if the data is enclosed within single quotes or something else.

Escape Character (optional): How the data is escaped. Most of the time this is also a quote character.

Read only N lines (optional): If you only want to read the first X lines of data you can put a number here

Use safe mode (optional): If the data is quoted and escaped you should use this option for more checks while parsing the data
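
The delimiter, quote, and row-limit settings correspond to standard delimited-file parsing options. As a way to experiment with them on a sample of your file, here is a minimal Python sketch using the `csv` module. The parameter names mirror the settings above but are otherwise hypothetical, and counting the header row toward “Read only N lines” is an assumption.

```python
import csv
import io
from itertools import islice


def parse_delimited(text, value_delimiter=",", quote_char='"',
                    escape_char=None, read_only_n_lines=None):
    """Parse delimited text using settings like those listed above."""
    reader = csv.reader(
        io.StringIO(text),
        delimiter=value_delimiter,
        quotechar=quote_char,
        escapechar=escape_char,
    )
    if read_only_n_lines is not None:
        # Assumption: the limit counts the header row as well.
        reader = islice(reader, read_only_n_lines)
    return list(reader)


# Semicolon-delimited sample with single-quoted values.
sample = "id;name;note\n1;'Ann';'likes; semicolons'\n2;'Bob';'plain'\n"

rows = parse_delimited(
    sample, value_delimiter=";", quote_char="'", read_only_n_lines=2
)
```

Because the note is quoted, the semicolon inside it is kept as part of the value instead of starting a new column.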

This Inflow input method is a highly flexible tool that involves some technical understanding of how webscraping works. It differs from the HTML Table Downloader in that it can scrape from more than one location at a time and requires an input table. This input table must contain a column of URLs and it may also contain one or more columns containing the link attributes associated with each given URL.

Settings

URL Column: The column from the data being fed to this input that contains the URLs of the data to be ingested

Attribute Columns: What columns in the data being fed to this input contain the data to formulate the batch of requests

Method: Whether the request is a GET or a POST. Use POST when you need to send something to the server before the data is returned. If you are not sure which to use, use GET.

Post data: If you selected the POST method, supply the data for that POST statement here.

Selector: Where the data is located or where the post statement should be given. To find it, right-click the desired data on the webpage and select “Inspect Element”. In the console that opens, hover over items until your data is highlighted, then right-click again and choose “Copy Selector”. Enter that information here.

HTML entity type: Whether the data is contained in a table object or a block of text.

What to use as header: What should be recognized as the headers of the data: the first column of data, the column number, or your own header definition.

Own definition of header (optional): If you define your own headers, enter that definition here.

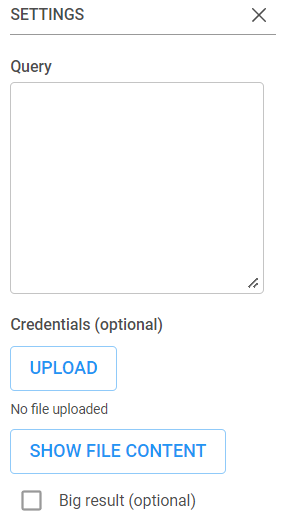

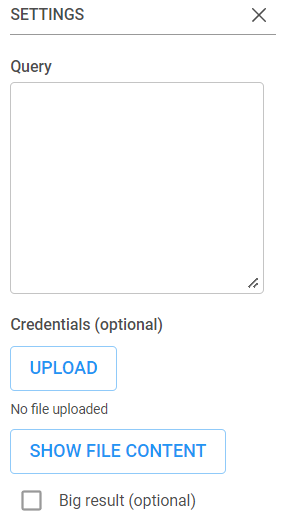

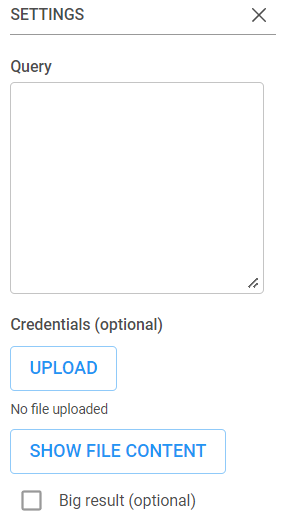

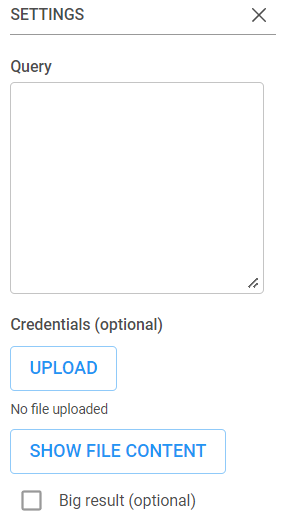

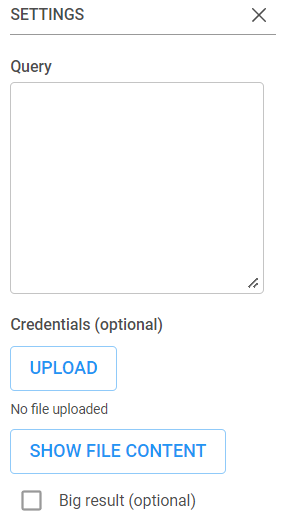

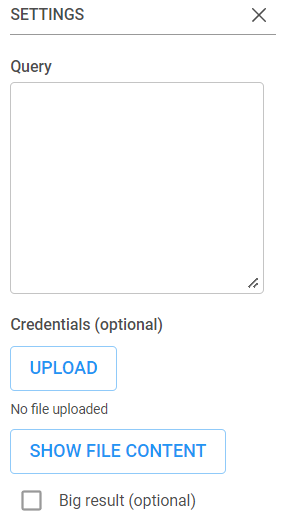

Pulling data from BigQuery is made straightforward with this connector. To learn more about how to get a JWT for access credentials if needed, please refer to Google’s documentation.

Settings

Query: This should be a query that selects a table or a view from within Google Big Query. Please note that Google Big Query will refuse to export tables if they have a lot of “Order” or “Group” statements, or potentially if the table is poorly built in Google Big Query.

Credentials (optional): If there are access restrictions on the Google Big Query data, provide the JSON access credentials for a service account with access to that data here.

Show File Content: Use this button to review the uploaded credentials JSON file. It opens a pop-up window within the browser that displays the file contents.

Big Result (optional): This option is for users using legacy SQL in their query to set the AllowLargeResults flag.

You should now have downloaded a JSON file with credentials for your service account. It should look similar to the following:

    {
      "type": "service_account",
      "project_id": "*****",
      "private_key_id": "*****",
      "private_key": "*****",
      "client_email": "*****",
      "client_id": "*****",
      "auth_uri": "https://accounts.google.com/o/oauth2/auth",
      "token_uri": "https://oauth2.googleapis.com/token",
      "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
      "client_x509_cert_url": "*****",
      "universe_domain": "googleapis.com"
    }
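
Before uploading the credentials file, you may want to confirm that it is valid JSON and looks like a service account key. The Python sketch below is one way to do that. The key names are taken from the sample file above, but which of them Inzata actually requires is an assumption, and the function name and sample values are hypothetical.

```python
import json

# Assumption: these keys from the sample file are the ones worth checking.
EXPECTED_KEYS = (
    "type",
    "project_id",
    "private_key_id",
    "private_key",
    "client_email",
    "client_id",
    "token_uri",
)


def check_service_account(text):
    """Return a list of problems found in a credentials JSON string."""
    data = json.loads(text)
    problems = [f"missing key: {key}" for key in EXPECTED_KEYS if key not in data]
    if data.get("type") != "service_account":
        problems.append('"type" should be "service_account"')
    return problems


# Deliberately incomplete sample to show what the check reports.
sample = json.dumps({
    "type": "service_account",
    "project_id": "my-project",
    "client_email": "loader@my-project.iam.gserviceaccount.com",
})

issues = check_service_account(sample)
```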

In InFlow, enter the following information in the Connector's Settings tab

Selected

Dataset (required)

Enter the name of an existing dataset from your Google Big Query project

Table (creates new if does not exist) (required)

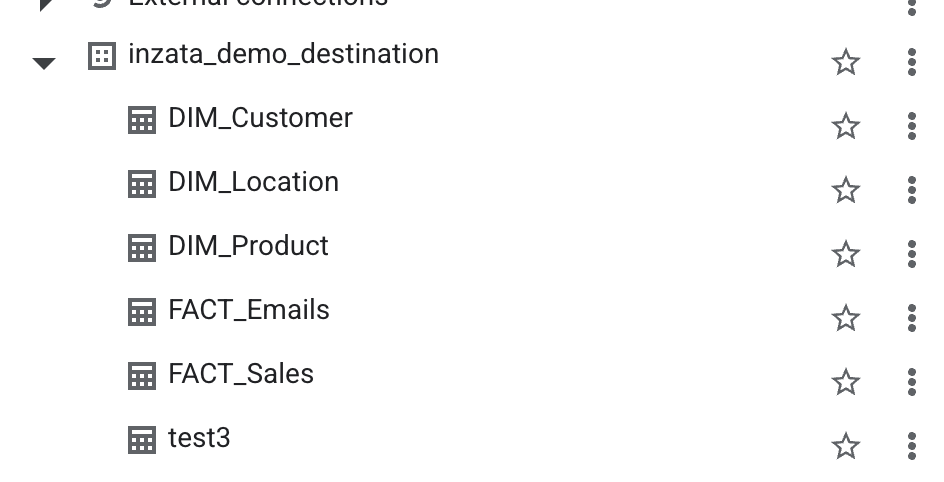

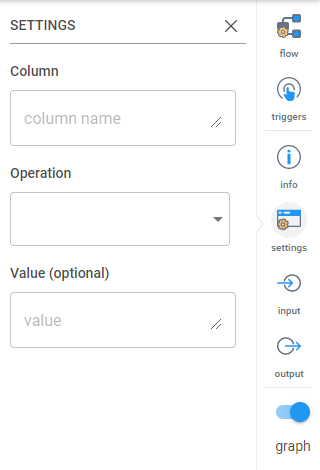

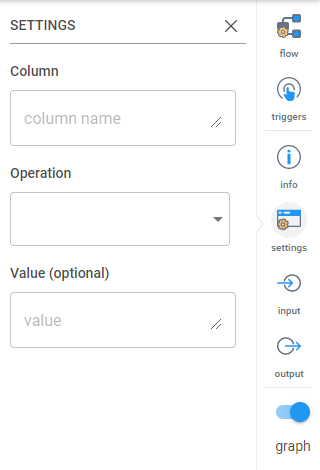

This transformation modifies values in columns. The settings are:

Column: The name of the column that has the values you want to modify.

Operation:

• Plus (+): Add a value to the values in the column

• Subtraction (-): Subtract a value from the values in the column

• Division (/): Divide the values in the column by a number

• Multiplication (*): Multiply the values in the column by a number

• Modulo (%): Replace the values with their remainder after division by a number.

• Exponentiation (**): Raise all the values to a power.

• Not (for boolean): Modify boolean values

• Prepend (for string): Put a value at the front of a string

• Append (for string): Put a value at the end of a string

Value (optional): The value you want to use to modify the entries.
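
The operations listed above map directly onto ordinary arithmetic, boolean, and string operators. The following Python sketch models them on a list of rows. The operation keys and the `modify_column` function are hypothetical names chosen for illustration and are not Inzata identifiers.

```python
import operator

# Each entry takes (current_value, setting_value) and returns the new value.
OPERATIONS = {
    "+": operator.add,
    "-": operator.sub,
    "/": operator.truediv,
    "*": operator.mul,
    "%": operator.mod,
    "**": operator.pow,
    "not": lambda current, _value: not current,       # Value is not needed
    "prepend": lambda current, value: f"{value}{current}",
    "append": lambda current, value: f"{current}{value}",
}


def modify_column(rows, column, operation, value=None):
    """Apply one operation to every entry in the given column."""
    func = OPERATIONS[operation]
    return [{**row, column: func(row[column], value)} for row in rows]


orders = [{"id": "7", "price": 10, "paid": True}]

tripled = modify_column(orders, "price", "*", 3)
remainder = modify_column(orders, "price", "%", 3)
flipped = modify_column(orders, "paid", "not")
labelled = modify_column(orders, "id", "prepend", "ID-")
```

The “Not” operation is the reason the Value setting is optional: it acts on the column alone.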

Acts like a SQL UNION ALL: it joins two datasets together by appending the values for columns that exist in both datasets and adding nulls for columns that exist in only one dataset.

There are no settings for this transformation.
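
A small Python sketch shows the behavior on two datasets whose columns only partly overlap. It models the description above with lists of dictionaries, using `None` for null; the function name and sample rows are hypothetical.

```python
def union_all(left, right):
    """Append two datasets, filling columns missing on one side with None."""
    columns = []
    for row in left + right:
        for name in row:
            if name not in columns:
                columns.append(name)
    # Duplicate rows are kept, as with UNION ALL.
    return [{name: row.get(name) for name in columns} for row in left + right]


customers_a = [{"id": 1, "name": "Ann"}]
customers_b = [{"id": 2, "city": "Tampa"}]

combined = union_all(customers_a, customers_b)
```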

This transformation will transpose a table. There are no configurable settings.
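
For reference, transposing means that rows become columns and columns become rows. A minimal Python illustration, using a made-up table stored as a list of rows:

```python
table = [
    ["metric", "q1", "q2"],
    ["sales", 10, 20],
    ["cost", 4, 6],
]

# zip(*table) groups the first items of every row, then the second, and so on.
transposed = [list(column) for column in zip(*table)]
```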

The AI widget within Inzata requires a properly configured AI flow to have been previously set up and run within InFlow. For information on how to do this, please see the inFlow help document. The AI widget has two options within the widget menu:

1. AI

2. Format

AI Menu

In this menu the user can customize the AI widget.

The label option allows the user to set the title of the AI widget. The inflow component dropdown allows the user to select which previously designed AI algorithm to use. The column, value option is where the user sets which columns from the algorithm should be represented with which values for the prediction.

Format Menu

The format menu is how the appearance of an AI widget is changed.

Bringing this transformation in lets you add a column at the end of your table with a prefilled value. The settings are as follows:

Name: Name of the column

Value: Value to fill the column with.

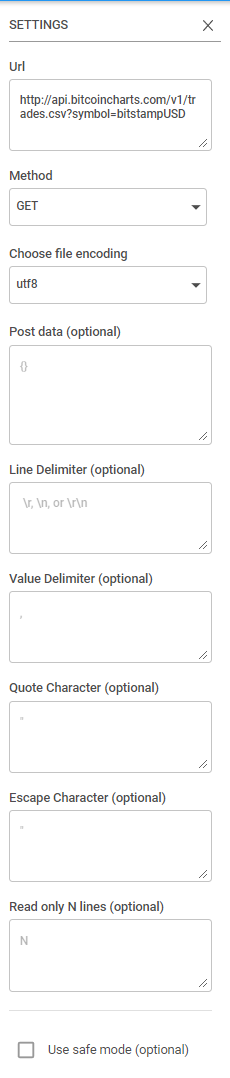

This transformation will join two columns together into a single column. Entries in each column will be combined by a given string. A new column will be created as a result of this transformation. The settings are:

First source column: Type in the header of the column you want as the first (or left) part of the combined column. Example: LastName

Second source column: Type in the header of the column you want as the second (or right) part of the combined column. Example: FirstName

Separator: Type in the character you want to separate the two combined entries. Example: ,

Name of the resulting column: Type in the name of the new combined column. Example: Full Name
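Using the example values from the settings above (LastName, FirstName, a comma separator, and Full Name), the effect can be sketched in Python as follows. `merge_columns` is a hypothetical helper used only for illustration.

```python
def merge_columns(rows, first, second, separator, result):
    """Add a `result` column combining `first` and `second` with `separator`."""
    return [
        {**row, result: f"{row[first]}{separator}{row[second]}"}
        for row in rows
    ]


people = [{"LastName": "Lovelace", "FirstName": "Ada"}]
merged = merge_columns(people, "LastName", "FirstName", ",", "Full Name")
```

The source columns are kept and a new `Full Name` column is added alongside them.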

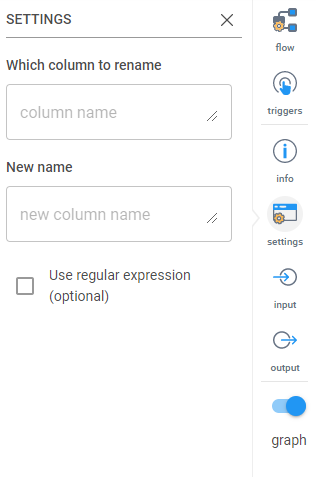

Use this to rename a column. The settings are:

Which column to rename: Type the name or a regular expression to find the column you want to rename.

New name: Type in the new name for the column.

Use regular expression (optional): Select this if you want to use regular expression to identify the column.

Inzata’s Fully Automated data quality solution

Ensuring the accuracy, completeness, and reliability of data is critically important for organizations. The integration of diverse data sources into a centralized data warehouse poses challenges that, if not addressed, can lead to compromised data quality. This article describes the Data Quality Assurance Program employed by the Inzata Platform and the IZ Data Quality System, both of which play pivotal roles in maintaining data integrity throughout the entire data lifecycle.

Inzata Platform’s Data Quality Assurance Program

The Inzata Platform’s data quality assurance program is designed to address challenges encountered during the entry of information into the data warehouse. Several key strategies contribute to enhancing data quality:

• Data quality improves with better knowledge of source system data and better access to source data owners and analysts. Well-documented data attributes, entities, and the business rules governing them further improve modeling and reporting from the data warehouse. Understanding how data is translated and filtered from source to target is crucial, so access to Source System Developers is mandatory when documentation is insufficient. In cases where developers lack comprehensive source definitions due to personnel changes, an empirical “data discovery” process may be required to unveil source business rules and relationships.

Integrated planning between the source and target systems, with agreement on tasks and schedules, enhances efficiency and reduces resource-wasting surprises. Effective communication plans during projects, which provide timely updates on current status and address issues promptly, are integral to this collaborative approach.

• Collaborative planning between source and target systems minimizes surprises and increases efficiency.

• A well-communicated data quality control process provides current status updates and addresses issues promptly.

1. Conducting Data Quality Design Reviews with all relevant groups present is a proactive measure to prevent defects in raw data, translation, and reporting. In cases where design reviews are omitted due to tight schedules, a compensatory allocation of at least double the time is recommended for defect corrections.

2. Implementing Source Data Input/Entry Control is crucial for data quality improvement. Anomalies arise when human users/operators or automated processes deviate from defined attribute domains and business rules during data entry. This includes issues like free-form text entry, redefinition of data types, referential integrity violations, and non-standard uses of data entry functions. Screening for such anomalies and enforcing proper entry at the source creation significantly enhances data quality, positively impacting multiple downstream systems that rely on the data.

Expanding the Data Quality Assurance Horizon with Inzata Platform’s Program and IZ Data Quality Control Process

Beyond procedural activities, the Inzata Platform’s Quality Assurance Program considers user and operational processes that may impact data quality. Meanwhile, the IZ Data Quality System provides a comprehensive solution with its Data Audit functions, Data Quality Statistics, and ETL Data Quality Monitoring.

Common Challenges and Solutions:

1. Operating Procedures:

• Solution: The business users responsible for the source systems and the end users’ processes need to be engaged in identifying standard procedures that create bad data. They should understand the root causes and they should be chartered with process improvement of those data creation processes.

2. Mis-Interpretation of Data:

• Solution: Developers in the data warehouse must possess a comprehensive understanding of the data to ensure accurate modeling, translation, and reporting. In cases of incomplete documentation, conducting design reviews for both the data model and translation business rules becomes crucial. It is essential to document business rules influencing data transformation, subject them to an approval process, and, in instances of personnel changes, undertake an empirical “data discovery” process to unveil business rules and relationships.

3. Incomplete Data:

• Solution: To address incomplete data, a thorough review of each data source is imperative. Specific causes of incompleteness should be identified and targeted with quality checks. This includes implementing scripts to identify transmission errors and missing data, as well as generating logs during load procedures to flag data not loaded due to quality issues. Routine review and resolution of these logs, coupled with planned root cause analysis, are essential to spot and correct repetitive errors at their source. A designated data quality steward should regularly review the logs for effective quality control.

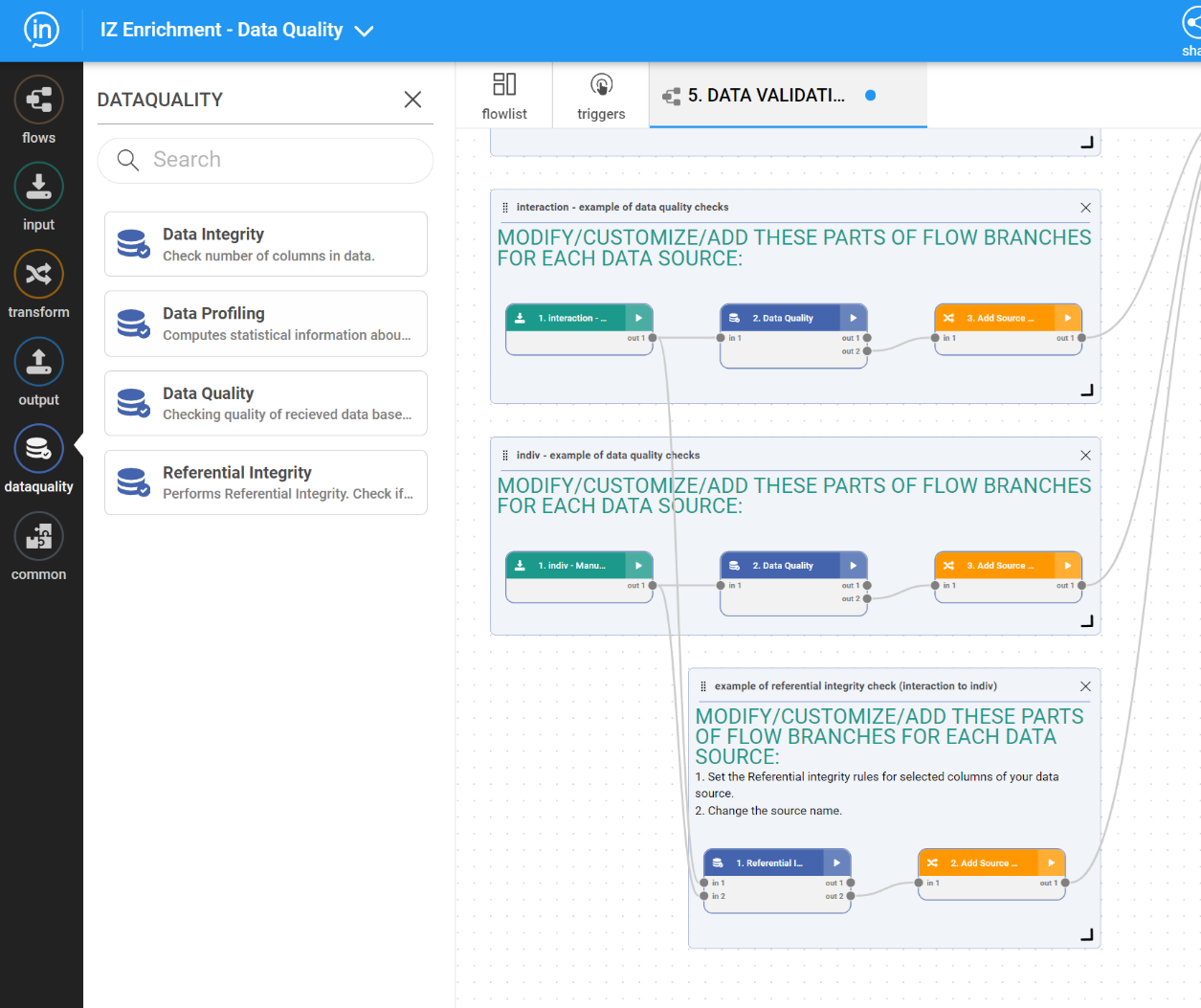

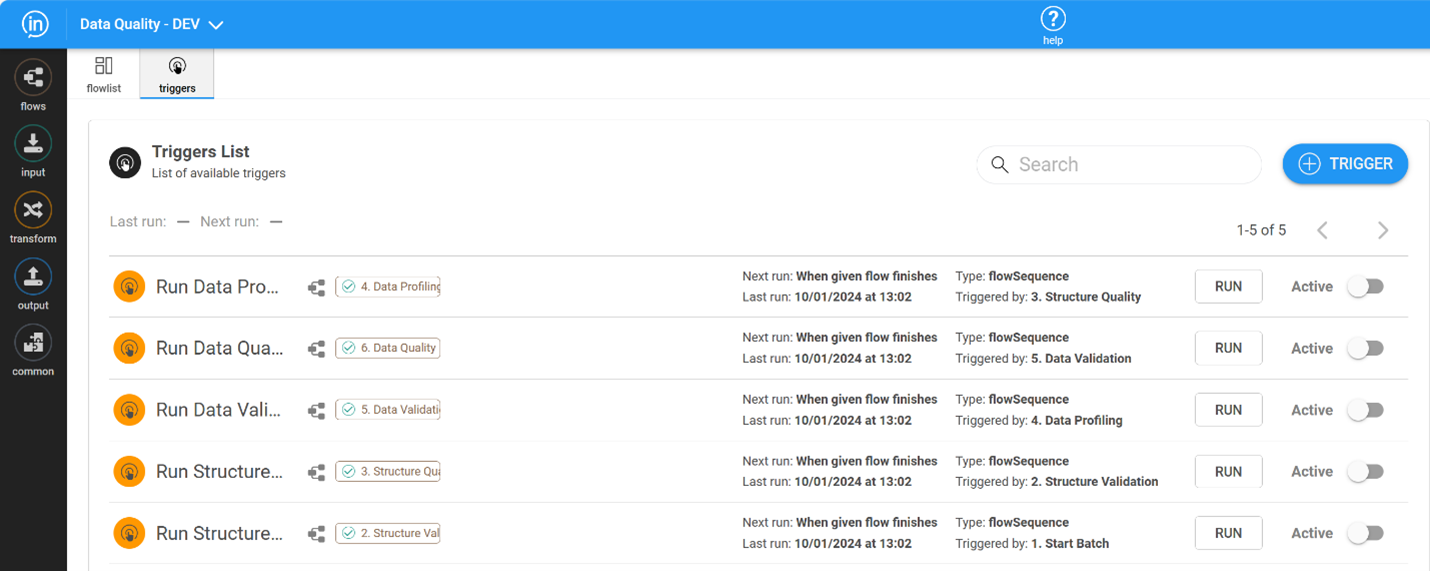

IZ Data Quality System: A Closer Look

The IZ Data Quality System employs a workflow processing approach with comprehensive reporting systems utilizing the following IZ Platform modules:

1. InFlow:

• InFlow Special Data Quality Functions – InFlow has pre-built special Data quality functions allowing easy and fast configuration of various functions (see the list at the end of this document).

• Data Integrity – checks the number of columns in each data cluster.

• Data Profiling – calculates basic statistical patterns and characteristics for a given data cluster.

• Data Quality – checks data quality features of received data based on a pre-configured set of selected audit functions (see the list at the end of this document).

• Referential Integrity – checks if values from the first data set are present in the second data set.

These functions are available in a special IF menu:

• DQ Flows monitor data anomalies in provided data and verify the correctness of source data. The DQ Flow system also makes automatic go/no-go decisions on whether the ETL process continues, based on the results of data auditing procedures.

• On-the-fly communication with an automated messaging system created in the IZ Project performs data verification after transformation into target PDLs and lookup files used by the IZ project

• Loading DQ audit results into Inzata – results of Data profiling and DQ analysis are loaded into IZ DW

• Automation – the orchestration of all the processes is supported by the Triggers functionality of the InFlow module. The system is designed to make smart go/no-go decisions in a fully automated way.

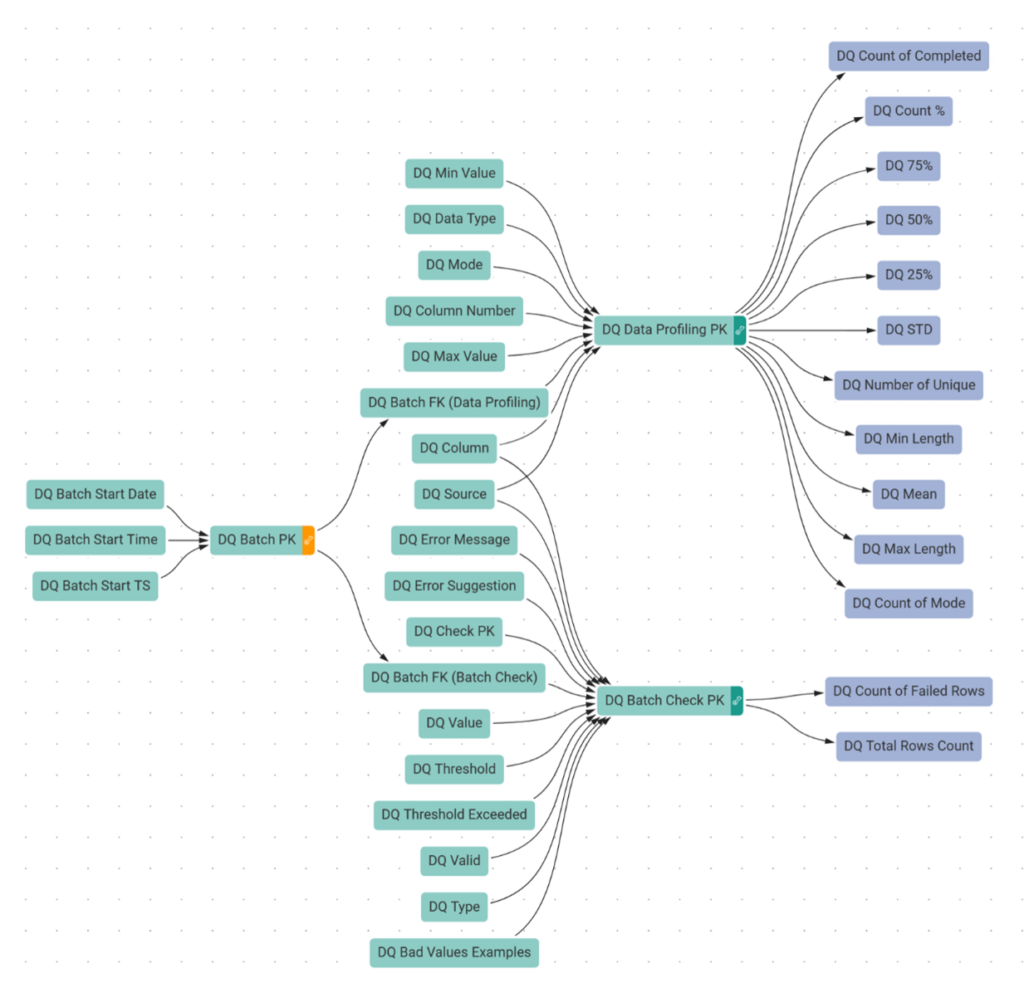

2. InModeler

• The dimensional data structure is designed to accommodate results from the ETL process of data quality analysis in the InFlow module.

3. InBoard

• This module supports flexible reporting over the data profiling and DQ audit results, see examples in the next section below.

The IZ ETL process includes the following DQ flow types:

1. Start Batch Flow – adds a new batch record to the Batch cluster. It is typically run when a new data file is to be processed for data quality.

2. Structure Validation – during this phase the system verifies the presence of all the columns in a given data source file.

3. Structure DQ Manager – decides whether the data file structure is correct. If it is, the system continues with the next phase; otherwise, processing stops and automatically generated messages are sent to predefined recipients.

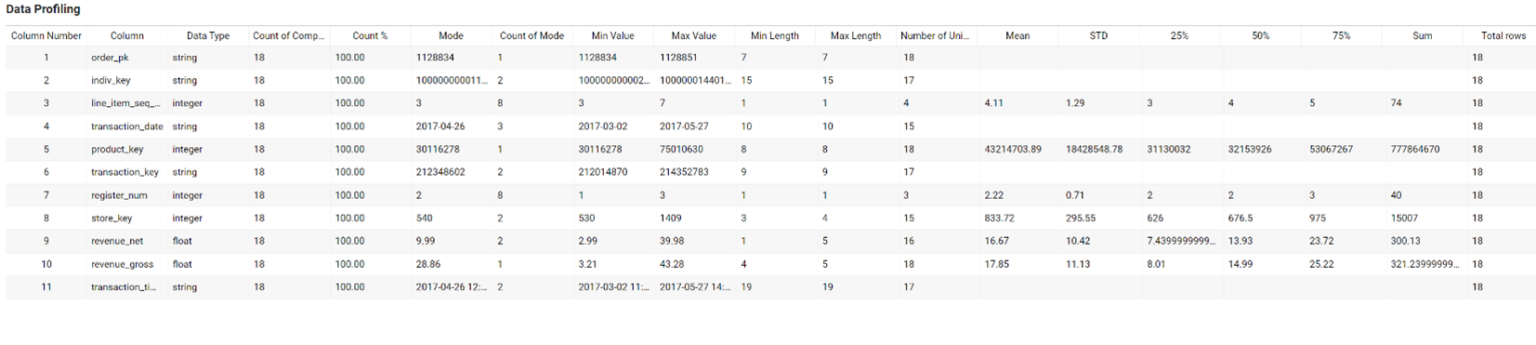

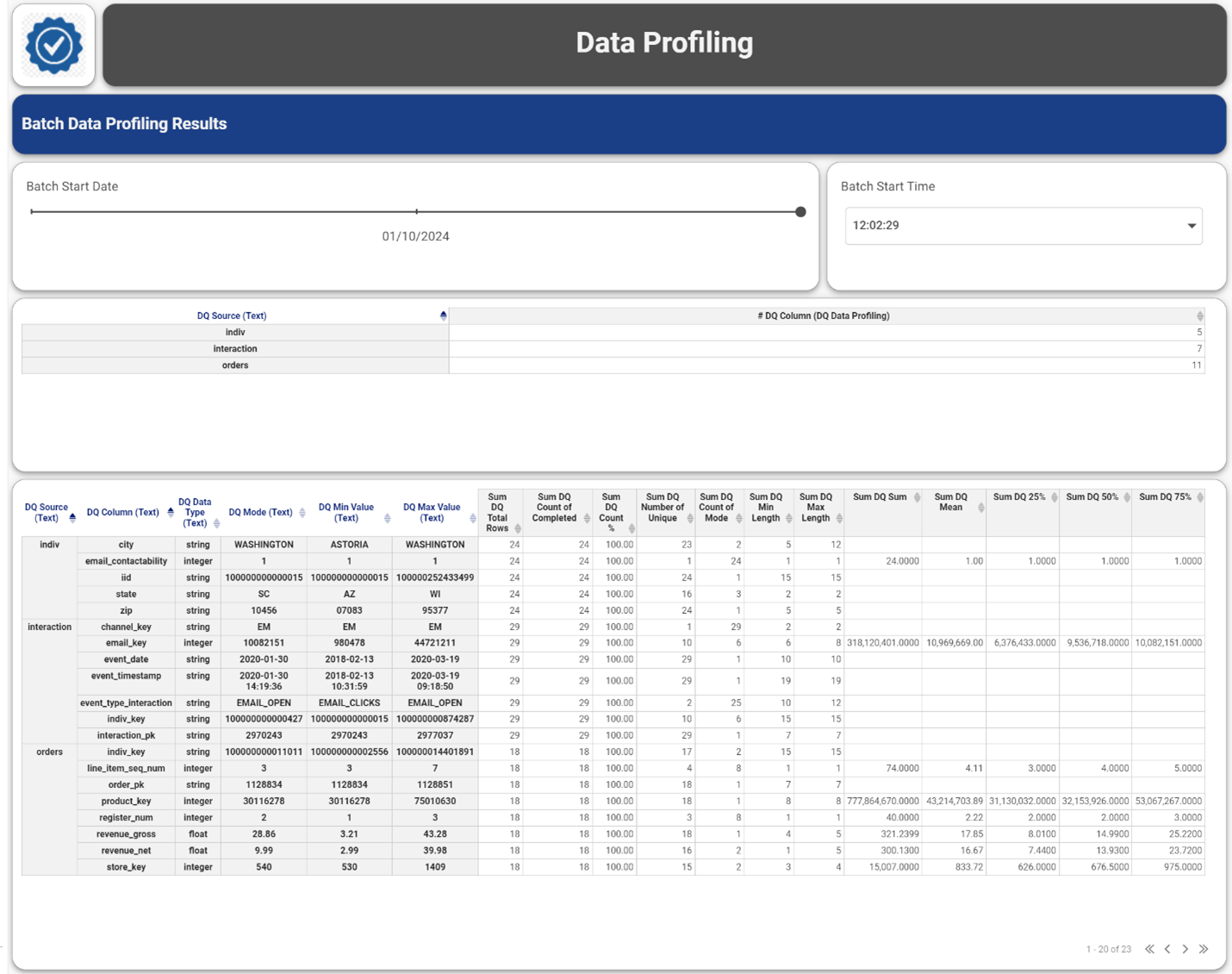

4. Data Profiling – basic statistical profiles are generated over all the columns and the results are loaded into DQ DW

5. Data Validation – all the predefined audit checks are executed over all the columns of a given data source. The results are loaded into DQ DW. All possible DQ check types are listed in the Appendix section.

6. Data DQ Manager – decides whether the data quality of all the columns is acceptable. If it is, the system continues with the next phase; otherwise, processing stops and automatically generated messages are sent to predefined recipients. Some critical data checks may end with an error; however, if the number of failed rows stays within a given, predefined threshold, the process is still allowed to continue to the next phase.

7. Reporting – during the processing phase, the results of structure validation, data profiling, and data quality auditing are loaded into DQ DW (steps 2 and 5). The results are available for comprehensive reporting and analysis. Examples of dashboards can be seen below:

The integration of the Inzata Platform’s Data Quality Assurance Program and the IZ Data Quality System creates a comprehensive framework for maintaining high-quality data throughout its lifecycle. By addressing challenges at multiple stages and employing innovative tools, organizations can ensure the reliability and accuracy of their data, empowering informed decision-making and maximizing the value derived from their data assets.
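The threshold-based go/no-go decision made in the Data DQ Manager step can be pictured with a small Python sketch. Everything here is an assumption made for illustration: the `data_dq_manager` function, the check names, and the way thresholds are stored are hypothetical and do not describe how Inzata implements the step internally.

```python
def data_dq_manager(failed_rows_by_check, allowed_failures):
    """Return ("go", {}) if every check stays within its threshold.

    failed_rows_by_check: {check name: number of rows that failed}
    allowed_failures:     {check name: maximum tolerated failed rows}
    Checks without an explicit threshold tolerate zero failed rows.
    """
    breaches = {
        name: failed
        for name, failed in failed_rows_by_check.items()
        if failed > allowed_failures.get(name, 0)
    }
    return ("no-go", breaches) if breaches else ("go", {})


results = {"null_check": 3, "format_check": 0, "range_check": 12}
thresholds = {"null_check": 5, "range_check": 10}
decision, breaches = data_dq_manager(results, thresholds)
```

In this example the null check fails on 3 rows but stays within its threshold of 5, while the range check exceeds its threshold, so the overall decision is `no-go`.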

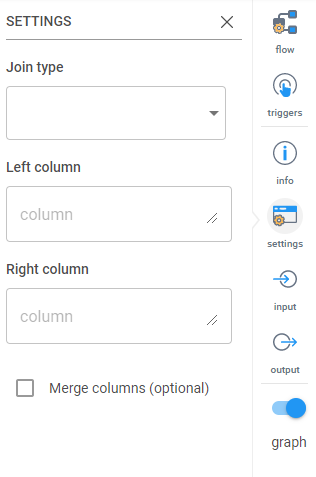

This transformation will perform a join similar to a SQL join of two tables. Currently you can only join on a single column. The settings are:

Join types:

• Inner Join: This will take the column to match and the resulting table will only have rows whose values appear in BOTH tables.

• Outer Join: This will take the column to match and will combine the rows of both tables with values matching on the identified columns. If any values do not match, it will enter null values in the other table but still bring in the row.

• Left Join: This keeps every row from the left table and brings in only the rows from the right table whose values match on the identified columns. Any left-table row without a match in the right table is still brought in, with null values in the right table columns.

• Right Join: This keeps every row from the right table and brings in only the rows from the left table whose values match on the identified columns. Any right-table row without a match in the left table is still brought in, with null values in the left table columns.

Left Column: Identify by the column header which column you want to be the ‘left’ column.

Right Column: Identify by the column header which column you want to be the ‘right’ column.

Merge column (optional): Select this if you want to merge the left and right columns.
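To make the four join types concrete, here is a minimal Python sketch of a single-column join over rows held as dictionaries. The `join` helper and the sample tables are hypothetical, and the sketch assumes the two tables have no column names in common.

```python
def join(left_rows, right_rows, left_col, right_col, how="inner"):
    """Join two tables on one column; `how` is inner, outer, left, or right."""
    left_names = list(left_rows[0]) if left_rows else []
    right_names = list(right_rows[0]) if right_rows else []
    joined = []
    matched_right = set()
    for left in left_rows:
        found = False
        for index, right in enumerate(right_rows):
            if left[left_col] == right[right_col]:
                joined.append({**left, **right})
                matched_right.add(index)
                found = True
        if not found and how in ("left", "outer"):
            # Keep the unmatched left row; right-hand columns become None.
            joined.append({**dict.fromkeys(right_names), **left})
    if how in ("right", "outer"):
        for index, right in enumerate(right_rows):
            if index not in matched_right:
                # Keep the unmatched right row; left-hand columns become None.
                joined.append({**dict.fromkeys(left_names), **right})
    return joined


customers = [{"cust_id": 1, "name": "Ann"}, {"cust_id": 2, "name": "Bob"}]
orders = [{"order_cust": 1, "total": 50}, {"order_cust": 3, "total": 70}]

inner = join(customers, orders, "cust_id", "order_cust", "inner")
left = join(customers, orders, "cust_id", "order_cust", "left")
outer = join(customers, orders, "cust_id", "order_cust", "outer")
```

With this data the inner join returns only customer 1, the left join also keeps Bob with a null `total`, and the outer join additionally keeps the order for the unknown customer 3.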

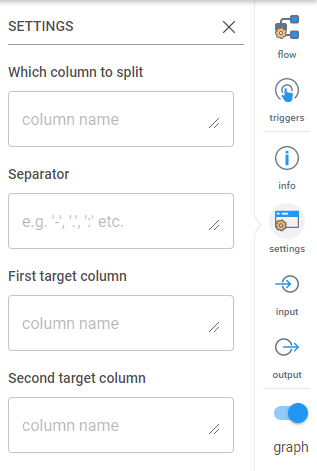

This transformation will split a single column into two on an identified separator. The settings are:

Which column to split: Type the name of the column here. Case sensitive.

Separator: The character that identifies where to split. Common ones are ‘-’, ‘,’ or ‘.’.

First target column: Name the first of the new columns

Second target column: Name the second of the new columns
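A minimal Python sketch of the split is shown below. The `split_column` helper is hypothetical, and two details are assumptions rather than documented behaviour: the split happens at the first occurrence of the separator, and the original column is kept.

```python
def split_column(rows, column, separator, first_target, second_target):
    """Split `column` at the first `separator` into two new columns."""
    result = []
    for row in rows:
        head, found, tail = str(row[column]).partition(separator)
        result.append({
            **row,
            first_target: head,
            # No separator in the value: leave the second column empty.
            second_target: tail if found else None,
        })
    return result


names = [{"name": "Lovelace,Ada"}, {"name": "Turing"}]
split = split_column(names, "name", ",", "last", "first")
```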

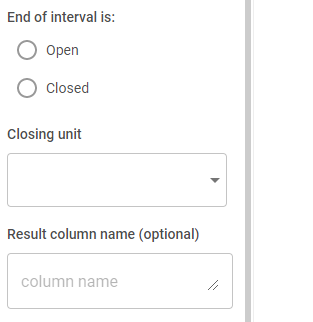

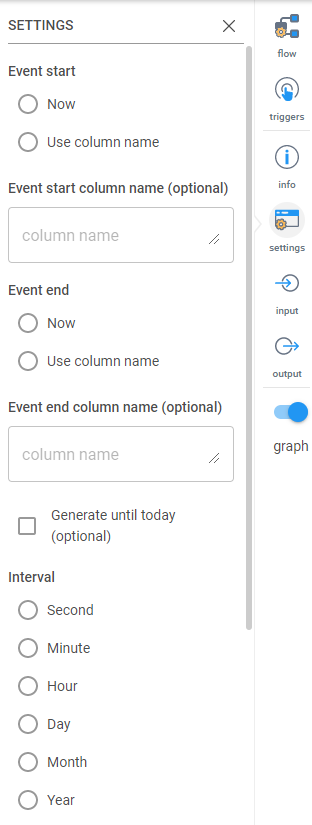

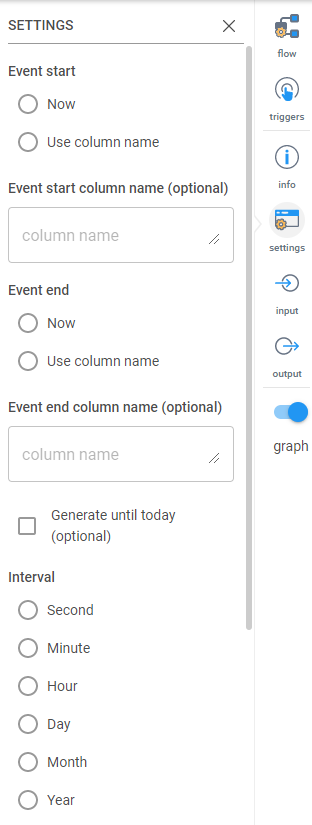

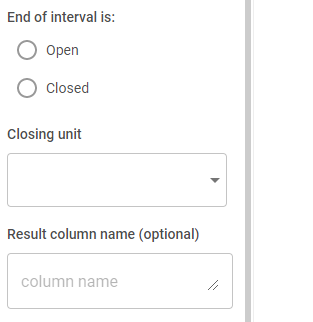

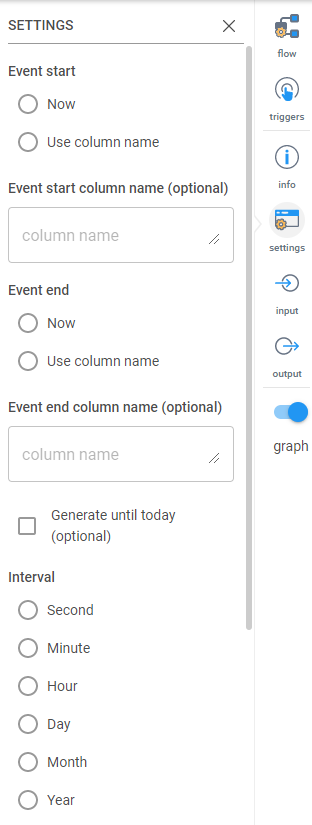

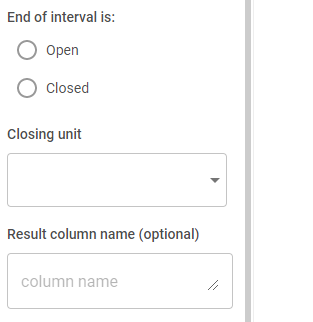

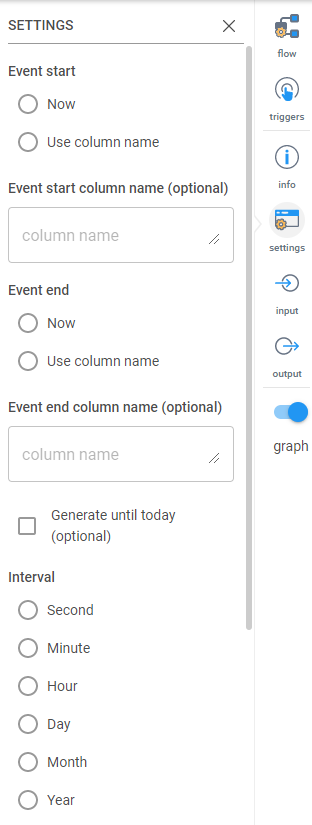

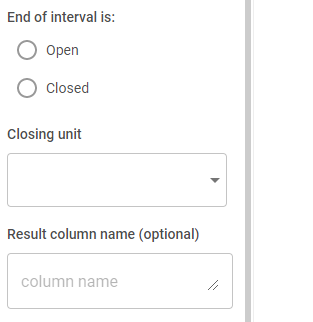

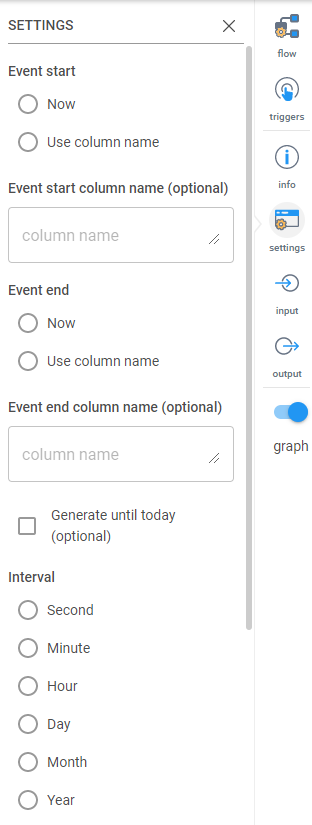

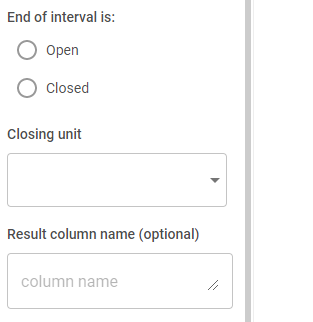

Convert a single event into data per time unit. Example: from an entry containing only a year, make entries for each month in that year. The settings are as follows:

Event start: This allows you to use today’s date or the column specified in the section below as the start date for the events replication

Event start column name (optional): If you chose ‘Use column name’ for the event start, you must specify the column name here.

Event end: This allows you to use today’s date or the column specified in the section below as the end date for the events replication

Event end column name (optional): If you chose ‘Use column name’ for the event end, you must specify the column name here.

Generate until today (optional): This will tell Inzata to only generate data until today

Interval: Select the time interval (Second, Minute, Hour, Day, Month, Year) that you would like to create.

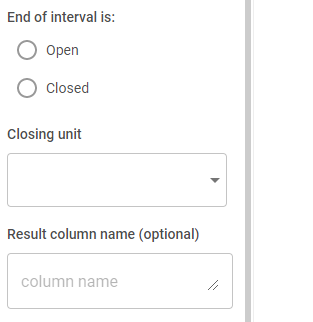

End of interval is: An open interval means that records continue to be created, whereas a closed interval means that every interval is closed out by some other column of data.

Closing Unit: This designates the unit of measure that closes out the replication, for example a given year, month, or day.

Result column name (optional): A name for the resulting replicated column
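The idea of replicating one event into one row per time unit can be sketched in Python for the Month interval. This is an illustration under assumptions: the helper names, the column names, and the choice to represent each month by its first day are hypothetical and may differ from what Inzata produces.

```python
from datetime import date


def month_starts(start, end):
    """Yield the first day of each month from start's month to end's month."""
    year, month = start.year, start.month
    while (year, month) <= (end.year, end.month):
        yield date(year, month, 1)
        month += 1
        if month > 12:
            year, month = year + 1, 1


def replicate_monthly(row, start_col, end_col, result_col):
    """Copy `row` once per month between its start and end dates."""
    return [
        {**row, result_col: period}
        for period in month_starts(row[start_col], row[end_col])
    ]


contract = {"id": 7, "start": date(2023, 11, 15), "end": date(2024, 2, 3)}
monthly = replicate_monthly(contract, "start", "end", "period")
```

The single contract row becomes four rows, one each for November, December, January, and February.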

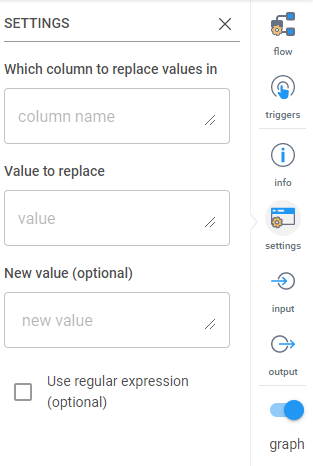

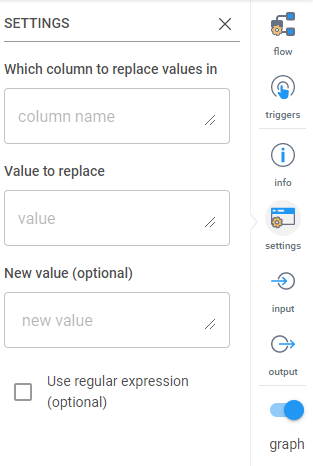

Use this to replace a specified value in a column. The settings are:

Which column to replace values in: Type in the name of the column that contains the values you want to replace.

Value to replace: Specify the value you want to replace. You can use regular expressions.

New value (optional): Specify the new value you want to take the place of the current value. If nothing is entered, it just deletes the value and leaves a blank.

Use regular expression (optional): Select this if you want to use regular expressions to identify the value to replace. Supports positive lookbehind.
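For readers unfamiliar with lookbehind, the following Python sketch shows a literal replacement and a regular-expression replacement that uses a positive lookbehind. The `replace_values` helper is hypothetical, and it assumes the match may be only part of a cell value; Inzata's regular-expression dialect may differ from Python's `re` module in details.

```python
import re


def replace_values(rows, column, old, new="", use_regex=False):
    """Replace `old` with `new` in `column`; `old` is a regex if requested."""
    pattern = old if use_regex else re.escape(old)
    return [
        # The lambda keeps `new` literal, so backslashes are not interpreted.
        {**row, column: re.sub(pattern, lambda _match: new, str(row[column]))}
        for row in rows
    ]


prices = [{"price": "USD 100"}, {"price": "EUR 250"}]

# Literal replacement of a fixed value.
renamed = replace_values(prices, "price", "EUR", "GBP")

# Positive lookbehind: replace digits only when preceded by "USD ".
masked = replace_values(prices, "price", r"(?<=USD )\d+", "N/A", use_regex=True)
```

The lookbehind `(?<=USD )` requires the text `USD ` to appear immediately before the digits without making it part of the replaced text, so only the number in the first row changes.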

This transformation removes the blank spaces from around values in a column. The settings are:

Which column to trim values in: Enter the column name you want to remove the spaces from.

Which side to trim:

• Left: Before the values

• Right: After the values

• Both: Before and after the values.

Bringing this transformation in lets you clone a column in your table.

Clones data from a given column into a new one. The column name can either be chosen from the available columns or entered as a regular expression (regex), allowing multiple columns to be cloned at once based on wildcards and pattern matches (for example, all columns whose names contain the string “sales”).

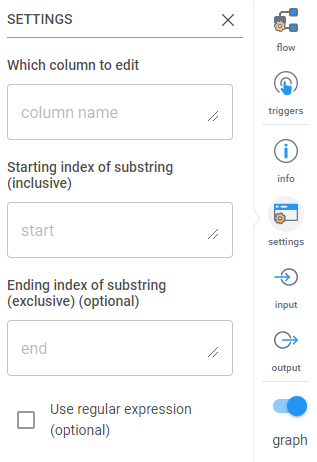

Substring applied on the values in the column or columns. Indexing from one. The settings are:

Which column to edit:

Starting index of substring (inclusive): This is 0 indexed meaning the first character in your string is character 0.

Ending index of substring (exclusive) (optional): This is also 0 indexed, meaning if you wanted to remove everything after the 5th character you would enter “6” here as the 6th character onward will be removed.

Use regular expression (optional): If this box is checked you can use regex to define the starting and ending indexes of the columns identified.

Perform operations on columns. The settings are:

Operations to perform:

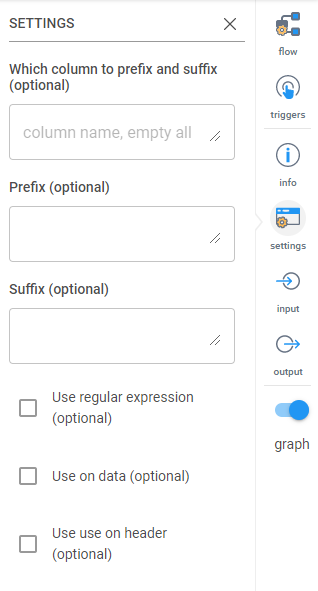

This will add a prefix or suffix to the headers or the data in a column. The settings are:

Which column to prefix and suffix (optional): Type the column name(s) here. Separate columns by a comma (,). If this is left blank, the default is all columns.

Prefix (optional): If adding a prefix, type it here. Can be left blank.

Suffix (optional): If adding a suffix, type it here. Can be left blank.

Use regular expression (optional): Select this if you’d like to use regular expressions to identify the column names.

Use on data (optional): Select this if the prefix/suffix is being added to the data in the identified column(s).

Use on header (optional): Select this if the prefix/suffix is being added to the column headers.

This will perform a SQL-like SELECT with a given WHEN – THEN case. Example: “WHEN Quantity Ordered > 40 THEN ‘a’ WHEN Quantity Ordered > 30 THEN ‘b’ ELSE ‘c’”

The settings are:

Column to store result: Name of the column the data will be stored in

SQL Case (optional): Write your WHEN … THEN … statement here. Make sure column names are spelled correctly (they are case sensitive) and that the values you want entered are in single quotes.
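The example case above reads top to bottom, and the first condition that matches wins. The same logic written as a Python function looks like this; `quantity_band` and the `Band` result column are hypothetical names used only for illustration.

```python
def quantity_band(quantity_ordered):
    """Python equivalent of the WHEN ... THEN ... ELSE example above."""
    if quantity_ordered > 40:
        return "a"
    if quantity_ordered > 30:
        return "b"
    return "c"


orders = [
    {"Quantity Ordered": 45},
    {"Quantity Ordered": 35},
    {"Quantity Ordered": 10},
]
banded = [
    {**row, "Band": quantity_band(row["Quantity Ordered"])} for row in orders
]
```

A quantity of 45 satisfies both conditions but receives ‘a’ because the first matching WHEN is used.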

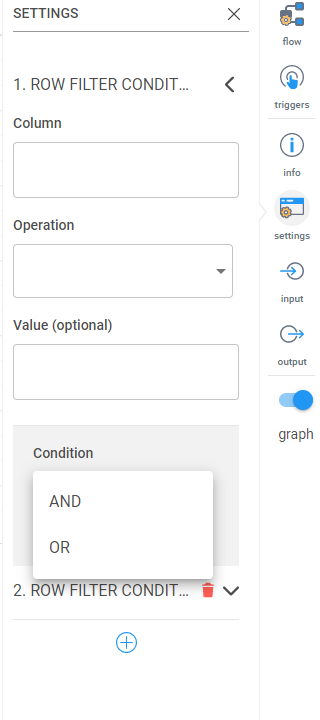

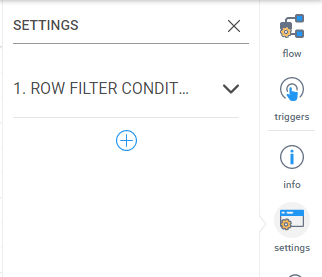

This is similar to the Row Filter transformation, but it allows you to perform multiple row filters on multiple columns combined with AND or OR conditions.

The settings by default look like below. Click the arrow on the right to expand down.

After clicking the arrow, you’ll see the following options.

The basic settings for the first row filter are the same as the Row Filter transformation:

Column: The name of the column that contains the values you want to filter on.

Operation: Equal (==), not equal (!==), greater than (>), less than (<), less than or equal to (<=), greater than or equal to (>=), startsWith and endsWith.

Value (optional): Type in the value you want to perform with the operation (example, typing in a 100 while the == is selected for Operation will look for values that are exactly equal to 100). If left blank, ___________

After filling in the above fields, click the plus (+) sign to add a new row filter condition.

First it will prompt you to select if you want the second filter as an AND (both must be correct) or OR (meet one or both conditions).

Then a second row filter condition will appear with the same options as the first row filter.
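Combining conditions with AND or OR can be sketched in Python as follows. The `COMPARATORS` table and the `matches` helper are hypothetical, and the sketch assumes conditions are evaluated strictly left to right, which may not match how Inzata groups them.

```python
import operator

# Hypothetical mapping from the operations listed above to Python callables.
COMPARATORS = {
    "==": operator.eq,
    "!==": operator.ne,
    ">": operator.gt,
    "<": operator.lt,
    "<=": operator.le,
    ">=": operator.ge,
    "startsWith": lambda value, text: str(value).startswith(str(text)),
    "endsWith": lambda value, text: str(value).endswith(str(text)),
}


def matches(row, conditions):
    """Evaluate (connector, column, operation, value) tuples left to right.

    The connector of the first condition is ignored.
    """
    result = None
    for connector, column, operation, value in conditions:
        outcome = COMPARATORS[operation](row[column], value)
        if result is None:
            result = outcome
        elif connector == "AND":
            result = result and outcome
        else:  # "OR"
            result = result or outcome
    return bool(result)


sales = [
    {"region": "East", "amount": 150},
    {"region": "West", "amount": 200},
    {"region": "East", "amount": 50},
]
both = [(None, "amount", ">", 100), ("AND", "region", "startsWith", "E")]
either = [(None, "amount", ">", 100), ("OR", "region", "==", "East")]

kept_and = [row for row in sales if matches(row, both)]
kept_or = [row for row in sales if matches(row, either)]
```

With AND only the first row passes both conditions; with OR all three rows pass at least one.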

Generate a primary key for the table. This can be used to combine multiple column values together to create the primary key. The settings are:

New PK column name (optional): If you’d like to name the column, type it here.

Generate from columns (optional): When selected, this will prompt you to enter the column names to be used to generate the primary key. (Shown below)

Which columns to use (optional): This will only show if the “Generate from columns” box is checked. List the column header names in the order you want them combined. Use a comma to separate the names.
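One way to picture the two modes is the Python sketch below. It rests on assumptions that the documentation does not confirm: that a key generated from columns is the column values joined in the listed order (here with a `|` separator), and that a key generated without columns is a running row number. `add_primary_key` is a hypothetical helper.

```python
def add_primary_key(rows, pk_name="pk", columns=None, separator="|"):
    """Add a key column built from `columns`, or a serial number if none."""
    keyed = []
    for number, row in enumerate(rows, start=1):
        if columns:
            # Assumed behaviour: join the values in the order listed.
            key = separator.join(str(row[name]) for name in columns)
        else:
            # Assumed behaviour: simple serial key 1, 2, 3, ...
            key = number
        keyed.append({pk_name: key, **row})
    return keyed


visits = [
    {"store": "S1", "day": "2024-01-01"},
    {"store": "S2", "day": "2024-01-01"},
]
composite = add_primary_key(visits, "row_id", ["store", "day"])
serial = add_primary_key(visits)
```

Whichever columns are combined, the resulting key is only useful if the combination is unique for every row.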

Use this transformation to delete a column from the data being loaded. The settings are:

Which columns to delete: Enter in the name(s) of the column(s) you would like to delete. Multiple columns may be identified in one Delete Column transformation.

Use regular expression (optional): Use regular expressions to identify the column names.

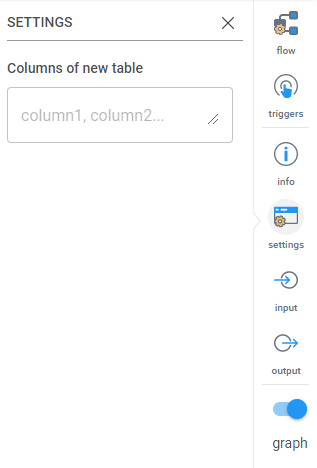

Use this transformation to select the columns you want to keep in your dataset. If a column name is not mentioned here, it will be deleted and not loaded in. If a column is named that doesn’t exist in the original data set you’re loading in, it will be created with null values.

You can also use this to rearrange your data set. The order in which column names are listed will be the order of the data set coming out of the transformation.

The settings are:

Columns of new table: Enter the names of the column headers you want to keep. Separate names by comma (,). List names in the order you want the output data set in.
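The three effects described above (dropping unlisted columns, creating missing ones with nulls, and reordering) can be seen together in this short Python sketch. `select_columns` is a hypothetical helper, not an Inzata function.

```python
def select_columns(rows, columns):
    """Keep only `columns`, in the given order; missing ones become None."""
    return [{name: row.get(name) for name in columns} for row in rows]


table = [{"a": 1, "b": 2, "c": 3}]
selected = select_columns(table, ["c", "a", "d"])
```

Column `b` is dropped, `c` now comes before `a`, and `d` is created with a null value because it does not exist in the input.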

Convert date and times to a uniform format. The settings are:

Which column to format: Enter the name of the column that contains the date.

Input date format (optional): Enter the format of the dates that you’d like to change. If left blank, Inzata will auto detect the format.

Choose output format: Options are:

• Date format

• Time format

• Date-Time format

• Custom output format: If this is selected, you must enter in the custom format you want in the next box.

For the full list of how to enter in dates, see this link (https://momentjs.com/docs/#/displaying/format/).

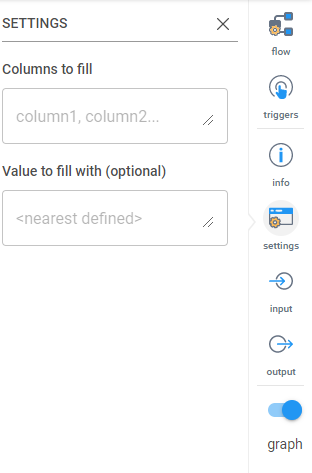

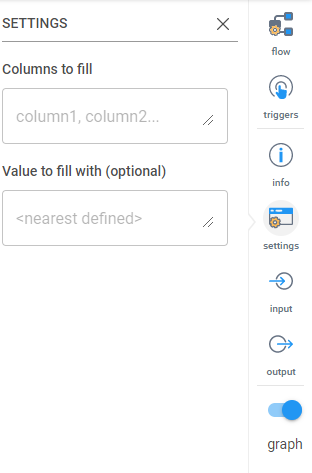

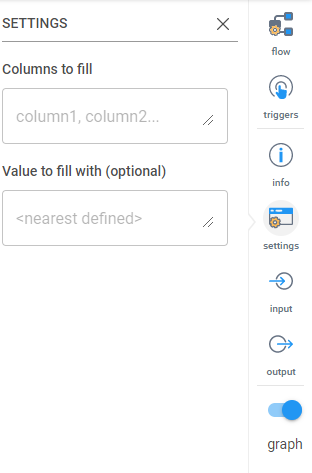

Fill null values in the specified column. The settings are as follows:

Columns to fill: Specify the names of the columns you want to check for blank/null values.

Value to fill with (optional): Specify the value you want to fill in the blank entries. If nothing is specified, the preceding value will be used to fill in the blank.
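The two fill modes can be sketched in Python as follows. `fill_nulls` is a hypothetical helper, and the sketch assumes that both `None` and empty strings count as blank and that a run of consecutive blanks all receive the same preceding value.

```python
def fill_nulls(rows, column, fill_value=None):
    """Fill blanks with `fill_value`, or with the preceding value if omitted."""
    filled = []
    previous = None
    for row in rows:
        value = row[column]
        if value is None or value == "":
            value = fill_value if fill_value is not None else previous
        previous = value
        filled.append({**row, column: value})
    return filled


regions = [
    {"region": "East"},
    {"region": None},
    {"region": ""},
    {"region": "West"},
    {"region": None},
]
carried = fill_nulls(regions, "region")
fixed = fill_nulls(regions, "region", "Unknown")
```

Without a fill value, each blank takes the value from the row before it; with a fill value, every blank receives that value instead.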

Splits multiple values in one column into rows, copying the rest of the row data. The settings are:

Which column contains more values: Enter the name of the columns you want to split into rows.

Values separator (optional): Indicate the character that is separating the values. This is optional.
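A minimal Python sketch of this row expansion is shown below. `split_to_rows` is a hypothetical helper, and the comma default and the trimming of surrounding spaces are assumptions made for the example.

```python
def split_to_rows(rows, column, separator=","):
    """Emit one row per value in `column`, copying all other columns."""
    expanded = []
    for row in rows:
        for part in str(row[column]).split(separator):
            expanded.append({**row, column: part.strip()})
    return expanded


tagged_orders = [
    {"order": 1, "tags": "red, blue"},
    {"order": 2, "tags": "green"},
]
expanded = split_to_rows(tagged_orders, "tags")
```

Order 1 becomes two rows, one per tag, while order 2 is left as a single row.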

Filters your data based on a value in a column. Only rows that meet the condition will be returned. The settings are:

Column: The name of the column that contains the values you want to filter on.

Operation: Equal (==), not equal (!==), greater than (>), less than (<), less than or equal to (<=), greater than or equal to (>=), startsWith and endsWith.

Value (optional): Type in the value you want to perform with the operation (example, typing in a 100 while the == is selected for Operation will look for values that are exactly equal to 100). If left blank, ___________

This transformation will add a column that inserts a timestamp when data is loaded in or changed. The settings are:

Timestamp column name: Name of the new column that will contain the timestamp.

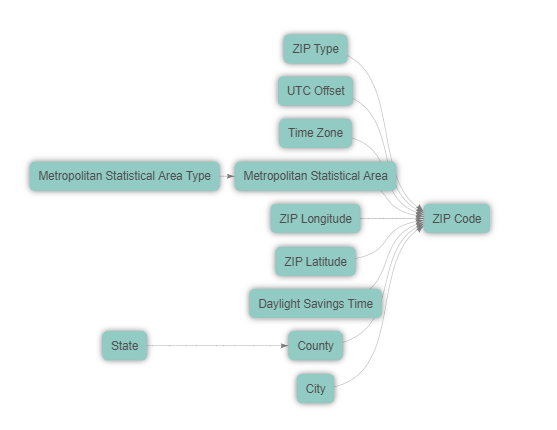

An enrichment, also referred to as a package, is a pre-prepared cluster that can be connected into your project. Inzata automatically explores all the attributes of a project’s data model and compares them with the contents of the Inzata Object Marketplace. Only compatible attributes are listed for integration. The matching algorithm compares the format of the lookup elements with the element formats of the dimensions available in the marketplace. The resulting list of compatible dimensions and packages is a set of recommendations only: a user must approve each one before it is connected into a project’s data model.

There are two types of packages: a dimension enrichment and a cluster with any facts enrichment.

• Dimension enrichment

It is the most commonly used type. It is a dimension which consists of attributes with logical relations. Here is an example of the Geographical dimension:

Figure 18: An enrichment example – geospatial enrichment

The picture shows that the “ZIP Code“ attribute is a primary key of this dimension package. This attribute will be used as a dimension join attribute to integrate this whole dimension into your cluster containing the zip code attributes (the column in the source file).

Load your base cluster first and then join the Geospatial dimensional package into this cluster. The cluster join attribute (ZIP Code) is offered by Inzata’s data analysis package matching.

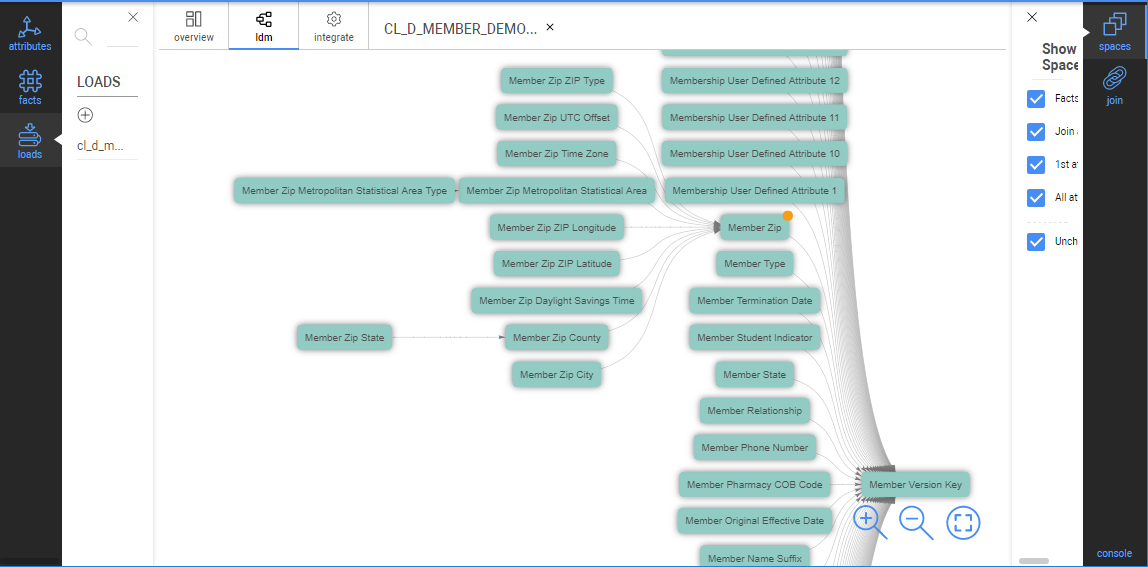

Figures 18, 19, and 21 together are an example of the connection of a Geographical dimensional package into the Member cluster using the “Member Zip“ join attribute.

Figure 19 is the part of an LDM — the Member cluster — before a connection:

Figure 19: Identify the “To Attribute” that the geospatial enrichment is connecting to

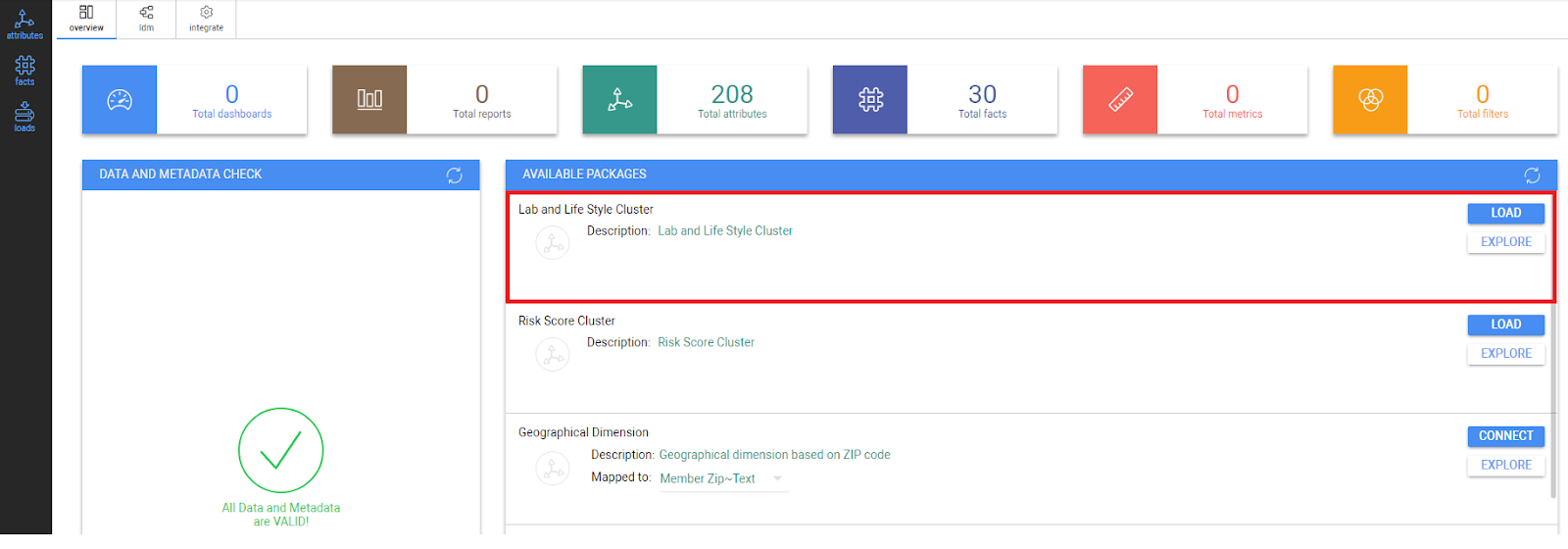

Click on the Overview tab and then press the “Load“ button in the Available Packages window to display all available packages. 4. Select the object and drop it into the To Object section of the Hierarchy panel in the same way.

Figure 20: The geospatial enrichment displayed on the Overview tab of InModeler

The Inzata marketplace only shows the packages that can be mapped to an attribute in integrated clusters. Keep in mind that a join cluster attribute has to be defined with a label. When setting load parameters up, the join cluster attribute has to be set as an Attribute-String. Otherwise a package will not be displayed (offered) in Available Packages for possible integration.

A dimension package has the “Mapped To“ property as a list of offered join attributes from all the integrated clusters. Select one attribute from this list where a dimension will be joined to.

Click on the “Explore“ button to display all the information about such a package in the Inzata marketplace panel of the Overview tab.

Click on the “Connect“ button to connect the enrichment to your cluster or data model. Confirm the next button to start the join process up. The package is then immediately integrated into your LDM and subsequently into the physical layer.

As soon as the join process and the Data and metadata check are finished (the spinning circle in the upper right-hand corner disappears), you can click the LDM tab to display the result of your join process.

Figure 21: The LDM after enrichment connected

After a package join is done, the join attribute has the same name as the join cluster attribute. All the attributes in the dimensional hierarchy will be renamed using a role name.

In our example, the “ZIP Code“ attribute from the package is substituted by the “Member Zip“ attribute from the Member cluster. All the dimensional attributes are renamed with the “Member Zip“ prefix.

• Cluster with any facts enrichment

These are clusters with facts which are not usually used for denormalization into any other cluster.

Figure 22: Lab and Lifestyle Cluster displayed in the Overview Tab

The steps to join two such clusters are as follows:

1. Press the “Load“ button.

2. Confirm this action with the next button to start the join process

3. After the join process and the Data and metadata check are performed, you can look at the LDM tab to check the result of your integration process.

4. Such a cluster may remain a standalone one, or, if a user wants to integrate it into the existing enterprise LDM, it can be joined via a common attribute using the join process described above (“Creating new join”).

For greater detail on joining two clusters, please refer to the “Join of Loads” article.

Figure 5: Loads panel and part of the overview tab

Left Toolbars

Attributes

As shown in figure 6, in the left toolbar, click on the attributes tab to open the Attributes panel. Click on the button or the attribute name to show all the labels of an attribute.

Click on the button next to the label name to show its values.

Facts Toolbar

In the left menu, click on Facts to open the Facts panel, which shows all created facts. Move the cursor over a fact name and click the icon to open a new tab with the fact’s properties.

Creating a folder to organize your Facts and Attributes

All objects in your Inzata project can be grouped into folders. A folder can contain different types of objects, such as attributes, metrics, etc. Folders are usually used to group all objects of one cluster or to logically organize objects such as “basic metrics”, “percentage metrics”, “date attributes”, etc. A folder can be created in the list of any object, e.g. the Attribute list, by clicking on the Add folder button at the bottom of the Attribute or Fact panels.

From there, you can give the new folder a name. There is also the option to save the new folder as a Global Folder. Saving it as a Global Folder will make the new folder appear in other modules of Inzata (InBoard, InViewer). If you do not save it as a Global Folder, the folder will only appear in the panel in InModeler.

Click the Create button to finalize and save the new folder, which is then added to the top of the Attribute and Fact panels as shown in figure 8.

Figure 8: Create New Folder dialogue

To add items to folders, drag any object (attribute or fact) into the folder and drop it on the folder name. The added object moves from the main object list and will now appear in the folder.

Double-click the folder name to show the folder properties, as seen in figure 9.

Figure 1 (repeated): The InModeler Overview page.

In figure 1, the colored boxes displayed horizontally near the top of the webpage show the count for each aspect of a given Inzata project. Below that count bar, there are three windows:

• Loads List

A list of all loads

• Data and Metadata Check

The physical and logical models are automatically checked and the results are shown in this window. This can be used to debug errors that may appear as a data model is created.

• Available Packages

This is described in the “Connecting a package into a cluster” section below.

Logical Data Model (ldm tab)

Figure 10: Logical Data Model tab

This tab displays the logical data model of a project. The model (LDM) shows the relationships among attributes and facts as they are defined in the project’s metadata. The lower level (right side) of a cluster is represented by facts while the second level (middle) is represented by the primary keys of the clusters. The other attributes displayed on the highest level (left side) are related to the primary key that they are connected to by lines.

The blue boxes denote facts and the green ones are attributes; the dark green-accented boxes are the primary keys in their respective clusters. Hovering the cursor over a graphic symbol and then clicking will highlight an object’s relations. A user can click on a relation to keep it highlighted in a model (useful when scrolling in a larger LDM to follow the link between objects). Your model can be zoomed in or out using the mouse scroll wheel or the buttons in the tab at the bottom of the screen.

The right side of your screen contains the Properties Type toolbar:

Spaces

The Spaces menu defines the levels of model display. It can be used to filter objects by type.

Join

The Join menu is used to define join attributes between 2 clusters, when integrating originally separated clusters (source files) into one unified, enterprise LDM. This action is described in the “Join of loads” section below.

Hierarchy

This tool allows you to place two attributes into a hierarchy (Parent-Child) relationship. Simply drag the parent attribute into the parent location, and the child attribute beneath it. You can create multi-level hierarchies as well by using the child attribute of one hierarchy as the parent of another. These relationships will be displayed as a horizontal row in the data model.

Conversion

This allows you to switch an individual object’s classification from Attribute to Fact, or from Fact to Attribute.

Relation

This tool allows you to check whether a relationship exists between two objects, for example before creating a manual join.

Objects (objects tab)

The objects page in figure 11 displays all the metrics, facts, and attributes in a given project, along with different characteristics of those objects. The different columns in order of left to right are:

Checklist

Checklist for selecting objects. These selected objects can then be shared so that different users can access them by using the button in the panel on the right, under the word “selected”.

Name

The name assigned to a given object to help the users identify the object.

Ident

The ID that is given either by the system or the user to an object that distinguishes it from the other objects.

Type

The type of an object describes its purpose or usage within the project, e.g. attribute, fact, metric, dashboard.

Creator

This is the user’s name who created the object initially.

Clusters

A group of objects associated with a file source. If two or more clusters are integrated by joining, then this column will list all the clusters that an object is related to. It is possible that all the clusters are integrated into one cohesive data model, so that every attribute, fact, and metric can relate to every other.

Modified

This will list the last date and time that an object was modified.

Acl

The Access Control List shows the different users that have access to an object.

Description

The system- or user-assigned description of a given object. Not every object needs a description, for example when the name of the object already describes it clearly, accurately, and sufficiently.

Figure 3: New Data Load Cluster Profiling window

Primary Key Selection

The primary key column is a very important column in a dataset. It should contain a unique value for each individual row, in a format that is consistent across every row. An example would be a simple integer serial or “count” key: (1,2,3,4,5,…n). If none of the columns here seems suitable as a primary key, you may wish to go back to InFlow and use the Primary Key transform to add a new primary key column to your dataset, then run the InFlow again to update the data load.
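Before choosing a primary key, it can help to check candidate columns outside Inzata. The following is a minimal sketch in Python and is not part of Inzata; the function name `primary_key_candidates` and the sample data are hypothetical. It reports which columns of a delimited text file hold a non-empty, unique value in every row.

```python
import csv
import io


def primary_key_candidates(csv_text, delimiter=","):
    """Return the column names whose values are non-empty and unique in every row."""
    reader = csv.DictReader(io.StringIO(csv_text), delimiter=delimiter)
    columns = reader.fieldnames or []
    seen = {name: set() for name in columns}
    valid = {name: True for name in columns}
    for row in reader:
        for name in columns:
            value = row[name]
            # An empty, missing, or repeated value disqualifies the column.
            if value is None or value == "" or value in seen[name]:
                valid[name] = False
            else:
                seen[name].add(value)
    return [name for name in columns if valid[name]]


sample = "order_id,customer,amount\n1,Alice,10.50\n2,Bob,7.25\n3,Alice,10.50\n"
print(primary_key_candidates(sample))  # ['order_id']
```

A column that passes this check on a small sample may still contain duplicates in the full data, so treat the result as a hint rather than a guarantee.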

Below the Primary Key, you can set the following options for each column in the data load.

Inzata reads in the column headers and records them in the Column Name field. These cannot be modified. Inzata’s AI inspects the contents of each column and detects the most likely column type (attribute, fact, date, or ident). You can change these if you wish.

Label – Inzata lets you create a custom label or alias for each column. The original column name is recorded, and the new label is how that column will be identified inside Inzata. A good use for labels is to give columns a friendlier name that users will recognize more easily. The value defaults to the original Column Name. Labels can contain spaces, but double quotes and apostrophes cannot be used in object names.

Tips for using labels:

When a column is the same within different source files, e.g. “Customer” in both the “Order” and “Payment” clusters, you can use the same name in all source files. Then the same attribute will be generated in a project, and it will be part of several clusters.

On the other hand, when a column appears in multiple source files but will be used to join two clusters (using the join function under the LDM tab in the top left), use different names in the associated source files.

id – the identifier for a column of data or metadata object (mandatory value). An ID must be unique within an Inzata project. The id is automatically derived from the column’s name by replacing invalid characters with an underscore character. You can modify it in the edit box where the name is listed in figure 3.

An identifier can be at most 255 characters long. The only allowed characters are “A-Z”, “a-z”, “0-9”, and “_”. ID values are copied from the header of a CSV file, and you can modify them in this edit box. We recommend using the prefix “a_” for attributes and “f_” for facts. Please see Inzata Standard prefix (check box).
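The id rules above can be sketched in a few lines of Python. This is an illustration only, not Inzata’s actual implementation: the function name `derive_id` is hypothetical, and details such as whether Inzata collapses repeated underscores or changes letter case are not stated in this guide, so the sketch does neither.

```python
import re

MAX_ID_LENGTH = 255  # maximum identifier length stated in this guide


def derive_id(column_name, prefix=""):
    """Replace every character outside A-Z, a-z, 0-9 and _ with an underscore."""
    cleaned = re.sub(r"[^A-Za-z0-9_]", "_", column_name)
    # Optionally add a prefix such as "a_" or "f_", then enforce the length limit.
    return (prefix + cleaned)[:MAX_ID_LENGTH]


print(derive_id("Order Date"))            # Order_Date
print(derive_id("Unit Price ($)", "f_"))  # f_Unit_Price____
```

Running a check like this over a file header before loading makes it easier to spot two columns that would end up with the same id.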

Similar to the names, when a column is the same within different source files, e.g. “Customer” in both the “Order” and “Payment” clusters, use the same id in all source files. Then the same attribute will be generated in a project and it will be part of several clusters.

On the other hand, when a column appears in multiple source files but will be used to join two clusters (using the join function), use different ids in the associated source files.

Column type – there are 4 possible values: Attribute, Fact, Skip (the column is not processed), and Label (please see Rules for CSV file).

Attribute:

When Attribute is selected, the Name and id items (described above) are used as the Attribute Name and the Attribute id. There are 5 possible data types for a column, which are detailed below. If the “String” data type (described below) is selected together with Attribute, this column from the data layout is defined as the label with the default name “Text”. You can rename this label and change its id by clicking on the Attribute Name; the Right Properties Panel then appears for this Column Option.

Note: This column is used as the primary key for the attribute definition. This means that a unique id is generated for the attribute for each unique value in this column.

Data type – there are the following possible data types for each Attribute column:

• String

• Date

• Date Time

• Time

• Ident

Ident is a special type defined as a numeric one. The value is directly used as an identifier of an attribute and thus identifiers are not generated in a project. These attributes have no labels (e.g. codes, descriptions).

When any of the other data types is selected, the identifier values are generated in Inzata format and the CSV values are stored as a TEXT label of the attribute.

For the Date and Date Time data types, the format of the source data is displayed on the next row.

Label:

When Label is selected the Name and id items (described above) are used as the Label Name and the Label id. You also have to assign which attribute the label belongs to. Select from the Attribute Name list which is displayed below the Column Type. The previous attribute is pre-selected.

Fact:

The data type for a fact is always Numeric. Facts have additional settings for the number of decimal places. This is the number format used in the Inzata environment. For instance when you select the “0.00” numeric format, then your number 1.1234 will be loaded as 1.12. Use the “0” selection for integer numbers.
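The effect of the decimal-place setting can be reproduced with Python’s `decimal` module. This is a hedged sketch rather than Inzata’s own logic: the function name `apply_numeric_format` is hypothetical, and the rounding mode (half up) is an assumption, since this guide only gives the example of 1.1234 becoming 1.12.

```python
from decimal import Decimal, ROUND_HALF_UP


def apply_numeric_format(value, numeric_format):
    """Round value to the number of decimal places shown in a format such as "0.00"."""
    # Decimal("0.00") has two decimal places; Decimal("0") has none.
    # ROUND_HALF_UP is an assumption, not documented Inzata behavior.
    return Decimal(value).quantize(Decimal(numeric_format), rounding=ROUND_HALF_UP)


print(apply_numeric_format("1.1234", "0.00"))  # 1.12
print(apply_numeric_format("1.1234", "0"))     # 1
```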

Skip:

The final column type is Skip. It tells InModeler to disregard the column when uploading to a project. The AI does not select this type when a new file is uploaded, so on a new upload it is usually used only when the user selects it manually.

To set more detailed parameters, click “show advanced options”, as shown in figure 4:


Figure 4: New Data Load Advanced Options

Inzata Standard prefix (check box)

The standard identifiers of attributes are strings with the prefix “a_”; for facts, the prefix is “f_”. The identifier of an object has to be unique within an Inzata project. These prefixes help keep metadata consistent in the Inzata project. When this box is checked, the prefixes are automatically added to the ID strings.

File Encoding

Use this option to set the encoding of your source text file.

Create _____ metrics from ___ checkboxes

Inzata can autogenerate commonly used aggregation metric formulas from facts and attributes. For facts, available metrics are: SUM, AVG, MIN and MAX. For Attributes, it can generate COUNT metrics. Once generated, these will appear automatically in the Metrics menu in InBoard.
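To get a feel for how many metrics these checkboxes produce, the rule above can be written out as a short Python sketch. The function name `generated_metrics` and the `SUM(Amount)` display style are illustrative assumptions; they do not reflect Inzata’s metric naming or formula syntax.

```python
FACT_AGGREGATIONS = ("SUM", "AVG", "MIN", "MAX")
ATTRIBUTE_AGGREGATIONS = ("COUNT",)


def generated_metrics(facts, attributes):
    """List (aggregation, object) pairs for the auto-generated metrics."""
    pairs = [(agg, fact) for fact in facts for agg in FACT_AGGREGATIONS]
    pairs += [(agg, attr) for attr in attributes for agg in ATTRIBUTE_AGGREGATIONS]
    return pairs


# One fact yields four metrics; one attribute yields one.
for aggregation, obj in generated_metrics(["Amount"], ["Customer"]):
    print(f"{aggregation}({obj})")
```

With every checkbox selected, a load with many facts creates four metrics per fact, so it may be worth selecting only the aggregations you expect to use.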

After setting the parameters, press the “Complete Load“ button.


An Inzata data model is an integrated structure that associates and connects your different datasets in Inzata to support your analytics. Logical Data Models are characterized by the logical “Join” relationships between associated fields that allow all of your datasets to function as a cohesive whole.

Joins within a Data Model allow your users to use fields from nearly anywhere in the data model in their reports and dashboards, and enable you to answer much more advanced questions than you could when the data was separated.

Tips for creating new join relationships

• Both data clusters must contain a common data column with the same distinct values. The names of the two join attributes must be different.

• The join attribute for the “from” dimension cluster has to be defined as a primary key. This means that the values in this column are unique in every row, and the column must be tagged as a primary key within Inzata.

• Both join attributes have to be created with text labels (e.g. Client ~Code, Client Payment ~Code). These labels are used for joining.

Figure 12: Illustration of join rules
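The first two rules can be checked on sample data before attempting a join. The sketch below is a hypothetical helper, not an Inzata function; in particular, treating “the same distinct values” as “every value in the target column also exists in the primary key column” is an interpretation of the rule, not something this guide states.

```python
def check_join(from_name, from_values, to_name, to_values):
    """Return a list of problems that would break the join rules; empty if none."""
    problems = []
    if from_name == to_name:
        problems.append("join attributes must have different names")
    if len(set(from_values)) != len(from_values):
        problems.append("'from' attribute is not unique, so it cannot be a primary key")
    # Assumption: every value on the "to" side should exist on the "from" side.
    unmatched = set(to_values) - set(from_values)
    if unmatched:
        problems.append(f"values missing from the 'from' attribute: {sorted(unmatched)}")
    return problems


clients = ["C1", "C2", "C3"]   # dimension cluster, primary key
payments = ["C1", "C1", "C3"]  # target cluster, repeated values are fine
print(check_join("Client Code", clients, "Client Payment Code", payments))  # []
```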

Creating a new join

When you integrate two loads into a single project, you can join them while following the rules described above.

There are two ways to join loads: suggested or manual. To join two loads or clusters, follow these instructions in the InModeler module:

1. Click on the ldm tab.

2. Click on the Join Panel in the Right Properties Type Menu to open the Join panel.

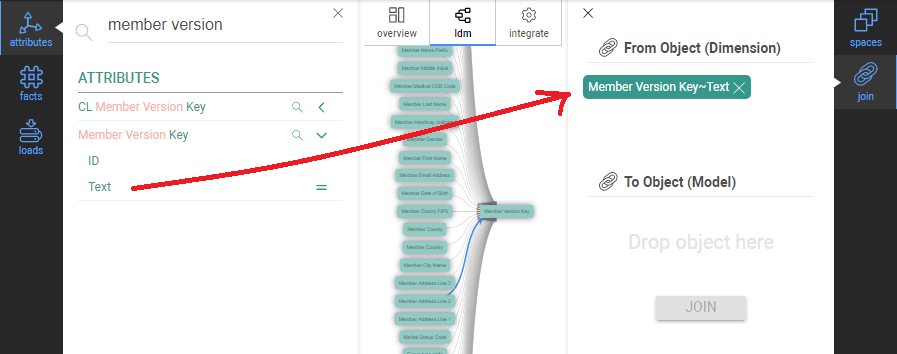

3. In the left menu, click on attributes to open the Attributes panel. Select the attribute from the dimension cluster (primary key) and click the < button to show the labels of the attribute.

4. Drag the label to be used for the join to the From Object section of the Join panel and drop it there.

Figure 13: Drag primary key attribute text label to the “From Object” area on the right properties panel

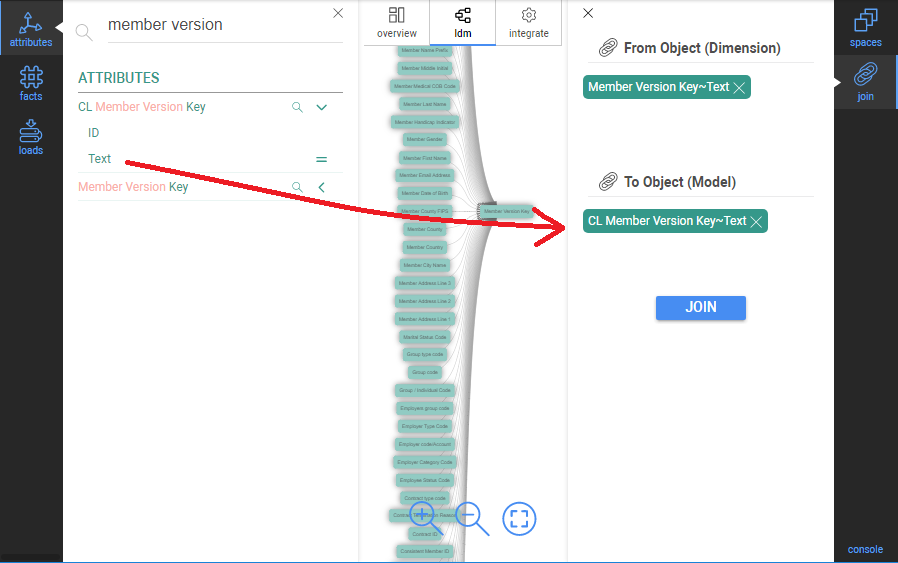

5. Select the join cluster attribute and drop it into the To Object section of the Join panel in the same way.

Figure 14: Drag second attribute text label (not a primary key) to the “To Object” area on the right properties panel

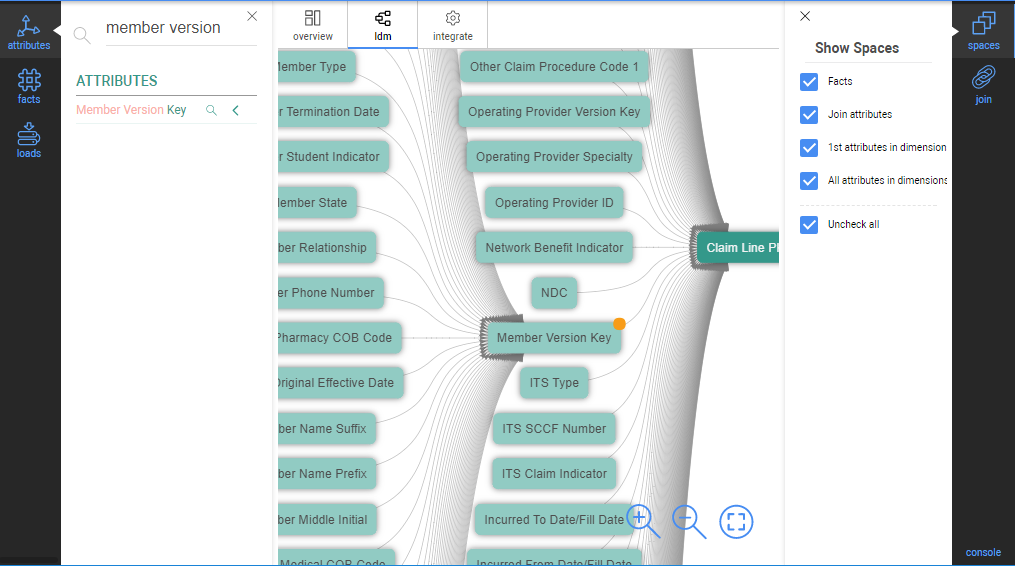

6. Press the Join button. The LDM graph is automatically refreshed. The graph symbol of the join attribute changes (an orange corner is added). The join dimension attribute is connected to the target cluster, and the join cluster attribute (in the target cluster) is removed.

In our example, the “CL Member Version Key“ was substituted by the “Member Version Key“.

A hierarchy represents the logical relations among attributes. A typical example is the date hierarchy: a date belongs to one month, and a month contains many dates (a 1:n relation). The same kind of relation holds between month and quarter, and between quarter and year.

The Hierarchy tool allows you to place two attributes into a hierarchy (Parent-Child) relationship. Simply drag the parent attribute into the parent location, and the child attribute beneath it. You can create multi-level hierarchies as well by using the child attribute of one hierarchy as the parent of another. These relationships will be displayed as a horizontal row in the data model with the highest level of the hierarchy to the left, and lowest level, to the right.
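Whether two attributes really form a 1:n parent-child relation can be tested on sample values before building the hierarchy. The following Python sketch is illustrative only; `is_one_to_many` is a hypothetical helper, and the month-to-date data is made up for the example.

```python
from datetime import date


def is_one_to_many(pairs):
    """True if every child value maps to exactly one parent value."""
    parent_of = {}
    for parent, child in pairs:
        # setdefault stores the first parent seen for a child and returns it;
        # a different parent later on means the relation is not 1:n.
        if parent_of.setdefault(child, parent) != parent:
            return False
    return True


dates = [date(2024, 1, 15), date(2024, 1, 31), date(2024, 2, 1)]
# (parent, child) pairs for the month -> date level of a calendar hierarchy.
month_date = [((d.year, d.month), d) for d in dates]
print(is_one_to_many(month_date))  # True
```

A pair of attributes that fails this check, such as a customer who appears under two regions, is not a clean hierarchy.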

Figure 16 shows an example of a naturally occurring hierarchy, a calendar/date hierarchy.


Figure 16: Example of hierarchy

You can create a new hierarchy among the loaded attributes:

1. Click on the ldm tab.

2. Click on the Hierarchy Panel in the Right Properties Type Menu to open the Hierarchy panel.

3. In the left menu, click on attributes to open the Attributes panel. Select the attribute to be used for the hierarchy, drag it to the From Object section of the Hierarchy panel, and drop it there.


4. Select the object and drop it into the To Object section of the Hierarchy panel in the same way.


5. Click the Join button.

The result view after the Join is shown here:


Figure 17: Example of Hierarchy after a join

To remove or reverse a hierarchy, select any of its elements, then use the Break button.


There are multiple ways text-based data files (CSV, TSV, Pipe delimited) can be formatted. To ensure a seamless experience loading your text-based data file into Inzata, we recommend following the guidelines below:

Text files delimited by one of the following characters are supported:

• Comma

• Semicolon

• Tab

• Tilde

• Pipe
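If you are unsure which delimiter a file uses, a quick check of the header row usually settles it. The sketch below uses only the Python standard library; `guess_delimiter` is a hypothetical helper that picks whichever of the five supported delimiters occurs most often in the first line, which is a simple heuristic and not how Inzata itself detects delimiters.

```python
import csv
import io

SUPPORTED_DELIMITERS = {"comma": ",", "semicolon": ";", "tab": "\t", "tilde": "~", "pipe": "|"}


def guess_delimiter(header_line):
    """Pick the supported delimiter that occurs most often in the header line."""
    counts = {name: header_line.count(ch) for name, ch in SUPPORTED_DELIMITERS.items()}
    best = max(counts, key=counts.get)
    if counts[best] == 0:
        raise ValueError("no supported delimiter found")
    return SUPPORTED_DELIMITERS[best]


text = "Client Code|Client Name|Amount\nC1|Acme|10.5\n"
delimiter = guess_delimiter(text.splitlines()[0])
rows = list(csv.reader(io.StringIO(text), delimiter=delimiter))
print(rows[0])  # ['Client Code', 'Client Name', 'Amount']
```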

A text file represents one table (referred to as a cluster in an Inzata Project) with the data column types:

• attributes (identifiers, codes or descriptions for categorical variables. The values of an attribute column can be created as any alphanumeric text.)

• labels (A label is part of an attribute object. An attribute can be represented by multiple display forms, e.g. the “Person” attribute has the “Last Name”, “SSN” and “Email Address” labels).

• facts (quantitative measures defined as numbers). Use numeric values only.

The first row of the file contains the column names, separated by the delimiter. These names are pre-selected as attribute and fact identifiers (names) during the process described in the “Creating a New Load” article. We recommend using a file header with the business names of attributes and facts.

One column is the primary key, whose values are unique within the text file or across all the files loaded incrementally into one cluster.

As a first data integration step, we recommend using a set of smaller files to create a logical data model in InModeler. A file with 100 to 1,000 records is sufficient for this. To load large volumes of data into the enterprise LDM created this way, use the IM ETLp system, which is designed for such data processing. It also supports automated, scheduled data loading on a regular basis.

The NULL value is represented as an empty string (without quotes) in a text file.

String values in a data file can be enclosed in double quotes or apostrophes to mark the beginning and end of the string.

A double quote character cannot be used in data except as the string delimiter mentioned above. The special characters [%’/#@$<>*] can be used in data, but not in the first row as part of a column header.
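A header row can be screened for the special characters listed above before the file is loaded. This is a minimal Python sketch with a hypothetical helper name, `invalid_header_chars`; it treats both the straight and the typographic apostrophe as forbidden, which is an assumption, because the list above shows only one apostrophe form.

```python
# Characters this guide lists as not allowed in a column header.
# Including both ' and ’ is an assumption.
FORBIDDEN_IN_HEADER = set("%'’/#@$<>*")


def invalid_header_chars(column_names):
    """Map each offending column name to the forbidden characters it contains."""
    report = {}
    for name in column_names:
        found = sorted(set(name) & FORBIDDEN_IN_HEADER)
        if found:
            report[name] = found
    return report


print(invalid_header_chars(["Client Code", "Discount %", "Price ($)"]))
# {'Discount %': ['%'], 'Price ($)': ['$']}
```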

The following date and time formats are available:

• Date:

YYYYMMDD

DD/MM/YYYY

D/M/YYYY

MM/DD/YYYY

M/D/YYYY

MM-DD-YYYY

DD-MM-YYYY

YYYY-MM-DD

YYYY-DD-MM

YYYY/DD/MM

• Date Time:

The same formats as Date, followed by a time in HH:MM format.

• Time:

The available format: HH:MM.
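To verify that the values in a source file match one of these formats, the format names can be mapped to Python `strptime` patterns. The sketch below is an illustration under stated assumptions: the `DATE_PATTERNS` mapping and `parse_value` helper are hypothetical, and a single space between the date and the time in Date Time values is assumed, since this guide does not name the separator.

```python
from datetime import datetime

# Documented format name -> strptime pattern. %d and %m also accept
# single-digit values, so D/M/YYYY and M/D/YYYY reuse the padded patterns.
DATE_PATTERNS = {
    "YYYYMMDD": "%Y%m%d",
    "DD/MM/YYYY": "%d/%m/%Y",
    "D/M/YYYY": "%d/%m/%Y",
    "MM/DD/YYYY": "%m/%d/%Y",
    "M/D/YYYY": "%m/%d/%Y",
    "MM-DD-YYYY": "%m-%d-%Y",
    "DD-MM-YYYY": "%d-%m-%Y",
    "YYYY-MM-DD": "%Y-%m-%d",
    "YYYY-DD-MM": "%Y-%d-%m",
    "YYYY/DD/MM": "%Y/%d/%m",
}


def parse_value(text, date_format, with_time=False):
    """Parse text in one documented date format, optionally followed by HH:MM."""
    pattern = DATE_PATTERNS[date_format]
    if with_time:
        pattern += " %H:%M"  # assumes a single space between date and time
    return datetime.strptime(text, pattern)


print(parse_value("31/01/2024", "DD/MM/YYYY").date())                 # 2024-01-31
print(parse_value("2024-01-31 14:05", "YYYY-MM-DD", with_time=True))  # 2024-01-31 14:05:00
```

`datetime.strptime` raises `ValueError` when a value does not match the pattern, which makes it easy to find rows that would fail to load as dates.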

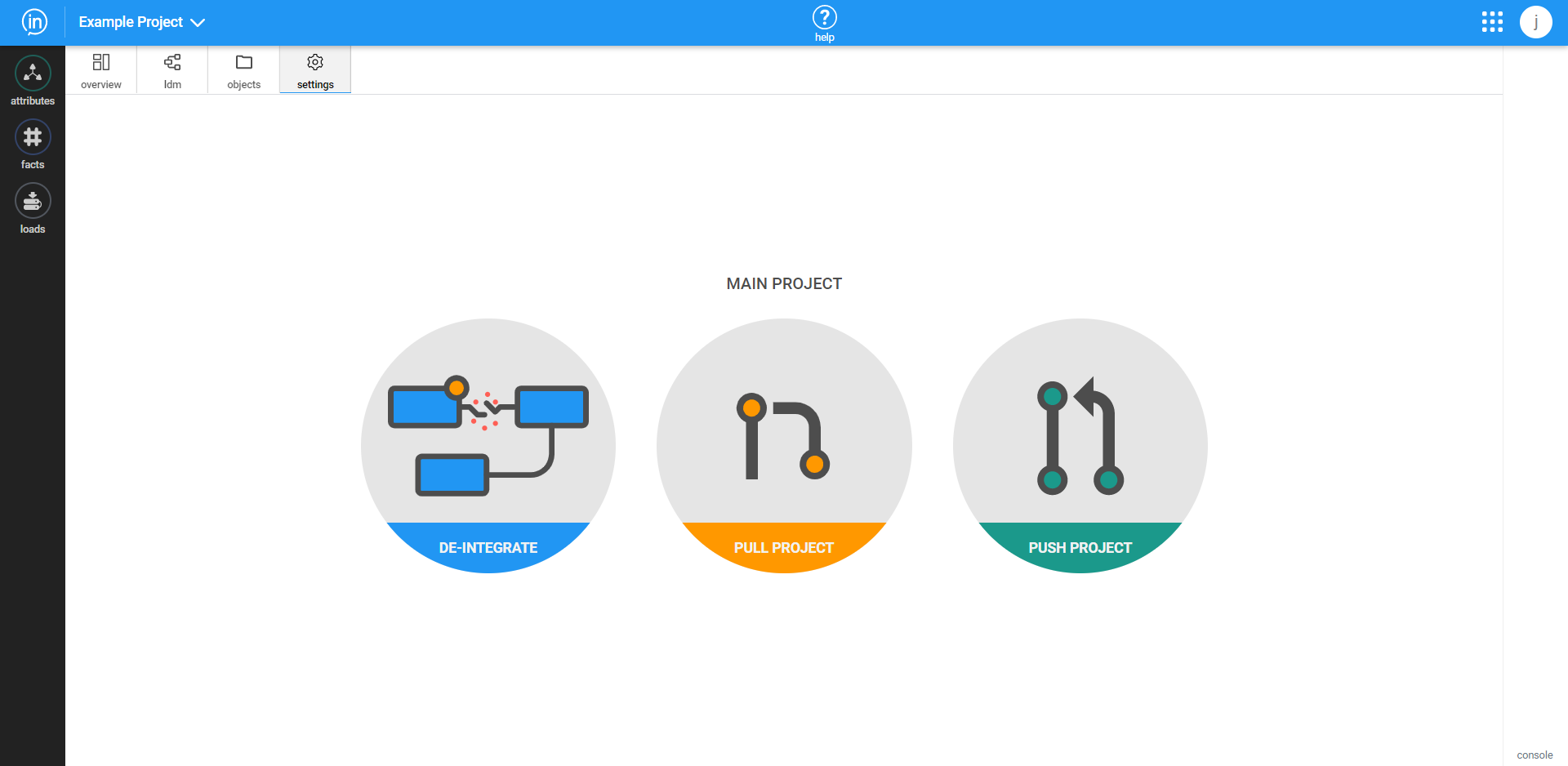

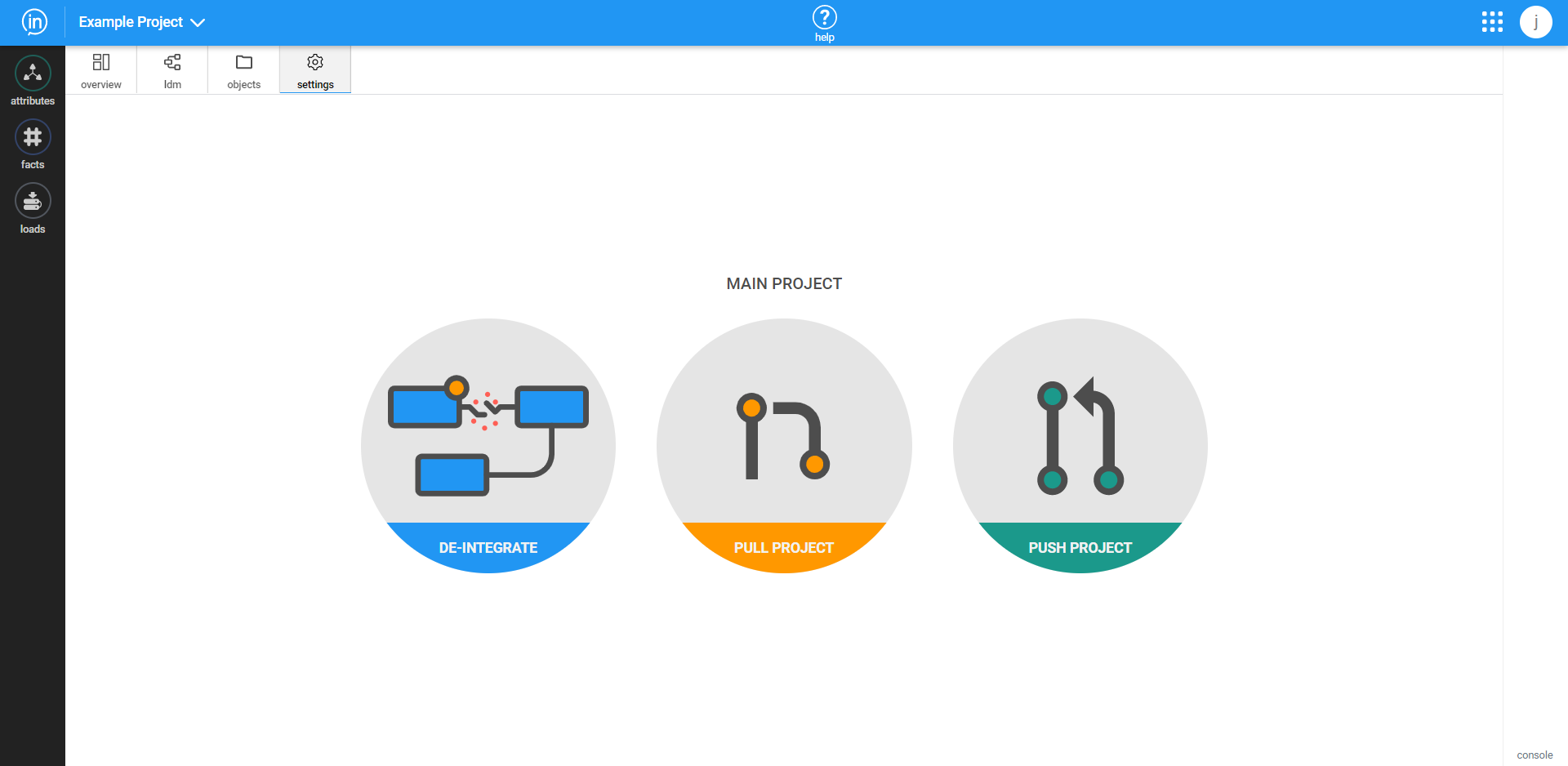

The Settings tab allows you to modify your loaded clusters.

De-integrate

This feature enables the user to remove all the connections and joins among loads that have been implemented in a project. All dimensions that were added and integrated from the list of compatible ones offered by the IM Object Marketplace will be automatically removed.

USE THIS FUNCTION WITH CAUTION. Accidental de-integration will require the data model to be recreated from the clusters.

Click on the settings tab.

Press the “De-Integrate” button.

The de-integration steps are described in the picture:

• All connected packages will be removed

• All joined dimensions will be disconnected and removed

• The LDM will be restored to its original state, as it was after loading all the data clusters into the common space (not joined or connected, just standalone).

Press the next “De-Integrate” button to start the process:


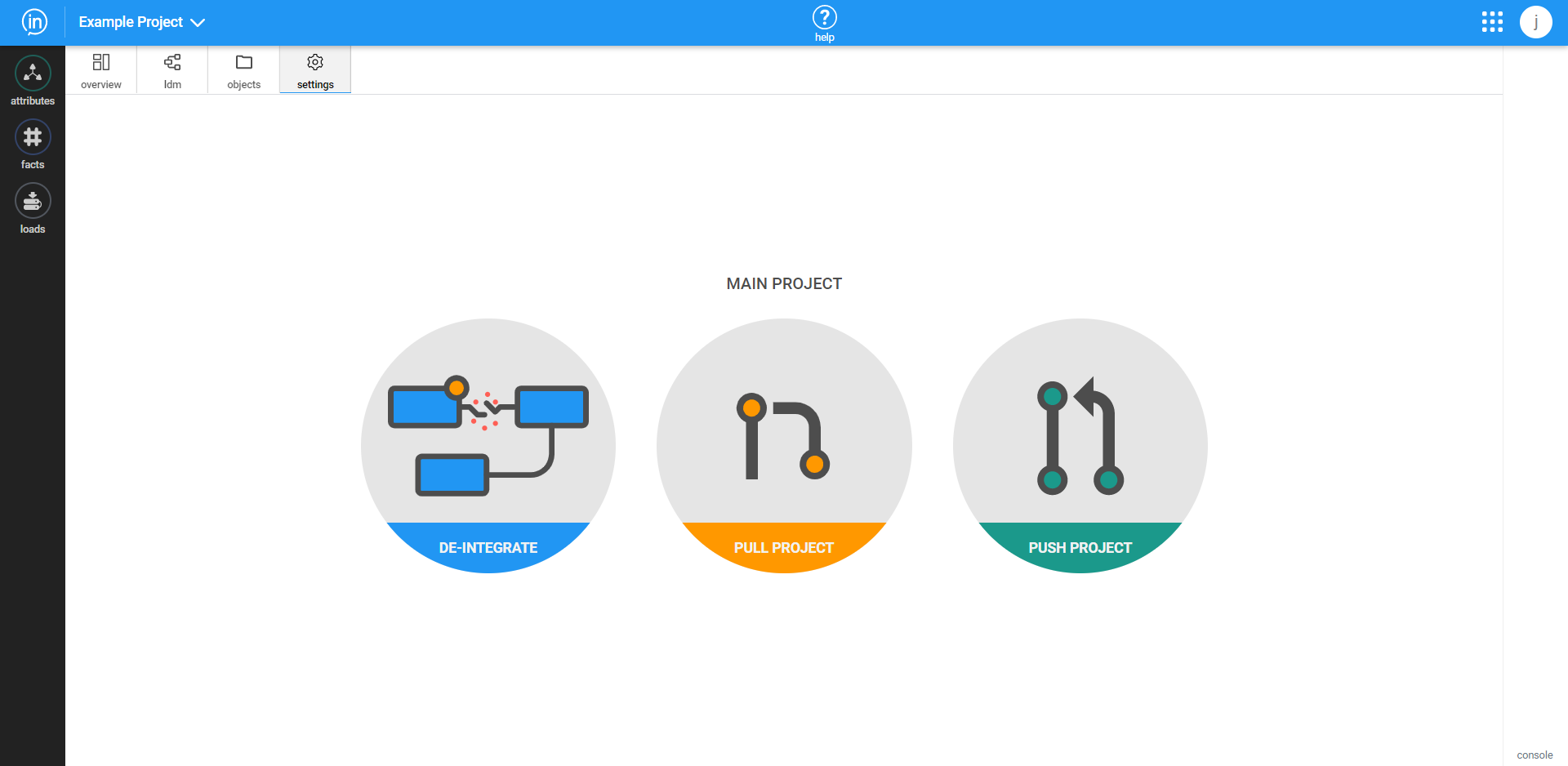

Pull/Push Project

Because multiple projects can be worked on by a user, Inzata supports the creation of staging and development projects that can be worked on without affecting the main production project. If a user takes this approach to developing their data warehouse, then they can use the Pull function to take the changes committed to a production project and replicate them in a staging/dev project. To complement that, a staging/dev project can be pushed to a production project using the “Push” button.

What are they? How do they work? – https://help.tableau.com/current/pro/desktop/en-us/datafields_typesandroles.htm

What are Facts and Attributes?