Inzata’s Fully Automated Data Quality Solution

Ensuring the accuracy, completeness, and reliability of data is critical for organizations. Integrating diverse data sources into a centralized data warehouse poses challenges that, if not addressed, can compromise data quality. This article examines the robust Data Quality Assurance Program employed by the Inzata Platform and the innovative IZ Data Quality System, both of which play pivotal roles in maintaining data integrity throughout the entire data lifecycle.

Inzata Platform’s Data Quality Assurance Program

The Inzata Platform’s data quality assurance program is designed to address challenges encountered during the entry of information into the data warehouse. Several key strategies contribute to enhancing data quality:

• Data quality improves when teams know more about the source system data and have better access to source data owners and analysts. Well-documented data attributes, entities, and the business rules governing them further improve modeling and reporting from the data warehouse. Understanding how data is translated and filtered from source to target is crucial, so access to Source System Developers should be mandatory when documentation is insufficient. Where developers lack comprehensive source definitions because of personnel changes, an empirical “data discovery” process may be required to uncover source business rules and relationships.

• Integrated planning between the source and target systems, with agreement on tasks and schedules, increases efficiency and minimizes resource-wasting surprises.

• A well-communicated data quality control process, with project communication plans that provide timely status updates and address issues promptly, is integral to this collaborative workflow.

1. Conducting Data Quality Design Reviews with all relevant groups present is a proactive measure to prevent defects in raw data, translation, and reporting. If design reviews are skipped because of tight schedules, at least double the time should be allocated for defect correction to compensate.

2. Implementing Source Data Input/Entry Control is crucial for data quality improvement. Anomalies arise when human users/operators or automated processes deviate from defined attribute domains and business rules during data entry. Typical issues include free-form text entry, redefinition of data types, referential integrity violations, and non-standard use of data entry functions. Screening for such anomalies and enforcing correct entry at the point of creation significantly improves data quality and benefits every downstream system that relies on the data.
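As an illustration of source entry control, the sketch below screens a single incoming record against per-attribute rules covering the anomaly types listed above: missing values, wrong data types, values outside the defined domain (the usual symptom of free-form text entry), and referential integrity violations. It is a minimal Python sketch, not Inzata functionality; the `FieldRule` structure, the `screen_record` function, and the sample rules are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any


@dataclass
class FieldRule:
    """Hypothetical entry rule for one attribute."""
    name: str
    type_: type
    domain: set | None = None  # None: any value of the right type is accepted
    required: bool = True


def screen_record(record: dict[str, Any], rules: list[FieldRule],
                  parent_keys: dict[str, set]) -> list[str]:
    """Return the anomalies found in one record; an empty list means it passes."""
    anomalies = []
    for rule in rules:
        value = record.get(rule.name)
        if value is None:
            if rule.required:
                anomalies.append(f"{rule.name}: missing required value")
            continue
        if not isinstance(value, rule.type_):
            anomalies.append(f"{rule.name}: expected {rule.type_.__name__}, "
                             f"got {type(value).__name__}")
            continue
        if rule.domain is not None and value not in rule.domain:
            anomalies.append(f"{rule.name}: {value!r} is outside the allowed domain")
        # Referential integrity: a foreign-key value must match an existing parent key.
        if rule.name in parent_keys and value not in parent_keys[rule.name]:
            anomalies.append(f"{rule.name}: {value!r} has no matching parent record")
    return anomalies


# Sample rules for a hypothetical order-entry screen.
rules = [
    FieldRule("order_id", int),
    FieldRule("status", str, domain={"NEW", "SHIPPED", "CANCELLED"}),
    FieldRule("customer_id", int),
]
parent_keys = {"customer_id": {101, 102}}

print(screen_record({"order_id": 1, "status": "SHIPED", "customer_id": 999},
                    rules, parent_keys))
```

Rejecting the record at entry time, rather than cleaning it later in the warehouse, is what lets every downstream consumer benefit from the same check.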

Expanding the Data Quality Assurance Horizon with Inzata Platform’s Program and IZ Data Quality Control Process

Beyond procedural activities, the Inzata Platform’s Quality Assurance Program considers user and operational processes that may impact data quality. Meanwhile, the IZ Data Quality System provides a comprehensive solution with its Data Audit functions, Data Quality Statistics, and ETL Data Quality Monitoring.

Common Challenges and Solutions:

1. Operating Procedures:

• Solution: The business users responsible for the source systems and the end users’ processes need to be engaged in identifying the standard procedures that create bad data. They should understand the root causes and be chartered with improving those data creation processes.

2. Misinterpretation of Data:

• Solution: Developers in the data warehouse must possess a comprehensive understanding of the data to ensure accurate modeling, translation, and reporting. In cases of incomplete documentation, conducting design reviews for both the data model and translation business rules becomes crucial. It is essential to document business rules influencing data transformation, subject them to an approval process, and, in instances of personnel changes, undertake an empirical “data discovery” process to unveil business rules and relationships.

3. Incomplete Data:

• Solution: To address incomplete data, a thorough review of each data source is imperative. Specific causes of incompleteness should be identified and targeted with quality checks. This includes implementing scripts to identify transmission errors and missing data, as well as generating logs during load procedures to flag data not loaded due to quality issues. Routine review and resolution of these logs, coupled with planned root cause analysis, are essential to spot and correct repetitive errors at their source. A designated data quality steward should regularly review the logs for effective quality control.
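The completeness checks and load logs described above could be sketched as follows. The code assumes, hypothetically, that each source announces an expected row count (a control total) alongside the data; rows missing required values go to a reject log instead of being loaded, and a frequency count over that log surfaces recurring errors for root cause analysis. All names are illustrative, not part of the Inzata Platform.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class RejectedRow:
    row_number: int  # 1-based position in the received file
    reason: str


def load_with_completeness_checks(rows, required_columns, expected_row_count=None):
    """Split rows into loaded rows and a reject log, and compare the row count
    against the control total announced by the source (if any)."""
    loaded, reject_log = [], []
    for number, row in enumerate(rows, start=1):
        missing = [c for c in required_columns if row.get(c) in (None, "")]
        if missing:
            reject_log.append(RejectedRow(number, "missing: " + ", ".join(missing)))
        else:
            loaded.append(row)
    # A row count that differs from the announced total suggests a transmission problem.
    transmission_ok = expected_row_count is None or len(rows) == expected_row_count
    return loaded, reject_log, transmission_ok


def recurring_errors(reject_log, min_occurrences=2):
    """Reasons that occur repeatedly: candidates for root cause analysis at the source."""
    counts = Counter(entry.reason for entry in reject_log)
    return {reason: n for reason, n in counts.items() if n >= min_occurrences}


rows = [
    {"id": 1, "amount": 10},
    {"id": 2, "amount": None},
    {"id": None, "amount": 5},
    {"id": 4, "amount": ""},
]
loaded, reject_log, transmission_ok = load_with_completeness_checks(
    rows, ["id", "amount"], expected_row_count=5)
print(len(loaded), transmission_ok, recurring_errors(reject_log))
```

In practice the reject log would be persisted so that the data quality steward can review and resolve it on a regular schedule.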

IZ Data Quality System: A Closer Look

The IZ Data Quality System employs a workflow processing approach with comprehensive reporting systems utilizing the following IZ Platform modules:

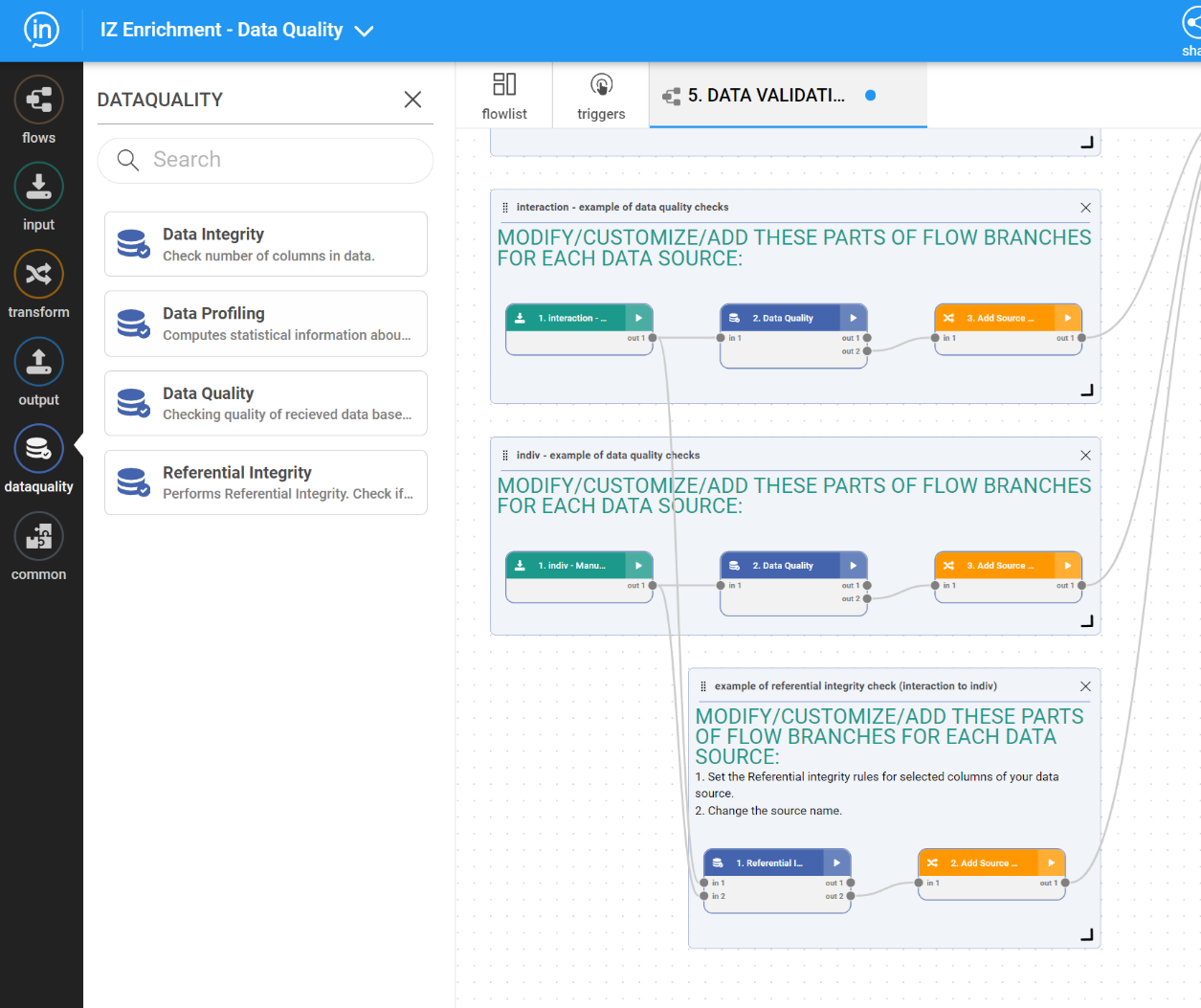

1. InFlow:

• InFlow Special Data Quality Functions – InFlow provides pre-built data quality functions that can be configured quickly and easily (see the list at the end of this document).

• Data Integrity – checks the number of columns in each data cluster.

• Data Profiling – calculates basic statistical patterns and characteristics for a given data cluster.

• Data Quality – checks the quality of received data against a preconfigured set of selected audit functions (see the list at the end of this document).

• Referential Integrity – checks if values from the first data set are present in the second data set.
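To make three of these function types concrete, here is a rough Python equivalent of the Data Integrity, Data Profiling, and Referential Integrity checks. It does not use or reproduce InFlow's API; the function names, the choice of statistics in the profile, and the sample data are assumptions for illustration.

```python
import statistics
from typing import Any


def check_integrity(rows: list[list[Any]], expected_columns: int) -> list[int]:
    """Data Integrity: indexes of rows whose column count differs from the expected count."""
    return [i for i, row in enumerate(rows) if len(row) != expected_columns]


def profile_column(values: list[Any]) -> dict[str, Any]:
    """Data Profiling: null count, distinct count and, for numeric data, min/max/mean."""
    non_null = [v for v in values if v is not None]
    profile = {"count": len(values), "nulls": len(values) - len(non_null),
               "distinct": len(set(non_null))}
    numbers = [v for v in non_null if isinstance(v, (int, float)) and not isinstance(v, bool)]
    if numbers:
        profile.update(min=min(numbers), max=max(numbers), mean=statistics.fmean(numbers))
    return profile


def missing_references(first: list[Any], second: list[Any]) -> set:
    """Referential Integrity: values of the first data set absent from the second."""
    return set(first) - set(second)


rows = [[1, "A", 10], [2, "B"], [3, "A", None]]
print(check_integrity(rows, expected_columns=3))
print(profile_column([10, None, 30, 20]))
print(missing_references(["A", "B", "C"], ["A", "B"]))
```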

These InFlow functions are available in a special IF menu:

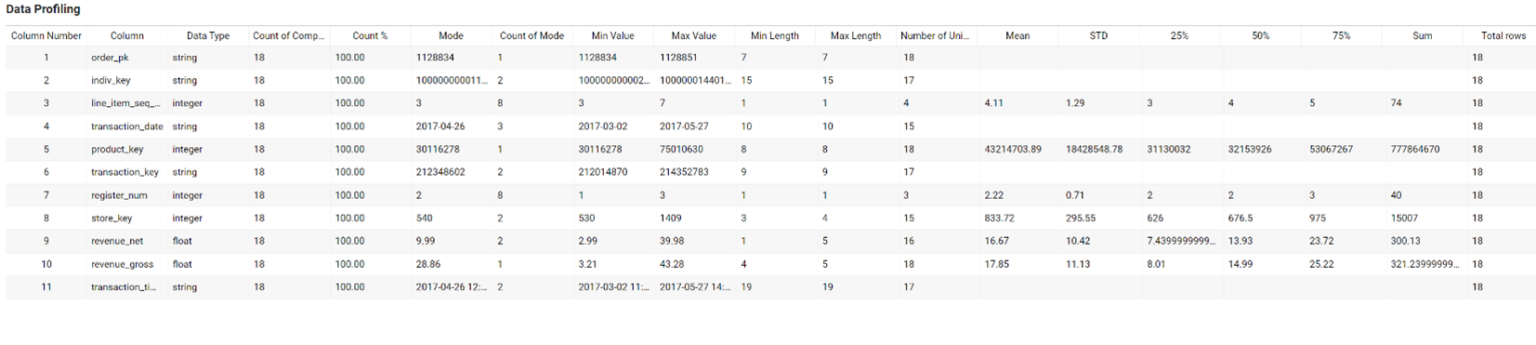

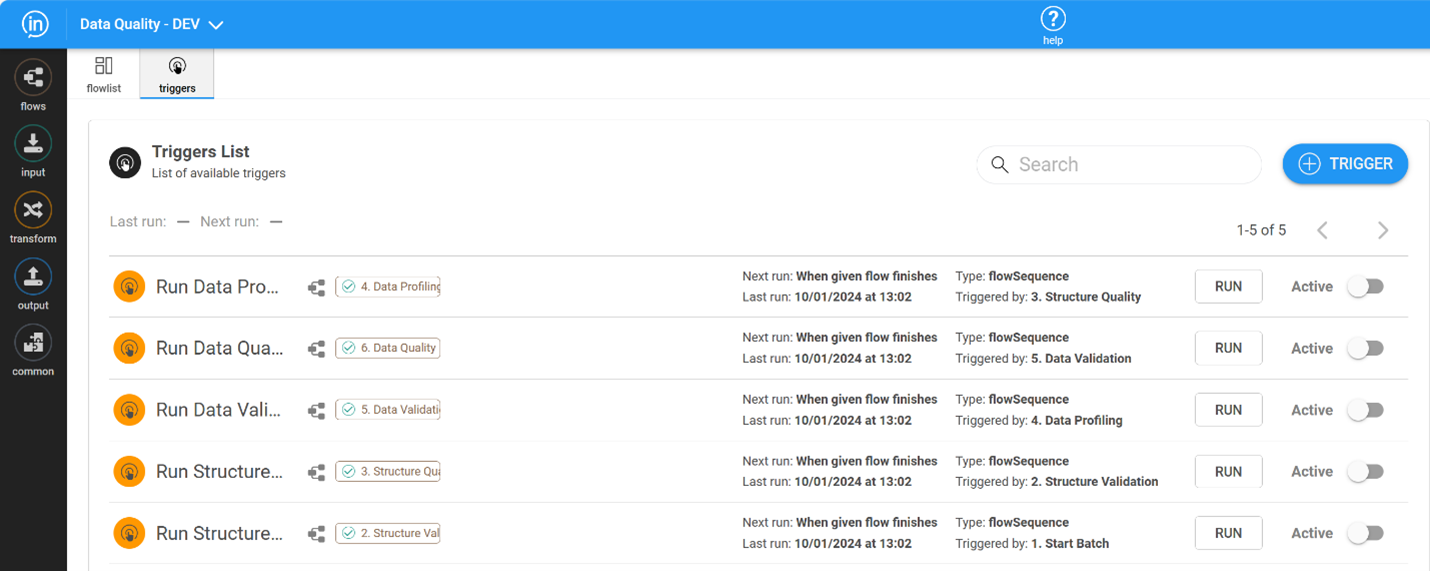

• DQ Flows monitor the provided data for anomalies and verify the correctness of the source data. The DQ Flow system also makes automatic go/no-go decisions about continuing the ETL process, based on the results of the data auditing procedures.

• On-the-fly communication – an automated messaging system created in the IZ Project performs data verification after the data is transformed into the target PDLs and lookup files used by the IZ project.

• Loading DQ audit results into Inzata – the results of data profiling and DQ analysis are loaded into the IZ DW.

• Automation – the orchestration of all the processes is supported by the Triggers functionality of the InFlow module. The system is designed to make smart go/no-go decisions in a fully automated way.
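The go/no-go behavior described above can be pictured as a chain of flows in which each successful flow triggers the next, while a no-go result halts the chain and notifies the recipients. This is a hypothetical sketch of the control logic only; `TriggerChain`, `FlowResult`, and the `notify` callback are invented names and do not represent the InFlow Triggers API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FlowResult:
    go: bool          # True: continue the ETL process; False: stop it
    detail: str = ""


@dataclass
class TriggerChain:
    """Hypothetical orchestration: each flow that returns "go" triggers the next flow."""
    flows: list[tuple[str, Callable[[], FlowResult]]]
    notify: Callable[[str], None]  # e.g. send a message to predefined recipients
    executed: list[str] = field(default_factory=list)

    def run(self) -> bool:
        for name, flow in self.flows:
            result = flow()
            self.executed.append(name)
            if not result.go:
                # No-go: later flows are never triggered.
                self.notify(f"{name} stopped the load: {result.detail}")
                return False
        return True


messages: list[str] = []
chain = TriggerChain(
    flows=[
        ("structure_validation", lambda: FlowResult(True)),
        ("data_validation", lambda: FlowResult(False, "amount has 3 null rows")),
        ("load_target", lambda: FlowResult(True)),
    ],
    notify=messages.append,
)
completed = chain.run()
print(completed, chain.executed, messages)
```

Passing `notify` in as a callback keeps the decision logic independent of how alerts are actually delivered.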

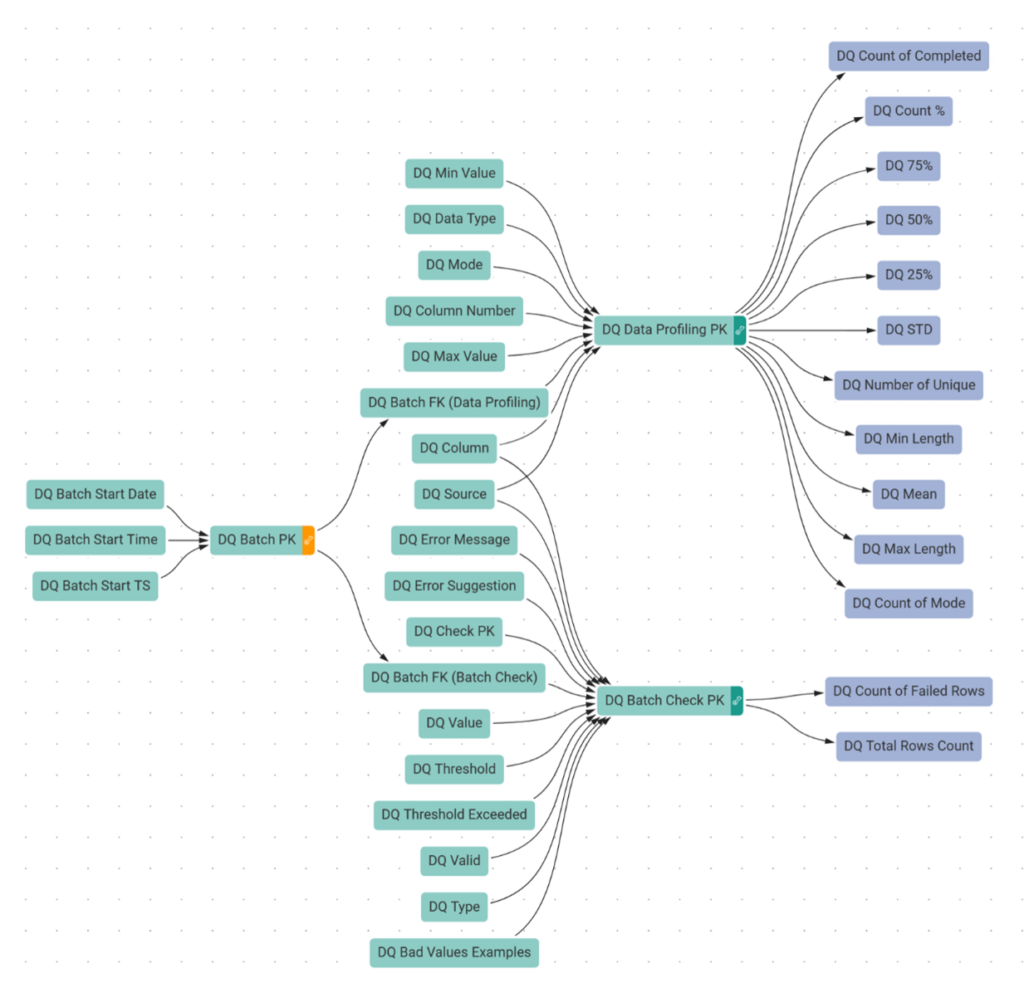

2. InModeler:

• The dimensional data structure is designed to hold the results of the data quality analysis performed by the ETL process in the InFlow module.

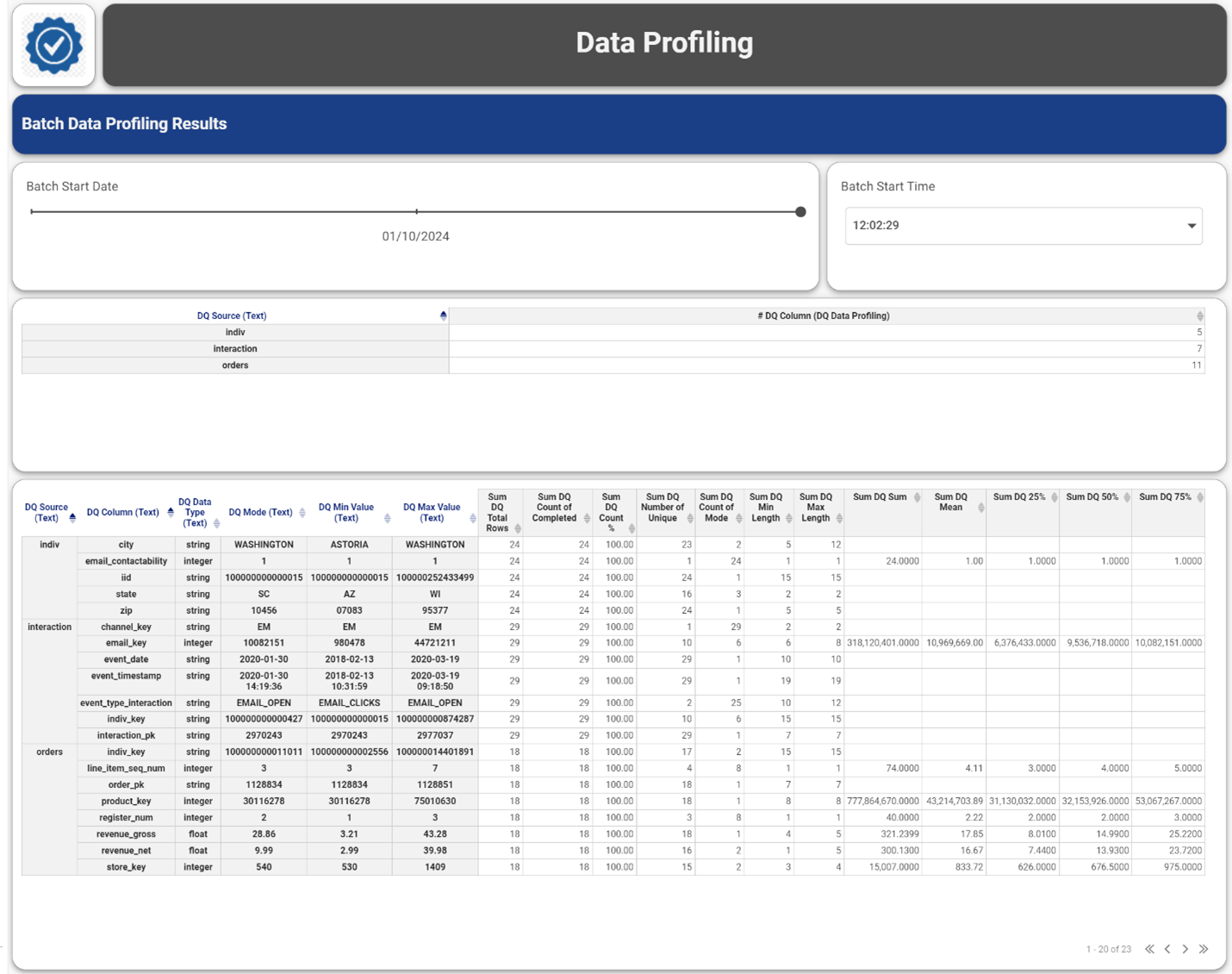
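One possible shape for such a dimensional structure is a small star schema: dimensions for batches, source columns, and audit checks, with a fact table holding row counts per check. The sketch below creates it in an in-memory SQLite database using Python's standard `sqlite3` module; the table and column names and the sample rows are assumptions, not the InModeler model shown in the figure.

```python
import sqlite3

# Hypothetical star schema for DQ results: three dimensions and one fact table.
SCHEMA = """
CREATE TABLE dim_batch  (batch_id  INTEGER PRIMARY KEY, source_file TEXT);
CREATE TABLE dim_column (column_id INTEGER PRIMARY KEY, source_name TEXT, column_name TEXT);
CREATE TABLE dim_check  (check_id  INTEGER PRIMARY KEY, check_name TEXT);
CREATE TABLE fact_dq_result (
    batch_id     INTEGER REFERENCES dim_batch(batch_id),
    column_id    INTEGER REFERENCES dim_column(column_id),
    check_id     INTEGER REFERENCES dim_check(check_id),
    rows_checked INTEGER,
    rows_failed  INTEGER
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

# Illustrative results for one batch (not real data).
conn.execute("INSERT INTO dim_batch VALUES (1, 'orders.csv')")
conn.execute("INSERT INTO dim_column VALUES (1, 'orders', 'amount')")
conn.executemany("INSERT INTO dim_check VALUES (?, ?)", [(1, "not_null"), (2, "in_domain")])
conn.executemany("INSERT INTO fact_dq_result VALUES (?, ?, ?, ?, ?)",
                 [(1, 1, 1, 100, 3), (1, 1, 2, 100, 0)])

# A typical report query: failed rows per check across all batches.
failed_by_check = conn.execute(
    """SELECT c.check_name, SUM(f.rows_failed)
       FROM fact_dq_result AS f JOIN dim_check AS c USING (check_id)
       GROUP BY c.check_name ORDER BY c.check_name"""
).fetchall()
print(failed_by_check)
```

Keeping counts in a single fact table lets reports slice failures by batch, source column, or check type without changing the schema.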

3. InBoard:

• This module supports flexible reporting on the data profiling and DQ audit results; see the examples below.

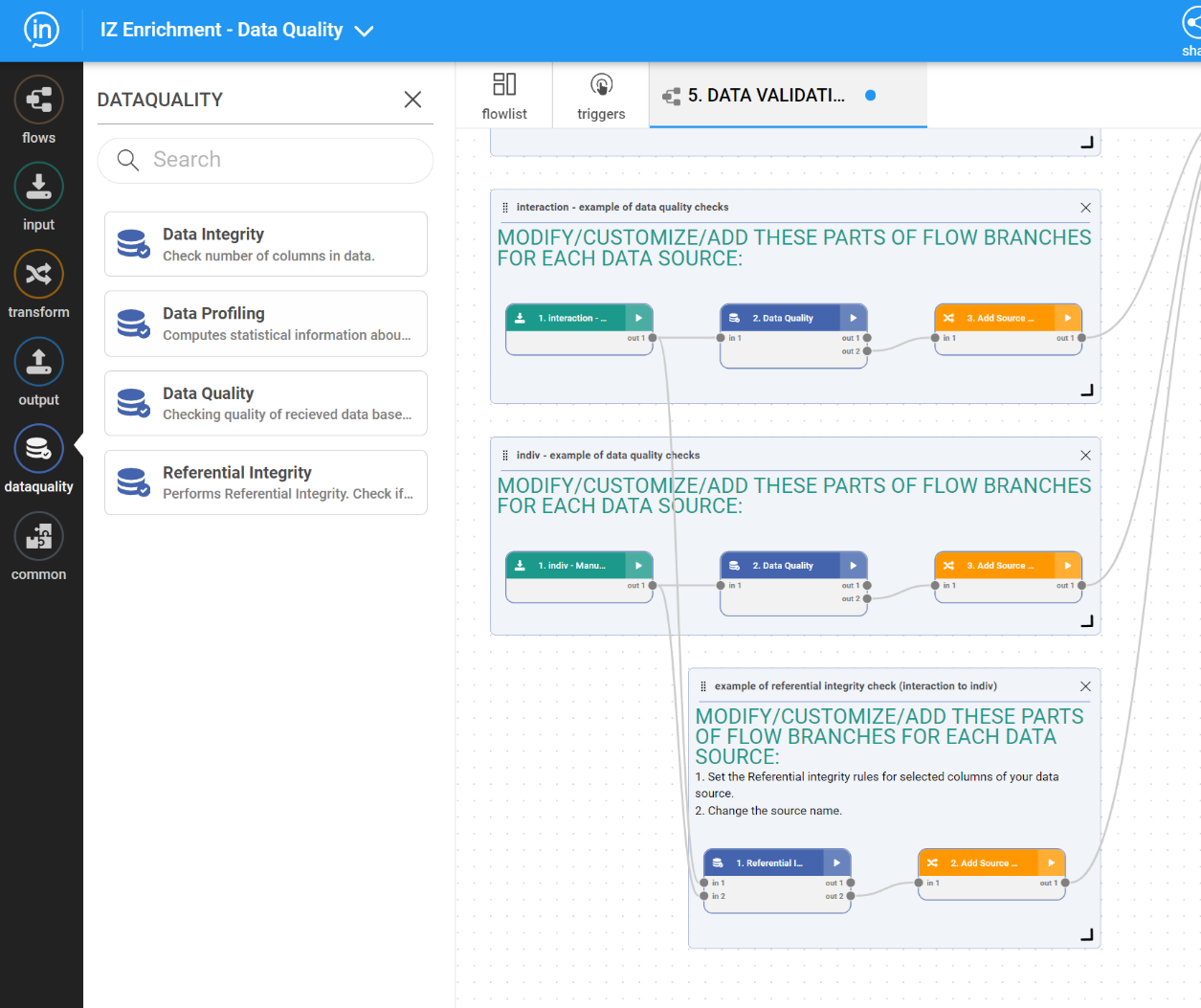

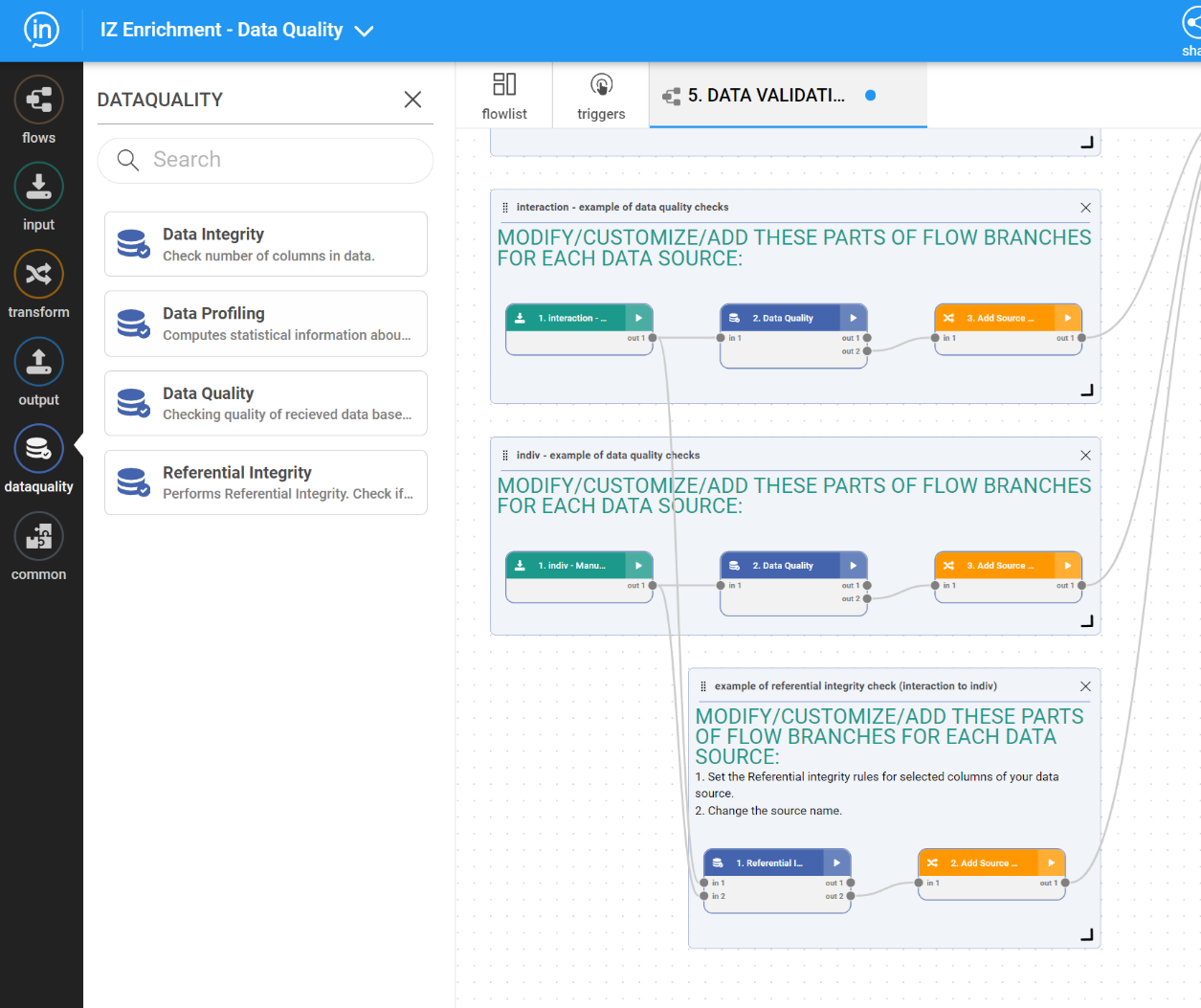

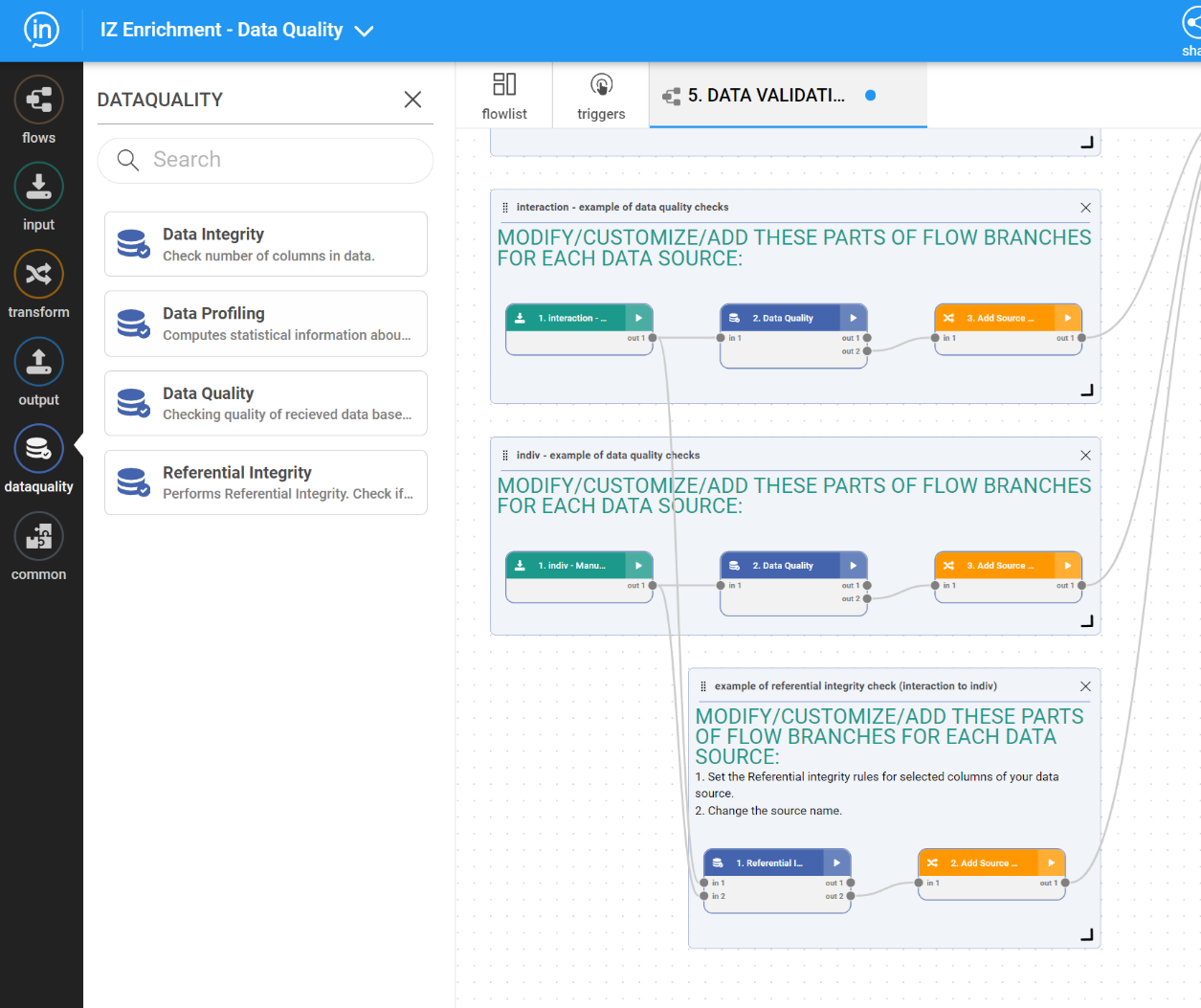

The IZ ETL process includes the following DQ flow types:

1. Start Batch Flow – adds a new batch record to the Batch cluster. It typically runs when a new data file is to be processed from a data quality point of view.

2. Structure Validation – during this phase the system verifies the presence of all the columns in a given data source file.

3. Structure DQ Manager – decides whether the data file structure is correct. If it is, the system continues with the next phase; otherwise, processing stops and automatically generated messages are sent to predefined recipients.

4. Data Profiling – basic statistical profiles are generated for all the columns, and the results are loaded into the DQ DW.

5. Data Validation – all the predefined audit checks are executed on all the columns of a given data source, and the results are loaded into the DQ DW. All possible DQ check types are listed in the Appendix section.

6. Data DQ Manager – decides whether the data quality of all the columns is correct. If it is, the system continues with the next phase; otherwise, processing stops and automatically generated messages are sent to predefined recipients. Some critical data checks may end with an error; however, if the number of failed rows stays within a predefined threshold, the process is still allowed to continue to the next phase.

7. Reporting – during processing, the results of structure validation, data profiling, and data quality auditing are loaded into the DQ DW (steps 2, 4, and 5), where they are available for comprehensive reporting and analysis. Example dashboards are shown in the InBoard section above.
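Steps 1 to 6 can be sketched end to end as below. This is a hypothetical, in-memory model of the flow logic, not the IZ ETL implementation: a Python list stands in for the DQ DW, messages are collected in a list instead of being sent, structure is checked against the first row's keys only, and each check carries its own tolerated failed-row threshold (default 0), which is one reading of the threshold rule in step 6.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Check:
    """Hypothetical audit check on one column with a tolerated number of failed rows."""
    column: str
    name: str
    passes: Callable[[Any], bool]
    max_failed_rows: int = 0


@dataclass
class BatchRun:
    batch_id: int
    dq_dw: list[dict] = field(default_factory=list)    # stand-in for the DQ DW
    messages: list[str] = field(default_factory=list)  # stand-in for sent alerts


def run_dq_flows(run: BatchRun, rows: list[dict], columns: list[str],
                 checks: list[Check]) -> bool:
    # 1. Start Batch Flow: register the batch.
    run.dq_dw.append({"type": "batch", "batch_id": run.batch_id})
    # 2. Structure Validation: are all expected columns present (first row's keys)?
    missing = [c for c in columns if not rows or c not in rows[0]]
    run.dq_dw.append({"type": "structure", "missing": missing})
    # 3. Structure DQ Manager: stop and alert if the structure is wrong.
    if missing:
        run.messages.append(f"batch {run.batch_id}: missing columns {missing}")
        return False
    # 4. Data Profiling: basic statistics per column.
    for col in columns:
        values = [r.get(col) for r in rows]
        run.dq_dw.append({"type": "profile", "column": col, "nulls": values.count(None),
                          "distinct": len({v for v in values if v is not None})})
    # 5. Data Validation: run every check and record its failed-row count.
    over_threshold = []
    for check in checks:
        failed = sum(1 for r in rows if not check.passes(r.get(check.column)))
        run.dq_dw.append({"type": "check", "column": check.column,
                          "check": check.name, "failed": failed})
        if failed > check.max_failed_rows:
            over_threshold.append(f"{check.column}.{check.name} ({failed} rows)")
    # 6. Data DQ Manager: continue only if every check stays within its threshold.
    if over_threshold:
        run.messages.append(f"batch {run.batch_id}: checks over threshold {over_threshold}")
        return False
    return True


rows = [{"id": 1, "amount": 10}, {"id": 2, "amount": None}, {"id": 3, "amount": 7}]
checks = [
    Check("amount", "not_null", lambda v: v is not None, max_failed_rows=1),
    Check("id", "positive", lambda v: v is not None and v > 0),
]
run = BatchRun(batch_id=1)
print(run_dq_flows(run, rows, ["id", "amount"], checks), run.messages)
```

Because every phase appends its results to `dq_dw` before any go/no-go decision, a stopped batch still leaves behind the records needed for step 7 reporting.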

The integration of the Inzata Platform’s Data Quality Assurance Program and the IZ Data Quality System creates a comprehensive framework for maintaining high-quality data throughout its lifecycle. By addressing challenges at multiple stages and employing innovative tools, organizations can ensure the reliability and accuracy of their data, empowering informed decision-making and maximizing the value derived from their data assets.