How Does Your Company’s Data Real Estate Measure Up?

Are you still letting your gut guide business and promotional plans? In today’s market, where nearly 60 percent of companies leverage “big data” and growth statistics indicate a 5,000 percent industry increase over the past 10 years, it’s a dangerous choice, especially since adoption continues to grow. Before long, data-rooted marketing and procedural initiatives will become as commonplace as the Internet.

This industry push toward informational analytics raises the question: How is your company’s digital data game? Are you keeping up with the times or lagging woefully behind?

Why Is Data So Important These Days?

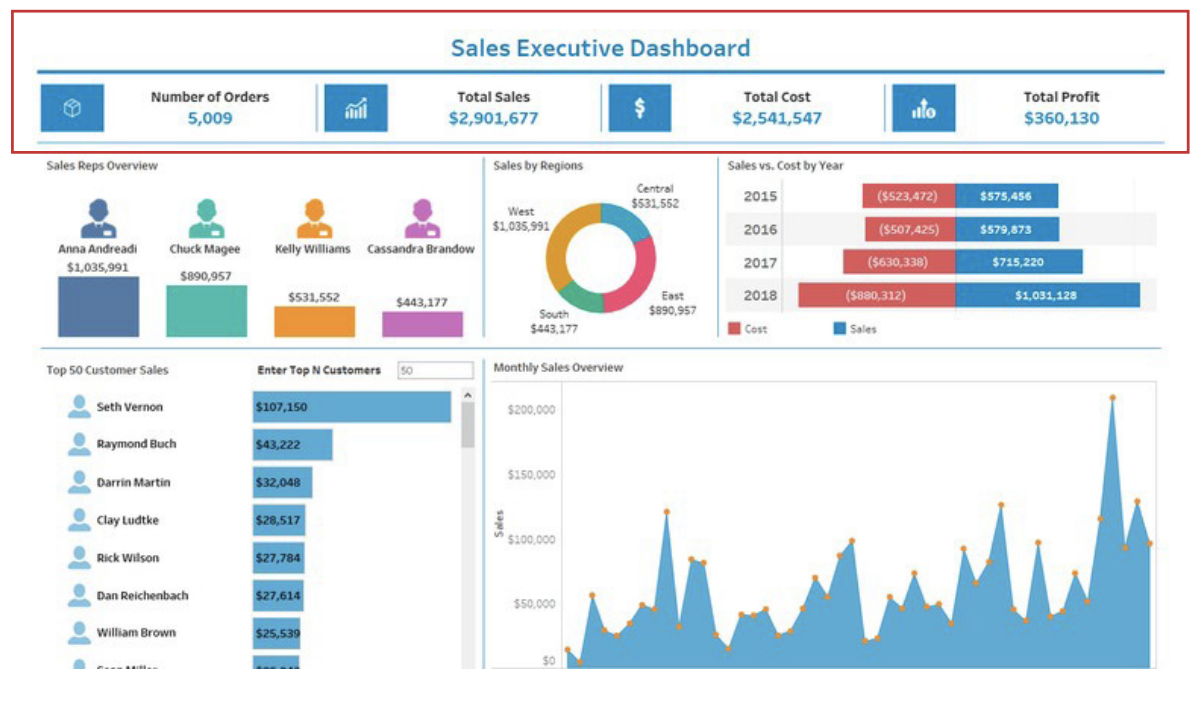

Data is like a crystal ball. It provides insight into market trends, customer behavior, and back-office logistics. Companies that invest in informational architecture tend to save money and increase efficiency, giving them a competitive edge.

What Is Data “Real Estate”?

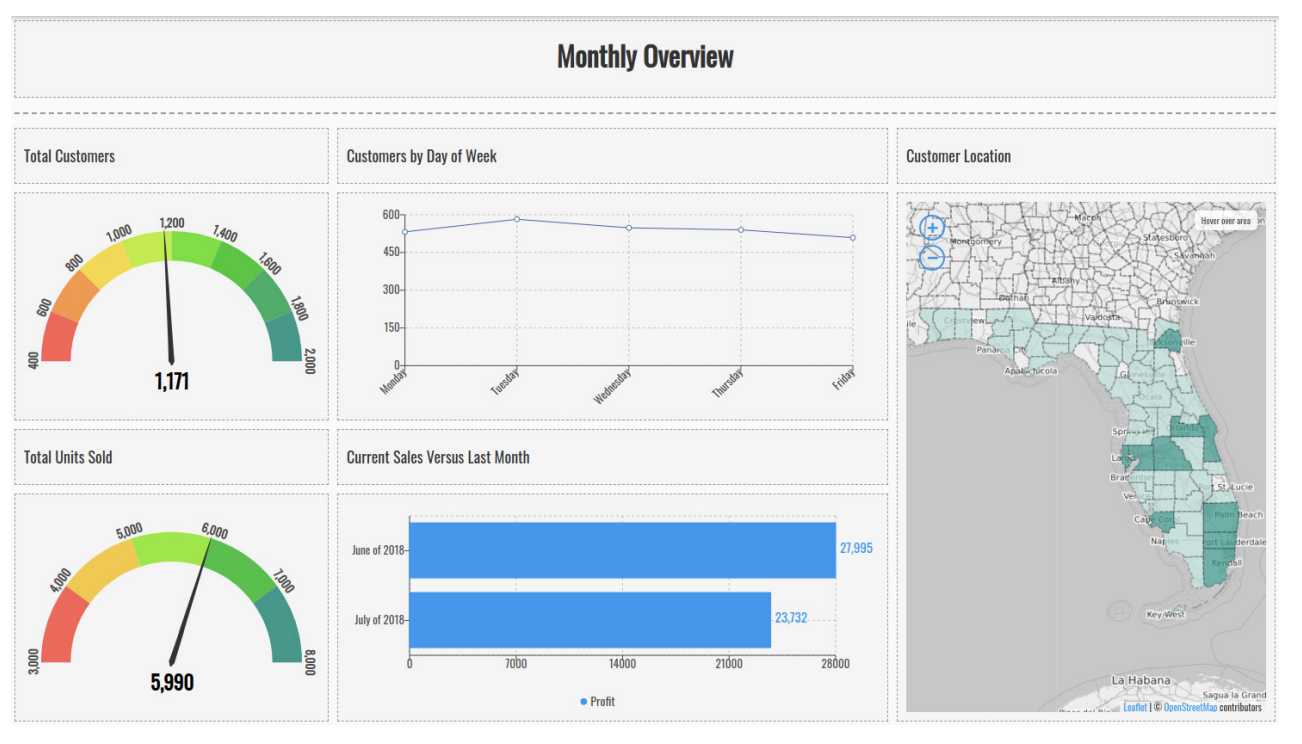

Data “real estate” refers to the software, hardware, and reporting mechanisms a business uses to collect, sort, and analyze raw data. The phrase can also encompass your informational pipeline and procurement methods.

How To Modernize Your Data Real Estate

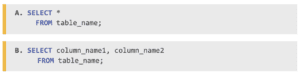

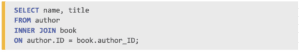

Decades ago, when businesses first started leveraging data, most IT analytics tools were static and limited. Microsoft Excel and Access were the big players back then. Relational databases were available too, but early options required lots of manual data entry, and they lacked dynamism.

If you’re still paddling in that data puddle, it’s time to modernize. Today’s options are light-years ahead, and they’ll likely improve your bottom line in the long run.

Embrace Automation and Merge Your Lakes

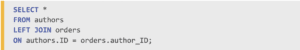

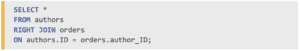

Automation advancements have seismically changed the data pipeline landscape. Today’s programs can handle many routine parsing, cleaning, and sorting tasks. What once took hours now takes minutes. Additionally, auto-correction and other machine-learning innovations have significantly improved data accuracy.
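To make "routine parsing, cleaning, and sorting" concrete, here is a minimal Python sketch of the kind of chore an automated pipeline takes off your plate. The field names (`order_id`, `amount`, `date`) and the `clean_records` helper are hypothetical, invented for illustration, and the sketch assumes every raw value arrives as text.

```python
from datetime import datetime


def clean_records(raw_rows):
    """Parse, clean, and sort raw order rows (hypothetical field names)."""
    cleaned = []
    seen_ids = set()
    for row in raw_rows:
        order_id = row.get("order_id", "").strip()
        if not order_id or order_id in seen_ids:
            continue  # skip blank IDs and duplicate rows
        try:
            # Parse "$1,200.50" style amounts and ISO dates such as "2023-03-02".
            amount = float(row["amount"].replace("$", "").replace(",", ""))
            placed = datetime.strptime(row["date"].strip(), "%Y-%m-%d").date()
        except (KeyError, ValueError):
            continue  # skip rows with missing or unparseable values
        seen_ids.add(order_id)
        cleaned.append({"order_id": order_id, "amount": amount, "date": placed})
    # Sort the surviving rows from oldest to newest.
    return sorted(cleaned, key=lambda r: r["date"])
```

A real pipeline would log or quarantine the rows it rejects rather than silently dropping them, but the shape of the work is the same: normalize, validate, deduplicate, sort.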

Streamline Your Data Flow: Moving from ETL to CDC

The next step in modernizing your data real estate is moving from an ETL environment to a CDC one. ETL stands for “extract, transform, load,” while CDC represents “change data capture.” We could write a dissertation on the technical differences between the two methodologies, but for the purposes of this conversation, suffice it to say that the latter captures changes as they happen and provides a near-constant stream of fresh data, while the former traditionally runs as a scheduled batch process.

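Now here’s where things get a little bit confusing. CDC is often paired with ELT, which stands for “extract, load, transform.” ELT is frequently described as the next generation of ETL: raw data is loaded first and transformed inside the destination system, which allows for better speed and fluidity.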
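For readers who think in code, the difference between reloading everything and streaming changes can be sketched in a few lines of Python. This is an illustration only, not how any particular product works: the event format (`op`, `row`, `id`) and both function names are assumptions made up for the example, and the "target" is just an in-memory dictionary standing in for a warehouse table.

```python
def batch_reload(source_rows):
    """Batch style: rebuild the whole target from a full source extract."""
    return {row["id"]: row for row in source_rows}


def apply_change(target, event):
    """CDC style: apply a single change event to the existing target."""
    op = event["op"]
    if op in ("insert", "update"):
        target[event["row"]["id"]] = event["row"]
    elif op == "delete":
        target.pop(event["id"], None)  # ignore deletes for rows we never saw
    else:
        raise ValueError(f"unknown operation: {op}")
    return target


# One initial full load, then only the changes flow through.
target = batch_reload([{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}])
events = [
    {"op": "update", "row": {"id": 1, "name": "Ada L."}},
    {"op": "delete", "id": 2},
    {"op": "insert", "row": {"id": 3, "name": "Edsger"}},
]
for event in events:
    apply_change(target, event)
```

The batch function touches every row on every run, while the change-driven function touches only what moved, which is where the freshness and speed gains come from.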

The Future Is Now, And It’s Data-Driven

In days of old, when Mad Men ruled Madison Avenue, business acumen was more of a talent than a science. And while it still takes competency and knowledge to run a successful company, data analysis removes a lot of the guesswork.

The margin for error is becoming increasingly narrow, and leveraging big data will help ensure that you keep a competitive edge.