How you design your dashboard is crucial when it comes to displaying your data effectively. It’s important to visualize your data in a way that’s clear and easy for viewers to understand. However, with the abundance of data and reports needed to answer queries, it can be difficult to know what to consider in your design process. Let’s dive into the three key elements to implement when improving your dashboard design.

1. Develop a Plan

It’s natural to want to play around with your data and jump right into building dashboards. However, try not to start creating and adding charts right off the bat. It’s useful to plan ahead and lay out the details of your dashboard before you build it. This means determining the overall purpose of your dashboard as well as what information it needs to include. Planning ahead will help minimize overcrowding and reduce the need for continual adjustments to your design later on.

What Should Go Where?

Thinking about the user’s experience when viewing a dashboard is essential when it comes to deciding where specific information should go. Here are a few things to think about when determining your initial dashboard design plan.

Placement

There is only one thing to be said about placement: location, location, location. Your dashboard may be far from the real estate sector, but consider that users naturally give more attention to the left side of the screen. According to one eye-tracking study, users spent 80% of their viewing time on the left half of the screen and only 20% on the right half.

Specifically, users looked at the top-left corner of the screen the most, so this area of your dashboard is likely to receive the most attention. Place your most-used graphs and metrics here, along with any other visualizations you consider especially significant.

Don’t Hide Things

Similar to the point above regarding placement, you want to prioritize key information and make sure it’s easy to find. You can’t expect your end viewer to do much work to dig beneath the surface data presented. Information that requires additional clicking or scrolling to reach is unlikely to be discovered by viewers.

All things considered, an easy way to solidify your plan is to sketch a rough draft, either on paper or in any design application. This lets you experiment with placement and take a closer look at how certain elements complement each other.

2. Sometimes Less is More

We’ve all heard the common phrase “less is more,” and dashboard design is no exception to this philosophy. You want your dashboard to be clear, concise, and easy to read. Avoid including too many charts or any unnecessary information. While an abundance of charts and graphs might appeal to the data-driven enthusiast in you, they can be difficult for other viewers to read and understand. Minimizing the amount of data presented will keep your audience from feeling overwhelmed by information overload.

Choosing the Right Data Visualization

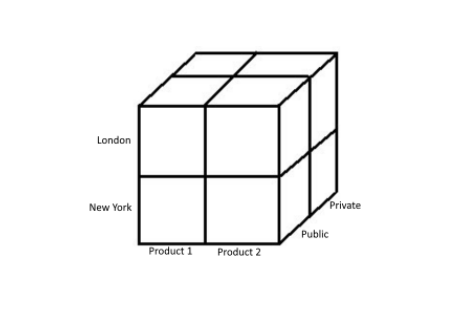

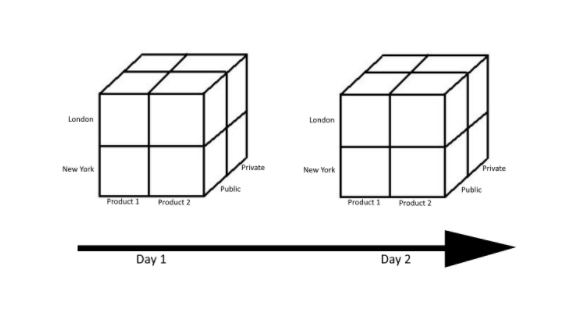

Choosing the most effective visualization for your data plays a key role in keeping your dashboard simple. The right choice depends on the type of data you are trying to visualize. Are you working with percentages? Data over a specific period of time? Are there any relationships present that you are trying to convey?

The many variables that make up your data will affect your final choice of visualization. Be sure to consider characteristics such as time and dates, hierarchies, and so on.

3. Keep the End Viewer in Mind

Your audience is just as critical to your dashboard’s design as the information being presented. It’s important to always keep the end viewer in mind and understand how they are actually using the presented information.

When determining the characteristics of your end viewer, ask yourself questions such as:

- Who will be viewing this dashboard on a daily basis?

- How often do my viewers work with the type of data being presented?

- How will my audience be viewing this dashboard? Will viewers be sharing it as a PDF?

The answers to these questions will help you determine how much descriptive information to include alongside your visualizations.

Overall, there are numerous elements to consider when developing your business dashboards. It’s vital to always keep your audience in mind and plan ahead. Keep these key tips in mind the next time you build a new dashboard to improve its design.