Kaggle Competition / GitHub Link

Intro

The objective of this Kaggle competition was to accurately predict the sale prices of homes in Ames, IA, using a provided training dataset of 1400+ homes & 79 features. The exercise allowed for both experimentation with and exploration of different strategies for feature engineering & advanced modeling.

EDA

To become familiar with the problem, we did some initial research on the town of Ames. As a college town, home to Iowa State University, everything (including real estate) can be tied to the academic calendar. The locations of airports & railroads were also noted, as well as which neighborhoods are rural vs. mobile homes vs. dense urban. Another interesting discovery was the Asbestos Disclosure Law, which requires sellers to notify buyers if the material is in or on their homes (such as in roof shingles) and which may have a direct impact on a home’s price.

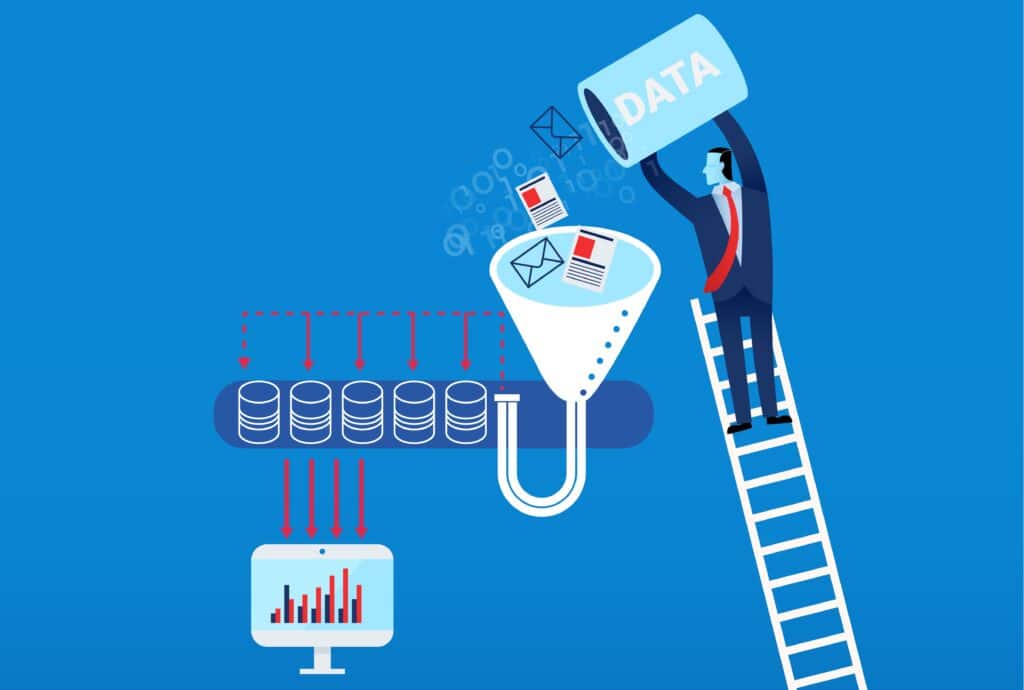

To acquaint ourselves with the dataset’s features, we divided them into Categorical & Quantitative groups, where some could be considered both. A function was written to visualize each feature through either box plots (categorical) or scatter plots (quantitative) to gain quick insights such as NA / 0-values, value/count distribution, evidence of a relationship with the target, or obvious outliers.

To dig in a little deeper, two functions, catdf() and quantdf(), were scripted to create a dataframe of summary details for each type of feature:

The CATDF dataframe includes the number of unique factors, the total set of factors, the mode, the mode percentage & the quantity of NAs. It also ran a simple linear regression with only the feature & sale price, and returned the score when the feature was converted into dummy variables, into a binary mode-vs.-rest variable, or into a quantitative variable (e.g. Poor = 1 while Excellent = 5). It would also suggest an action item for the specific feature depending on the results.
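The original catdf() is not shown here, so the following is only a minimal sketch of the kind of summary described above. The function name `catdf_sketch`, the helper `_r2`, and the toy data are assumptions made for illustration; only the dummy and mode-vs.-rest scores are reproduced.

```python
import pandas as pd
from sklearn.linear_model import LinearRegression


def _r2(X: pd.DataFrame, y: pd.Series) -> float:
    """In-sample R^2 of a plain linear regression of y on X."""
    return float(LinearRegression().fit(X, y).score(X, y))


def catdf_sketch(df: pd.DataFrame, cat_cols: list, target: str) -> pd.DataFrame:
    """Hypothetical stand-in for catdf(): one summary row per categorical feature."""
    rows = []
    for col in cat_cols:
        counts = df[col].value_counts()  # NAs excluded, sorted by frequency
        mode = counts.index[0]
        # Encoding 1: one dummy column per level (NA rows become all zeros).
        dummies = pd.get_dummies(df[col], dtype=float)
        # Encoding 2: a single binary column, mode vs. rest.
        is_mode = (df[col] == mode).astype(float).to_frame("is_mode")
        rows.append({
            "feature": col,
            "n_levels": int(counts.size),
            "levels": sorted(counts.index.astype(str)),
            "mode": mode,
            "mode_pct": float(counts.iloc[0] / len(df)),
            "n_na": int(df[col].isna().sum()),
            "dummy_r2": _r2(dummies, df[target]),
            "binary_r2": _r2(is_mode, df[target]),
        })
    return pd.DataFrame(rows).set_index("feature")


# Toy data for illustration only (prices in thousands).
toy = pd.DataFrame({
    "KitchenQual": ["TA", "Gd", "TA", None, "Ex", "TA"],
    "SalePrice": [150, 200, 160, 120, 300, 155],
})
summary = catdf_sketch(toy, ["KitchenQual"], "SalePrice")
```

Comparing `dummy_r2` with `binary_r2` gives a rough sense of whether a feature needs all of its levels or whether a mode-vs.-rest flag is enough.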

The QUANTDF dataframe includes the range of values, the mean, the number of outliers, NA & 0-values, the Pearson correlation with saleprice and a quick linear regression score. It also flags any high correlation with other variables, to alert us to potential multicollinearity issues. This proved particularly useful when comparing the TEST vs. TRAIN datasets: for example, patio sizes were larger overall in the TEST set, which may affect modeling performance if that feature were used.
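As a rough illustration of the quantitative summary, here is a sketch under stated assumptions: the name `quantdf_sketch`, the 1.5 x IQR outlier rule, the 0.8 correlation flag, and the toy columns are all hypothetical choices, not taken from the original quantdf().

```python
import pandas as pd


def quantdf_sketch(df: pd.DataFrame, num_cols: list, target: str,
                   corr_flag: float = 0.8) -> pd.DataFrame:
    """Hypothetical stand-in for quantdf(): one summary row per numeric feature."""
    corr = df[num_cols].corr()  # pairwise Pearson correlations between features
    rows = []
    for col in num_cols:
        s = df[col]
        q1, q3 = s.quantile(0.25), s.quantile(0.75)
        iqr = q3 - q1
        # Assumed outlier rule: outside 1.5 * IQR from the quartiles.
        n_outliers = int(((s < q1 - 1.5 * iqr) | (s > q3 + 1.5 * iqr)).sum())
        target_corr = s.corr(df[target])
        others = corr[col].drop(col)
        rows.append({
            "feature": col,
            "min": s.min(),
            "max": s.max(),
            "mean": s.mean(),
            "n_outliers": n_outliers,
            "n_na": int(s.isna().sum()),
            "n_zero": int((s == 0).sum()),
            "corr_with_target": target_corr,
            # For a one-feature linear regression, R^2 is the squared correlation.
            "r2": target_corr ** 2,
            "collinear_with": list(others[others.abs() > corr_flag].index),
        })
    return pd.DataFrame(rows).set_index("feature")


# Toy data for illustration only.
toy = pd.DataFrame({
    "area_a": [1000, 1200, 1500, 1800, 2000, 6000],
    "patio": [0, 0, 100, None, 50, 200],
    "SalePrice": [120, 140, 170, 200, 210, 400],
})
toy["area_b"] = toy["area_a"] * 2  # deliberately collinear with area_a
summary = quantdf_sketch(toy, ["area_a", "area_b", "patio"], "SalePrice")
```

Running the same function on the TRAIN and TEST frames and comparing the `mean` or `max` columns is one simple way to spot distribution shifts like the patio example.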

Feature Engineering & Selection

The second step was to add, remove, create & manipulate features that could provide value to our modeling process.

We produced multiple versions of our dataset to see which version of our feature engineering proved most beneficial when modeling.

Here are our dataset configurations, created to compare model performance:

| Dataset | Description |
| --- | --- |
| NOCAT | only quantitative features were used |
| QCAT | quantitative features + converted ordinal categorical (1-5 for Poor-Excellent) |
| DUMCAT | all original features, with all categorical features dummified |
| OPTKAT | some new features & categorical features converted based on the CATDF suggested actions |
| MATTCAT | all feature engineering (+ a few extras), intelligent ordinality, usually our best |

Missingness

Missingness was handled differently depending on the dataset configuration (see above). In the MATCAT dataset in particular, significant time and energy was spent meticulously choosing appropriate values for missing entries, using the general assumption that if a home had a null value for an area-size feature, the home did not include that area on its lot (i.e. if the Pool Square Footage was null, we assumed the property did not contain a pool). Some of the earlier versions of the models, such as our initial simple linear regression model, used mean imputation for numeric columns (after outlier removal) and mode imputation for categorical values prior to dummification.
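The two imputation rules described above can be sketched in a few lines of pandas. This is an illustration only: `fill_missing_sketch` and the toy columns are assumptions, and the real pipeline chose values feature by feature.

```python
import pandas as pd


def fill_missing_sketch(df: pd.DataFrame, area_cols: list,
                        num_cols: list, cat_cols: list) -> pd.DataFrame:
    """Hypothetical helper combining both imputation strategies."""
    out = df.copy()
    # Area-size features: a null is assumed to mean "the home has no such area".
    out[area_cols] = out[area_cols].fillna(0)
    # Other numeric features: mean imputation.
    out[num_cols] = out[num_cols].fillna(out[num_cols].mean())
    # Categorical features: mode imputation (done before dummification).
    for col in cat_cols:
        out[col] = out[col].fillna(out[col].mode().iloc[0])
    return out


# Toy data for illustration only.
toy = pd.DataFrame({
    "PoolArea": [None, 500.0, None],
    "LotFrontage": [60.0, None, 80.0],
    "MSZoning": ["RL", None, "RL"],
})
filled = fill_missing_sketch(toy, ["PoolArea"], ["LotFrontage"], ["MSZoning"])
```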

Feature Combinations

Upon analyzing the dataset, it was clear that several features needed to be combined prior to modeling. The dataset contained square-footage values for multiple types of porches and decks (screened-in, 3Season, OpenPorch, and PoolDeck), which combined neatly into a single Porch square-footage variable. The individual features were then removed from the dataset.

Other features were converted from square-footage units to binary categories, denoting whether or not the home contained that item, feature, or room.

The function written to create the MATCAT dataset allows the user to apply scaler transformations, and Box-Cox transformations for largely skewed features. These transformations generally improved the models’ accuracy, especially in the linear models.
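Both kinds of feature combination are straightforward in pandas. The sketch below is illustrative: the helper names, the new column names `PorchSF` and `HasPool`, and the toy columns are assumptions.

```python
import pandas as pd


def combine_porches(df: pd.DataFrame, porch_cols: list,
                    new_col: str = "PorchSF") -> pd.DataFrame:
    """Sum several porch/deck area columns into one, then drop the originals."""
    out = df.copy()
    out[new_col] = out[porch_cols].fillna(0).sum(axis=1)
    return out.drop(columns=porch_cols)


def to_has_flag(df: pd.DataFrame, area_col: str, flag_col: str) -> pd.DataFrame:
    """Replace a square-footage column with a 0/1 'home has this item' flag."""
    out = df.copy()
    out[flag_col] = (out[area_col].fillna(0) > 0).astype(int)
    return out.drop(columns=[area_col])


# Toy data for illustration only.
toy = pd.DataFrame({
    "ScreenPorch": [0, 120, 0],
    "3SsnPorch": [0, 0, 80],
    "OpenPorchSF": [40, 0, 20],
    "WoodDeckSF": [100, 0, 0],
    "PoolArea": [0, 512, 0],
})
porch_cols = ["ScreenPorch", "3SsnPorch", "OpenPorchSF", "WoodDeckSF"]
combined = to_has_flag(combine_porches(toy, porch_cols), "PoolArea", "HasPool")
```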
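A minimal sketch of the Box-Cox step follows, assuming a skewness cutoff of 0.75 and a shift of +1 so that zero-valued areas stay valid input. Both choices, the function name `boxcox_skewed`, and the synthetic data are assumptions, not the original settings.

```python
import numpy as np
import pandas as pd
from scipy import stats


def boxcox_skewed(df: pd.DataFrame, num_cols: list, skew_threshold: float = 0.75):
    """Box-Cox transform only the columns whose skewness exceeds the threshold."""
    out = df.copy()
    lambdas = {}
    for col in num_cols:
        values = out[col].to_numpy(dtype=float)
        if abs(stats.skew(values)) > skew_threshold:
            # Box-Cox needs strictly positive input, hence the +1 shift.
            transformed, lam = stats.boxcox(values + 1)
            out[col] = transformed
            lambdas[col] = lam  # keep lambda so the same transform can be reused
    return out, lambdas


# Synthetic data for illustration only.
rng = np.random.default_rng(0)
toy = pd.DataFrame({
    "skewed": rng.lognormal(size=200) * 1000,
    "symmetric": rng.normal(50, 5, size=200),
})
transformed, lambdas = boxcox_skewed(toy, ["skewed", "symmetric"])
```

Storing the fitted lambda per column matters in practice, because the test set should be transformed with the lambda estimated on the training set.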

Additionally, the MATCAT dataset makes use of intelligent ordinality when handling NA values for categorical features being converted to numeric. We found that in certain cases, having a poor-quality room was more detrimental to a home’s saleprice than not having that room or item at all. For instance, in our dataset, homes without a basement have a higher average saleprice than homes with a basement of the lowest quality. In cases such as this one, NA values were given the numerical value that most closely matched the average saleprice of homes with NA for that category.
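One way to read the rule above is: encode the quality levels as 1 to 5, then give NA the code of the level whose average saleprice is nearest to the NA group's average. The sketch below implements that reading; the function name `ordinal_with_smart_na` and the toy data are hypothetical, and the original implementation may differ.

```python
import pandas as pd


def ordinal_with_smart_na(df: pd.DataFrame, col: str, target: str,
                          order: list) -> pd.Series:
    """Encode an ordinal column, placing NA next to the most similar level."""
    codes = {level: i + 1 for i, level in enumerate(order)}  # worst=1 ... best=n
    encoded = df[col].map(codes)
    level_means = df.groupby(col)[target].mean()  # NA rows are excluded here
    na_mean = df.loc[df[col].isna(), target].mean()
    if pd.notna(na_mean):
        # Level whose average price is closest to the NA group's average price.
        closest = (level_means - na_mean).abs().idxmin()
        encoded = encoded.fillna(codes[closest])
    return encoded


# Toy data for illustration only (prices in thousands).
toy = pd.DataFrame({
    "BsmtQual": ["Po", "Po", "Fa", "TA", "TA", "Gd", None, None],
    "SalePrice": [80, 90, 110, 140, 150, 200, 100, 120],
})
encoded = ordinal_with_smart_na(toy, "BsmtQual", "SalePrice",
                                order=["Po", "Fa", "TA", "Gd", "Ex"])
```

In this toy example the homes with no basement average 110, which matches the "Fa" level, so NA is encoded as 2 rather than 0 and sits above the poorest-quality basements.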

Other feature selection strategies used were:

- Starting with all of the features, running a while-loop VIF analysis to remove anything > 5

- Starting with a single feature, adding new features iff they contribute to a better AIC/BIC score

- Converting selected features to PCA components and modeling with the new vectors

- Using Ridge/Lasso to remove features through penalization

- Using the RandomForest importance listing to select a top subset of features for decision tree splits
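The first strategy in the list, the while-loop VIF analysis, can be sketched as follows. This version computes VIFs from the inverse of the feature correlation matrix rather than calling a library routine (statsmodels provides `variance_inflation_factor` as an alternative); the function names and synthetic data are assumptions, and the sketch does not handle exactly collinear columns, for which the matrix is singular.

```python
import numpy as np
import pandas as pd


def vif_series(X: pd.DataFrame) -> pd.Series:
    """VIF per column, taken from the diagonal of the inverse correlation matrix."""
    corr = np.corrcoef(X.to_numpy(dtype=float), rowvar=False)
    return pd.Series(np.diag(np.linalg.inv(corr)), index=X.columns)


def drop_high_vif(X: pd.DataFrame, threshold: float = 5.0) -> pd.DataFrame:
    """Repeatedly drop the feature with the largest VIF until all are <= threshold."""
    X = X.copy()
    while X.shape[1] > 1:
        vif = vif_series(X)
        if vif.max() <= threshold:
            break
        X = X.drop(columns=vif.idxmax())
    return X


# Synthetic data for illustration only: c is almost exactly a + b.
rng = np.random.default_rng(0)
a = rng.normal(size=100)
b = rng.normal(size=100)
toy = pd.DataFrame({"a": a, "b": b, "c": a + b + 0.01 * rng.normal(size=100)})
kept = drop_high_vif(toy, threshold=5.0)
```

Dropping one feature at a time and recomputing is the important design choice here, because removing a single column can bring the VIFs of the remaining columns back under the threshold.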

Models & Tuning

Linear Modeling – Ridge, Lasso & ElasticNet were used, with GridSearchCV optimizing alpha and l1_ratio. Since many significant features have a clear linear relationship with the target variable, these models gave a higher score than the non-linear models.
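A minimal sketch of such a search with scikit-learn is shown below. The synthetic data, the grid values, the scaling step, and the RMSE scoring are all assumptions chosen for illustration, not the settings used in the competition.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import ElasticNet
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the housing features and target.
X, y = make_regression(n_samples=200, n_features=20, n_informative=5,
                       noise=10.0, random_state=0)

# Scale inside the pipeline so each CV fold is scaled on its own training part.
pipe = make_pipeline(StandardScaler(), ElasticNet(max_iter=10_000))

# Assumed grid; l1_ratio=1.0 corresponds to a pure Lasso penalty.
param_grid = {
    "elasticnet__alpha": [0.01, 0.1, 1.0, 10.0],
    "elasticnet__l1_ratio": [0.1, 0.5, 0.9, 1.0],
}

search = GridSearchCV(pipe, param_grid, cv=5,
                      scoring="neg_root_mean_squared_error")
search.fit(X, y)
best_params = search.best_params_
```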

Non-Linear Modeling – Random Forest (RF), Gradient Boosting (GBR) and XGBoost were used, with GridSearchCV optimizing MaxFeatures for RF, as well as MaxDepth & SubSample for GBR. Performance was not improved by using our optimized dataset, since that dataset was optimized for linear regression only. In addition, it was difficult to control over-fitting when using the GBR model.

Model Stacking – H2O.ai is an open-source AutoML platform. When it was asked to predict saleprice based on our MATCAT dataset, the AutoRegressor utilized various models (RF, GLM, XGBoost, GBM, Deep Neural Nets, Stacked Ensembles, etc.) that ultimately led to our best Kaggle score. While this model’s findings are more difficult to interpret than those of traditional machine learning techniques, the AutoML model neutralizes any major disadvantages a specific model may have while taking the best of each family.
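In scikit-learn the corresponding parameters are named `max_features`, `max_depth`, and `subsample`. The sketch below tunes them on synthetic data; the grids, the number of trees, and the fold count are assumptions kept small so the example runs quickly.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.model_selection import GridSearchCV

# Synthetic stand-in for the housing features and target.
X, y = make_regression(n_samples=150, n_features=10, n_informative=4,
                       noise=5.0, random_state=0)

# Random Forest: tune the fraction of features considered at each split.
rf_search = GridSearchCV(
    RandomForestRegressor(n_estimators=50, random_state=0),
    {"max_features": [0.3, 0.6, 1.0]},
    cv=3,
)
rf_search.fit(X, y)

# Gradient Boosting: shallower trees and row subsampling both limit over-fitting.
gbr_search = GridSearchCV(
    GradientBoostingRegressor(n_estimators=50, random_state=0),
    {"max_depth": [2, 3], "subsample": [0.7, 1.0]},
    cv=3,
)
gbr_search.fit(X, y)
```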

Collaboration Tools

In addition to our standard collaboration tools (GitHub, Slack, Google Slides), we also used Trello to organize our thoughts on the different features & Google Colab to work on the same Jupyter notebook file. This allowed us to work together virtually anywhere & at any time.
